Trust-ML

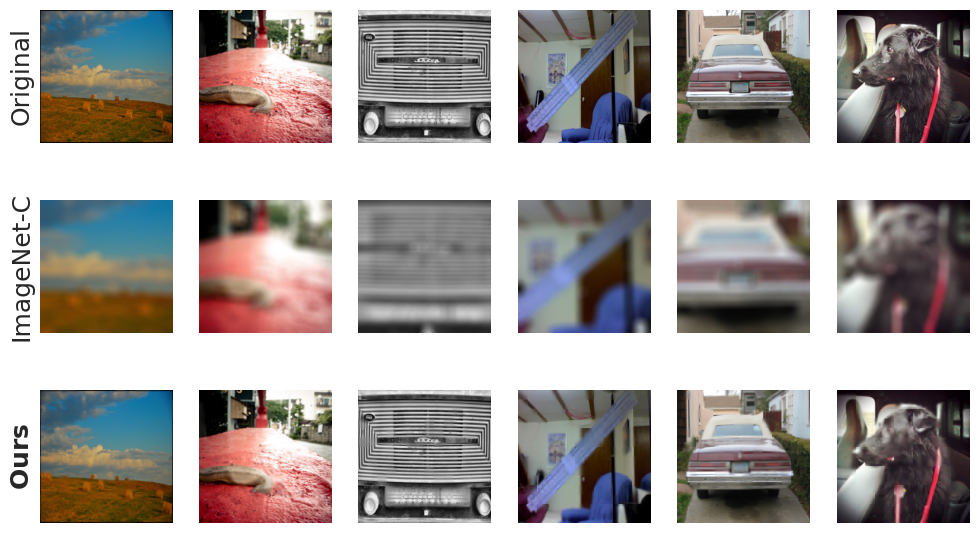

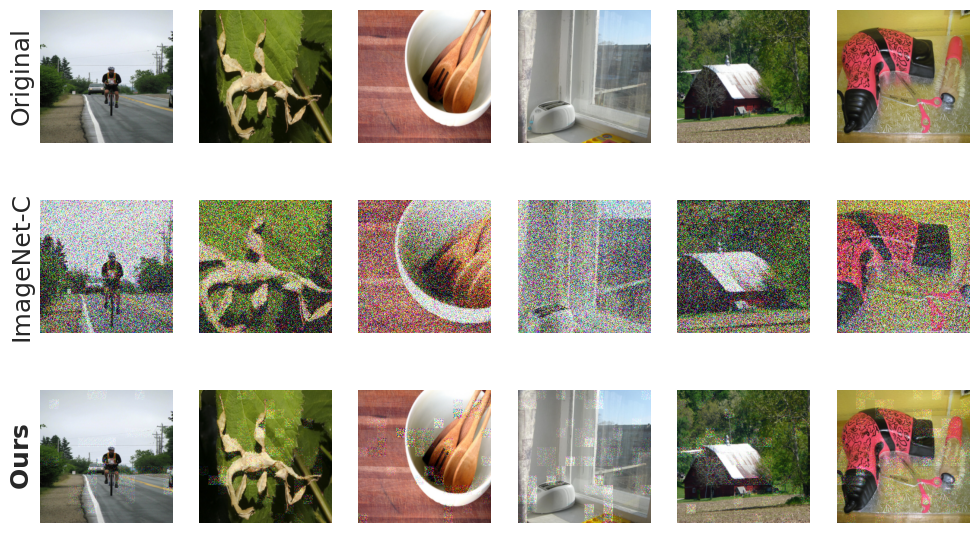

Trust-ML is a machine learning-driven adversarial data generator that introduces naturally occurring distortions to an original dataset to produce an adversarial subset. The framework lets users audit image classification models with a customizable mix of distortions, evaluating robustness against both true negatives and false positives. Using RLAB, it generates adversarial samples more effectively and efficiently than other distortion-based benchmarks. In our experiments, samples generated with our framework degrade the accuracy of state-of-the-art adversarial robustness methods (as tracked by RobustBench) more than ImageNet-C and CIFAR-10-C do. Examples of images generated by our framework are shown below.

A subset of images from the original ImageNet, ImageNet-C, and our distorted version of ImageNet. The images shown are for severity level 5 of the Gaussian blur distortion. At the same severity level, our images retain much more clarity while being more challenging to classify.

A subset of images from the original ImageNet, ImageNet-C, and our distorted version of ImageNet. The images shown are for severity level 5 of the Gaussian noise distortion. At the same severity level, our images retain much more clarity while being more challenging to classify.

Features

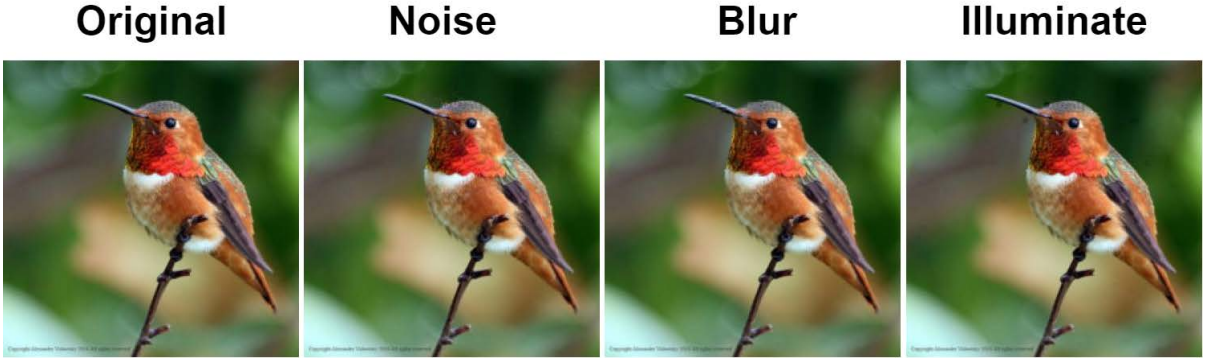

Generate adversarial samples for any type of distortion (see examples in figures below)

Generate samples at multiple distortion levels (severity/difficulty levels)

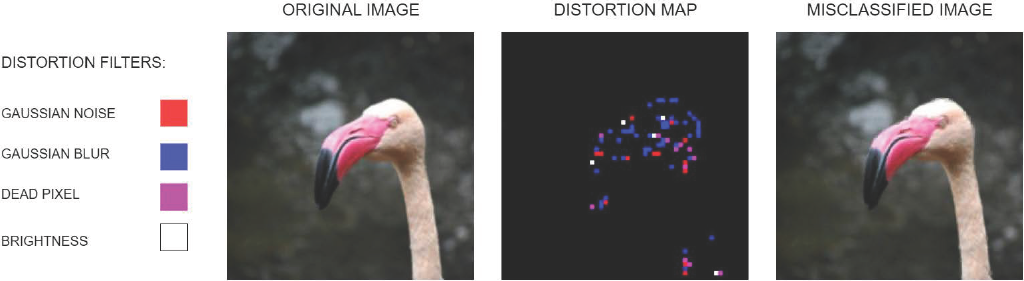

Supports a customizable mix of distortions during generation (see the lower figure below)

Robustness can be audited for both true negatives and false positives

Because RLAB requires only black-box access, any model (or ensemble) can be used as the victim in the generator

Some examples of distortions that are supported by Trust-ML

Trust-ML supports a customizable mix of distortions in the generator
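To make the ideas of severity levels, a distortion mix, and black-box access concrete, here is a minimal NumPy sketch. It is not Trust-ML's API and it does not implement RLAB: the function names, the noise and brightness scales, and the toy victim are all hypothetical, and the mix is fixed by hand rather than chosen by a learned policy. The only point it illustrates is that the check needs nothing from the victim beyond its predicted label.

```python
import numpy as np

# All names and numeric scales below are illustrative assumptions,
# not Trust-ML's actual functions or severity definitions.

def gaussian_noise(image, severity, rng):
    """Add zero-mean Gaussian noise whose sigma grows with severity."""
    sigma = 0.04 * severity
    noisy = image + rng.normal(0.0, sigma, size=image.shape)
    return np.clip(noisy, 0.0, 1.0)

def brightness(image, severity, rng):
    """Shift every pixel up by an amount that grows with severity.

    rng is unused; it is accepted so all distortions share one signature.
    """
    return np.clip(image + 0.05 * severity, 0.0, 1.0)

def apply_mix(image, mix, rng):
    """Apply each (distortion, severity) pair in order."""
    out = image
    for distort, severity in mix:
        out = distort(out, severity, rng)
    return out

def is_adversarial(predict, original, distorted):
    """Black-box check: only the victim's predicted labels are compared."""
    return predict(original) != predict(distorted)

def toy_victim(image):
    """Stand-in classifier: label 1 if the image is bright, else 0."""
    return int(image.mean() > 0.5)

rng = np.random.default_rng(0)
image = np.full((32, 32, 3), 0.45)           # pixel values in [0, 1]
mix = [(gaussian_noise, 1), (brightness, 2)]  # hand-picked mix
distorted = apply_mix(image, mix, rng)
print(is_adversarial(toy_victim, image, distorted))
```

Because `is_adversarial` takes any callable, the same check would work with a single model or an ensemble wrapped in one prediction function.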

Getting Started

Head to the Installation page to get set up with our software

Head to the Usage page to get familiar with generating your own distorted data

You can evaluate your models on the distorted versions of ImageNet and CIFAR-10 used in the paper, which are available from Zenodo (see Usage for the dataset structure)
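As a rough illustration of what evaluating on a distorted dataset measures, the sketch below computes the accuracy drop between clean and distorted samples. It assumes nothing about the Zenodo dataset layout (see Usage for that): the samples are hypothetical in-memory `(input, label)` pairs, and `threshold_model` is a toy stand-in for a real classifier.

```python
def accuracy(predict, samples):
    """Fraction of (input, label) pairs that the model labels correctly."""
    if not samples:
        raise ValueError("samples must be non-empty")
    correct = sum(1 for x, y in samples if predict(x) == y)
    return correct / len(samples)

def accuracy_degradation(predict, clean, distorted):
    """Clean accuracy minus distorted accuracy (larger means less robust)."""
    return accuracy(predict, clean) - accuracy(predict, distorted)

def threshold_model(x):
    """Toy classifier over scalar inputs, used only for this example."""
    return int(x > 0.5)

# Hypothetical data: each distorted input keeps its original label.
clean = [(0.2, 0), (0.4, 0), (0.7, 1), (0.9, 1)]
distorted = [(0.2, 0), (0.6, 0), (0.7, 1), (0.4, 1)]

drop = accuracy_degradation(threshold_model, clean, distorted)
print(f"accuracy drop: {drop:.2f}")
```

With a real model, `predict` would wrap the model's inference call and the sample lists would be loaded from the downloaded datasets.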

Links

We host the source code for our benchmark on GitHub.

We provide our distorted versions of the ImageNet and CIFAR-10 datasets for download on Zenodo.