# Automated HPE Ezmeral Runtime Enterprise deployment

Ansible playbooks automate the deployment of HPE Ezmeral Runtime Enterprise (HPERE, also referred to as ERE) and eliminate manual intervention. The playbooks provide the following functions for the installation user:

- Install the SLES OS on bare-metal servers

- Prepare the hosts for HPERE implementation

- Set up a docker registry to deploy ERE in an air-gapped environment

- Install the controller nodes

- Add gateway nodes, delete gateway nodes, or enable gateway HA

- Add and delete shadow and arbiter controllers

- Enable and disable controller HA

- Add or remove hosts on the Kubernetes cluster

- Create and delete Kubernetes clusters

- Create and delete tenants

# Prerequisites

A CentOS 7 or RHEL installer machine with the following configuration is required to initiate the OS deployment process:

- At least 600 GB of disk space (especially in the "/" partition), 4 CPU cores, and 8 GB of RAM.

- One network interface with a static IP address, configured on the same network as the management plane of the bare-metal servers and with access to the internet.

- Python 3.6 or later is installed, along with the latest version of the associated pip.

- Ansible 2.9 is installed.

- The OS ISO image is present in the HTTP file path on the installer machine.

- SELinux is disabled.

A minimum of five (5) nodes running SLES 15 SP3, CentOS 7.6 or later, or RHEL is required. Nodes can be VMs or bare-metal servers.

The playbooks download the required tools (jq), which must be placed in /usr/local/bin.

Obtain the URL of the HPE Ezmeral Runtime Enterprise bundle (using an S3 bucket).
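The installer-machine requirements listed above can be collected into a quick self-check before starting. The following is a minimal sketch and is not part of the HPE playbooks: the function name `unmet_installer_requirements` and its parameters are hypothetical, and the thresholds simply restate the prerequisites. It assumes the 600 GB figure refers to the total size of the "/" file system.

```python
import os
import shutil
import sys


def unmet_installer_requirements(disk_gb, cpu_cores, ram_gb, python_version, selinux_status):
    """Return a list of unmet prerequisites; an empty list means all checks passed."""
    problems = []
    if disk_gb < 600:
        problems.append("need at least 600 GB of disk space in /")
    if cpu_cores < 4:
        problems.append("need at least 4 CPU cores")
    if ram_gb < 8:
        problems.append("need at least 8 GB of RAM")
    if tuple(python_version[:2]) < (3, 6):
        problems.append("need Python 3.6 or later")
    if selinux_status.strip().lower() != "disabled":
        problems.append("SELinux must be disabled")
    return problems


if __name__ == "__main__":
    # Disk size and core count are read from the system.
    disk_gb = shutil.disk_usage("/").total / 10**9
    cores = os.cpu_count() or 0
    # RAM and SELinux status are entered by hand in this sketch
    # (for example, from the output of `free -g` and `getenforce`).
    for problem in unmet_installer_requirements(disk_gb, cores, 8, sys.version_info, "Disabled"):
        print("UNMET:", problem)
```

Keeping the checks in a pure function makes it easy to extend the list (for example, with the Ansible version) without touching the code that gathers the values.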

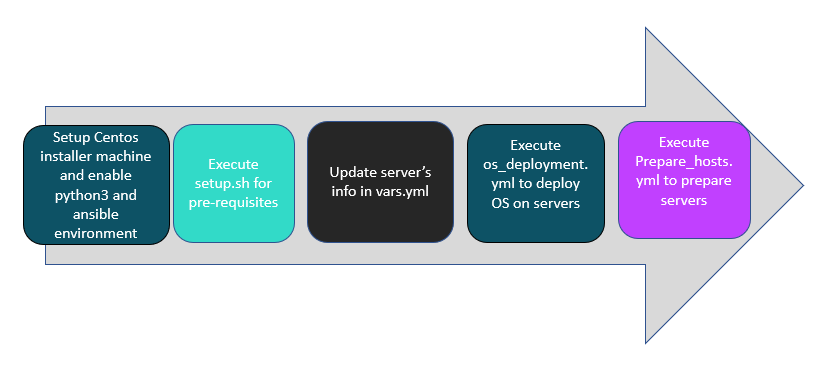

Figure 11. Workflow diagram for Automated HPE Ezmeral Runtime Enterprise deployment

# Automated Deployment

- Update the values in vars.yml and hosts inventory file according to your environment.

# Input file changes for deploying operating system on bare-metal nodes

Figure 12: High Level Flow diagram of the OS Deployment Process

# SUSE hosts Configuration

* 2 network interfaces for the production network

* 1 local drive to be used as the boot device

* Boot mode is set to UEFI

* Boot order – Hard disk

* Secure Boot – disabled

The vars.yml file contains a config section and a server section. Update the values of all variables in both sections.

a. Edit the config section of the vars.yml file in the "$BASE_DIR/Lite_Touch_Installation/group_vars/all/" folder with the following command, and add the details of the web server and the operating system to be installed. The default password for the Ansible Vault file "vars.yml" is changeme.

Command to edit vars.yml

> ansible-vault edit vars.yml

b. Example values for the input configuration are as follows:

    {
        "HTTP_server_base_url" : "http://10.0.x.x/",
        "HTTP_file_path" : "/usr/share/nginx/html/",
        "OS_type" : "sles15",
        "OS_image_name" : "<os_iso_image_name_with_extension>"
    }

NOTE

The acceptable value for the "OS_type" variable is "sles15", for SUSE 15 SP3.
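As an illustration of how the config section relates to the prerequisites, the sketch below checks that "OS_type" has the documented value and that the ISO named in "OS_image_name" exists under "HTTP_file_path" on the installer machine. This is a hypothetical helper, not part of the playbooks; the function name `check_config_section` is an assumption, and the sketch expects the config section to be already loaded into a Python dictionary.

```python
import os


def check_config_section(config):
    """Return a list of problems found in the config section (empty if none)."""
    problems = []
    # The only value documented for OS_type is "sles15".
    if config.get("OS_type") != "sles15":
        problems.append('OS_type must be "sles15"')
    # The ISO image is expected in the HTTP file path on the installer machine.
    iso_path = os.path.join(config.get("HTTP_file_path", ""), config.get("OS_image_name", ""))
    if not os.path.isfile(iso_path):
        problems.append("ISO image not found at " + iso_path)
    return problems


if __name__ == "__main__":
    # Placeholder values; replace them with the values from your vars.yml.
    sample = {
        "HTTP_server_base_url": "http://10.0.x.x/",
        "HTTP_file_path": "/usr/share/nginx/html/",
        "OS_type": "sles15",
        "OS_image_name": "<os_iso_image_name_with_extension>",
    }
    for problem in check_config_section(sample):
        print("PROBLEM:", problem)
```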

c. Edit the server section of the vars.yml file in the "$BASE_DIR/Lite_Touch_Installation/group_vars/all/" folder. Example values for the input configuration for deploying SLES 15 SP3 are as follows.

Note

- It is recommended to provide a complex password for the “Host_Password” variable.

    [
        {
            "Server_serial_number" : "MXxxxxxDP",
            "ILO_Address" : "10.0.x.x",
            "ILO_Username" : "username",
            "ILO_Password" : "password",
            "Hostname" : "sles01.twentynet.local",
            "NodeRole" : "controller",
            "Bonding_Interface1" : "eth*",
            "Bonding_Interface2" : "eth*",
            "Host_IP" : "20.x.x.x",
            "Host_Username" : "root",
            "Host_Password" : "Password",
            "Host_Netmask" : "255.x.x.x",
            "Host_Prefix" : "8",
            "Host_Gateway" : "20.x.x.x",
            "Host_DNS" : "20.x.x.x",
            "Host_Search" : "twentynet.local",
            "GPU_Host" : "yes"
        },
        {
            "Server_serial_number" : "MXxxxxxDQ",
            "ILO_Address" : "10.0.x.x",
            "ILO_Username" : "username",
            "ILO_Password" : "password",
            "Hostname" : "sles02.twentynet.local",
            "NodeRole" : "gateway",
            "Bonding_Interface1" : "eth*",
            "Bonding_Interface2" : "eth*",
            "Host_IP" : "20.0.x.x",
            "Host_Username" : "root",
            "Host_Password" : "Password",
            "Host_Netmask" : "255.x.x.x",
            "Host_Prefix" : "8",
            "Host_Gateway" : "20.x.x.x",
            "Host_DNS" : "20.x.x.x",
            "Host_Search" : "twentynet.local",
            "GPU_Host" : "No"
        }
    ]

NOTE

Acceptable values for the "GPU_Host" variable are "yes" for hosts that have GPU cards and "No" for hosts that do not have GPU cards.
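Because each server entry carries both "Host_Netmask" and "Host_Prefix", the two values must describe the same mask (for example, a prefix of 8 corresponds to 255.0.0.0). The following sketch shows one way to check an entry before running the playbooks. It is a hypothetical helper, not part of the repository: the name `check_server_entry` is an assumption, the key list is taken from the example above, and the check assumes "GPU_Host" must match the documented spelling exactly.

```python
import ipaddress

# Keys that appear in every server entry of the example above.
REQUIRED_KEYS = {
    "Server_serial_number", "ILO_Address", "ILO_Username", "ILO_Password",
    "Hostname", "NodeRole", "Bonding_Interface1", "Bonding_Interface2",
    "Host_IP", "Host_Username", "Host_Password", "Host_Netmask",
    "Host_Prefix", "Host_Gateway", "Host_DNS", "Host_Search", "GPU_Host",
}


def check_server_entry(entry):
    """Return a list of problems found in one server entry (empty if none)."""
    missing = sorted(REQUIRED_KEYS - set(entry))
    if missing:
        return ["missing keys: " + ", ".join(missing)]
    problems = []
    # Assumption: the value must match the documented spelling exactly.
    if entry["GPU_Host"] not in ("yes", "No"):
        problems.append('GPU_Host must be "yes" or "No"')
    try:
        # Derive the dotted netmask that corresponds to the prefix length.
        network = ipaddress.ip_network("0.0.0.0/" + str(entry["Host_Prefix"]))
    except ValueError:
        problems.append("Host_Prefix is not a valid IPv4 prefix length")
    else:
        expected = str(network.netmask)
        if entry["Host_Netmask"] != expected:
            problems.append(
                "Host_Netmask {} does not match Host_Prefix {} (expected {})".format(
                    entry["Host_Netmask"], entry["Host_Prefix"], expected
                )
            )
    return problems


if __name__ == "__main__":
    # Placeholder entry with a deliberately inconsistent netmask.
    example = {key: "placeholder" for key in REQUIRED_KEYS}
    example.update({"GPU_Host": "yes", "Host_Prefix": "8", "Host_Netmask": "255.255.255.0"})
    print(check_server_entry(example))
```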

The generic settings applied by the kickstart file for SLES are as follows. Review the kickstart file (autoinst.xml) and modify it to suit your requirements.

- Minimal Installation

- Language – en_US

- Keyboard & layout – US

- Partition

  - /boot/efi, fstype="vfat", size = 500MiB
  - root, size = 150G
  - srv, size = 100G
  - swap, size = 62.96G
  - var, size = 100G
  - home, size = 25G

NOTE

The specified partitions are in line with the HPERE implementation. It is advised not to change them.

- Timezone – America/New_York

NIC teaming is performed using the devices specified in the Bonding_Interface fields in the server section of vars.yml. The team is then assigned the Host_IP, netmask, and domain defined in the input file.

- Signature handling (accepting a file without a checksum, with a non-trusted GPG key, an unsigned file, and so on) is disabled by default to avoid pop-ups and warnings and to allow an unattended installation. These properties can be modified as required in kickstart_files/autoinst.xml.

    <accept_file_without_checksum config:type="boolean">true</accept_file_without_checksum>
    <accept_non_trusted_gpg_key config:type="boolean">true</accept_non_trusted_gpg_key>
    <accept_unknown_gpg_key config:type="boolean">true</accept_unknown_gpg_key>
    <accept_unsigned_file config:type="boolean">true</accept_unsigned_file>
    <accept_verification_failed config:type="boolean">false</accept_verification_failed>
    <import_gpg_key config:type="boolean">true</import_gpg_key>

# Input file changes to prepare SLES 15 hosts for HPERE installation

- Update the vars.yml file with the details required for registration.

Edit vars.yml in the "$BASE_DIR/Lite_Touch_Installation/group_vars/all/" folder with the following command and enter the details listed below.

Command to edit vars.yml

> ansible-vault edit vars.yml

Note

Default password for Ansible Vault file vars.yml is “changeme”.

Mode: There are two modes for registering the server with a registration server: RMT and user email. Permissible values are 1 (register the SUSE server with an RMT server) and 2 (register the SUSE server using an email ID and registration codes).

Mode 1: Registration using RMT server.

- Update the Mode:1 section in vars.yml with the following inputs

- RMT_URL: IP address of the RMT server.

Mode 2: Registration using User Email Id and RegCode

- Update the Mode:2 section in vars.yml with the following inputs

- email: User email ID used for server registration.

- base_code: Registration code used for server registration.

- caas_code: Registration code for the CaaS module.

- ha_code: Registration code for the HA module.

Note:

1. The email ID and registration codes apply only to Mode 2; otherwise, leave them blank.

2. sles_packages: SLES modules to be installed, separated by commas.

3. zypper_packages: zypper packages to be installed, separated by commas.

- Example values for the registration details in vars.yml are as follows.

    # Mode 1 for RMT Registration and Mode 2 for User Registration
    Mode: 2
    Email: jim.***@***.com
    base_code: 8BAB9EC****
    caas_code: 21E02******
    ha_code: 87D73*****
    RMT_URL: http://20.**.**.**
    sles_packages: [sle-module-public-cloud/15.6.0/x86_64,
      sle-module-legacy/15.6.0/x86_64, sle-module-python2/15.6.0/x86_64,
      sle-module-containers/15.6.0/x86_64,
      sle-module-basesystem/15.6.0/x86_64,
      sle-module-desktop-applications/15.6.0/x86_64]
    zypper_packages: [iputils, bind-utils, autofs, libcgroup1, kernel-default-devel, gcc-c++, perl, pciutils, rsyslog, firewalld]
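The rule that each registration mode needs its own set of inputs can be expressed as a small check. The sketch below is illustrative only and is not taken from the playbooks: `check_registration` is a hypothetical name, and the key spellings ("Mode", "Email", "RMT_URL", and the three registration codes) follow the example values above.

```python
def check_registration(settings):
    """Return a list of problems with the registration settings (empty if none)."""
    mode = settings.get("Mode")
    if mode == 1:
        # Mode 1 registers the server with an RMT server.
        required = ["RMT_URL"]
    elif mode == 2:
        # Mode 2 registers the server with an email ID and registration codes.
        required = ["Email", "base_code", "caas_code", "ha_code"]
    else:
        return ["Mode must be 1 (RMT) or 2 (email and registration codes)"]
    return [
        "{} is required for Mode {}".format(key, mode)
        for key in required
        if not settings.get(key)
    ]


if __name__ == "__main__":
    # Placeholder values; ha_code is intentionally left out.
    sample = {"Mode": 2, "Email": "user@example.com", "base_code": "XXXX", "caas_code": "YYYY"}
    for problem in check_registration(sample):
        print("PROBLEM:", problem)
```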

Note:

Do not modify sles_packages and zypper_packages. These packages are required for the HPERE implementation, and changes to this section may impact the installation.

During playbook execution, installing the PackageHub module might throw an error on the first attempt. This is a known SUSE issue, and it is handled by reinstalling the module.

Link for reference: https://www.suse.com/support/kb/doc/?id=000019505

- If the script fails while checking the internet connection from the hosts, verify internet connectivity on the hosts and then re-run the script.

- An error while checking the AppArmor service is expected: the playbook verifies the apparmor service status on the hosts and, if the service is running, stops and disables it.

# Automated ERE Deployment

- Use the following commands to edit the vars.yml file. The vars.yml file contains the environment configuration variables, and the hosts inventory file contains the host IP addresses.

> cd $BASE_DIR/Lite_Touch_Installation/

> ansible-vault edit group_vars/all/vars.yml

HPERE can be deployed by running site.yml or by running the individual playbooks. Each playbook is described later in this document.

- To build complete setup:

> ansible-playbook -i hosts site.yml --ask-vault-pass

- To deploy through individual playbooks, run them in the following sequence:

> ansible-playbook -i hosts playbooks/os_deployment.yml --ask-vault-pass

> ansible-playbook -i hosts playbooks/prepare_hosts.yml --ask-vault-pass

> ansible-playbook -i hosts playbooks/download-tools.yml --ask-vault-pass

> ansible-playbook -i hosts playbooks/controller.yml --ask-vault-pass

> ansible-playbook -i hosts playbooks/gateway-add.yml --ask-vault-pass

> ansible-playbook -i hosts playbooks/shadow-arbiter-add.yml --ask-vault-pass

> ansible-playbook -i hosts playbooks/controller-ha.yml --ask-vault-pass

> ansible-playbook -i hosts playbooks/k8s-add-hosts.yml --ask-vault-pass

> ansible-playbook -i hosts playbooks/k8s-create-cluster.yml --ask-vault-pass

> ansible-playbook -i hosts playbooks/k8s-create-tenant.yml --ask-vault-pass
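When the individual playbooks are run one by one, the order matters and a failed step should stop the sequence. The sketch below shows one possible wrapper, under stated assumptions: `build_commands` and `run_in_order` are hypothetical helper names, the playbook list repeats the sequence above, and the optional `vault_password_file` argument uses the standard `--vault-password-file` option of `ansible-playbook` as an alternative to answering the `--ask-vault-pass` prompt for every playbook.

```python
import subprocess

# Playbooks in the order given above.
PLAYBOOK_SEQUENCE = [
    "os_deployment.yml",
    "prepare_hosts.yml",
    "download-tools.yml",
    "controller.yml",
    "gateway-add.yml",
    "shadow-arbiter-add.yml",
    "controller-ha.yml",
    "k8s-add-hosts.yml",
    "k8s-create-cluster.yml",
    "k8s-create-tenant.yml",
]


def build_commands(inventory="hosts", vault_password_file=None):
    """Build one ansible-playbook command (as an argument list) per playbook."""
    if vault_password_file:
        vault_args = ["--vault-password-file", vault_password_file]
    else:
        vault_args = ["--ask-vault-pass"]
    return [
        ["ansible-playbook", "-i", inventory, "playbooks/" + name] + vault_args
        for name in PLAYBOOK_SEQUENCE
    ]


def run_in_order(commands):
    """Run the commands one after another and stop at the first failure."""
    for command in commands:
        # check=True raises CalledProcessError on a non-zero exit code,
        # so the remaining playbooks are not started.
        subprocess.run(command, check=True)


if __name__ == "__main__":
    # Print the commands only; call run_in_order(build_commands()) from
    # $BASE_DIR/Lite_Touch_Installation/ to actually execute them.
    for command in build_commands():
        print(" ".join(command))
```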

Playbook details:

- site.yml

This playbook contains the script to deploy HPE Ezmeral Runtime Enterprise, from OS deployment through tenant configuration.

- os_deployment.yml

This playbook contains the scripts to deploy the SLES OS on bare-metal servers.

- prepare_hosts.yml

This playbook contains the script to prepare the hosts for HPERE deployment.

- download-tools.yml

This playbook downloads jq to /usr/local/bin on the installer machine and makes it executable.

NOTE

If you face any issues while running the download-tools.yml playbook, download the tools manually from the following links, place them under /usr/local/bin, and make them executable.

- controller.yml

This playbook contains the script to deploy and configure the controller based on the configuration details provided in the vars.yml file.

- gateway-add.yml

This playbook contains the script to add gateways. Multiple gateways can be added by providing their IP and host information in the vars.yml file.

- shadow-arbiter-add.yml

This playbook contains the script to add arbiter and shadow controller nodes. Provide the arbiter and shadow details in vars.yml.

- controller-ha.yml

This playbook contains the script to enable controller HA. Provide the virtual IP address with its FQDN in the vars.yml file to configure controller HA, and make sure the virtual IP details are present in the DNS entries.

Run the playbooks/shadow-arbiter-add.yml playbook first to add the required arbiter and shadow controller nodes, and then run the playbooks/controller-ha.yml playbook.

- k8s-add-hosts.yml

This playbook contains the script to add Kubernetes nodes and Data Fabric nodes. Provide the Kubernetes node information in the vars.yml file.

- k8s-create-cluster.yml

This playbook contains the script to create the Kubernetes cluster and the Data Fabric cluster. Provide the cluster details in the vars.yml file.

- k8s-create-tenant.yml

This playbook contains the script to create a tenant. Provide the tenant details in the vars.yml file.

Uninstall Information:

To uninstall HPE Ezmeral Runtime Enterprise and start a fresh installation, use the following playbook.

> ansible-playbook -i hosts playbooks/uninstall-bds.yml --ask-vault-pass

Other Playbooks:

Run the following command to disable controller HA.

> ansible-playbook -i hosts playbooks/disable-controller-ha.yml --ask-vault-pass

Run the following command to delete the arbiter and shadow controller nodes.

> ansible-playbook -i hosts playbooks/shadow-arbiter-delete.yml --ask-vault-pass

Run the following command to delete Kubernetes nodes.

> ansible-playbook -i hosts playbooks/k8s-delete-hosts.yml --ask-vault-pass

Run the following command to delete the tenant.

> ansible-playbook -i hosts playbooks/k8s-delete-tenant.yml --ask-vault-pass

Run the following command to delete the cluster.

> ansible-playbook -i hosts playbooks/k8s-cluster-delete.yml --ask-vault-pass

Run the following command to delete a gateway.

> ansible-playbook -i hosts playbooks/gateway-delete.yml --ask-vault-pass

# ERE deployment through air-gapped environment

To deploy ERE in air-gapped mode, perform the following steps:

- To set up the docker registry (registry server), update the details under the airgap section in vars.yml and run the following command.

> ansible-playbook -i hosts playbooks/setup_docker_registry.yml --ask-vault-pass

- setup_docker_registry.yml

This playbook contains the script to create and configure the docker registry and to copy all required docker images, based on the configuration details provided in the vars.yml file.

NOTE:

The setup_docker_registry.yml playbook only sets up the docker registry. Once the registry is up, run site.yml for the complete ERE deployment in air-gapped mode.

> ansible-playbook -i hosts site.yml --ask-vault-pass

For more information, see the "ERE deployment through air-gapped environment" section of the README file in the Git repository: https://github.com/HewlettPackard/hpe-solutions-hpecp/blob/master/LTI/Lite_Touch_Installation/README.md

For detailed steps, see the HPE Ezmeral Runtime Enterprise on HPE DL360 & DL380 Deployment guide, available at https://hewlettpackard.github.io/hpe-solutions-hpecp/5.6-DL-INTEL/