# Generating Kubernetes manifests and ignition files

The manifests and ignition files define the master node and worker node configuration and are key components of the RHOCP 4 installation.

Prerequisites:

Before creating the manifest files and ignition files, it is necessary to download the RHOCP 4 packages.

- Download the required packages on the installer VM with the following playbook:

$ ansible-playbook -i hosts playbooks/download_ocp_package.yml

To generate Kubernetes manifests and ignition files:

- Generate the Kubernetes manifest files with the following playbook:

$ ansible-playbook -i hosts playbooks/generate_manifest.yml

- Find the ignition files in the following path:

/opt/hpe-solutions-openshift/DL-LTI-Openshift/playbooks/roles/generate_ignition_files/ignitions/

- When the ignition files are generated, export the kubeconfig file with the following command:

$ export KUBECONFIG=/opt/hpe-solutions-openshift/DL-LTI-Openshift/playbooks/roles/generate_ignition_files/ignitions/auth/kubeconfig
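Before exporting KUBECONFIG, it can help to confirm that the playbook actually produced the expected files. The following is a minimal sketch, not part of the solution playbooks. The function name `check_ignition_dir` is hypothetical, and the file names (bootstrap.ign, master.ign, worker.ign, auth/kubeconfig) are assumed to be the usual openshift-install outputs.

```shell
# Sketch: verify that an ignition directory contains the expected files.
# Assumption: the file names below are the usual openshift-install outputs.
check_ignition_dir() {
    local dir="$1" missing=0 f
    for f in bootstrap.ign master.ign worker.ign auth/kubeconfig; do
        # -s is true only when the file exists and is not empty
        if [ ! -s "$dir/$f" ]; then
            echo "missing or empty: $dir/$f" >&2
            missing=1
        fi
    done
    return "$missing"
}
```

For example, `check_ignition_dir /opt/hpe-solutions-openshift/DL-LTI-Openshift/playbooks/roles/generate_ignition_files/ignitions` returns a non-zero status and names any file that is absent.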

# Installing and configuring iPXE server

To install and configure an iPXE server:

- Change to the following directory on the Ansible engine:

$ cd /opt/hpe-solutions-openshift/DL-LTI-Openshift/

- Enter the values for your setup in the input.yaml file.

- Execute the following playbook:

$ ansible-playbook -i hosts playbooks/deploy_ipxe_ocp.yml

# Creating bootstrap and master VM on KVM nodes

To create bootstrap and RHOCP master VMs on your RHEL 8 Kernel-based Virtual Machine (KVM) host:

- Run the following virt-install command:

$ virt-install -n boot --description "boot" --ram=16384 --vcpus=10 --os-type=Linux --os-variant=rhel8.0 --noreboot --disk pool=home,bus=virtio,size=100 --serial pty --console pty --pxe --network bridge=bridge0,mac=<mac address>
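Because the command takes the MAC address as a free-form placeholder, a small check before substituting it can catch typos early. This is a sketch only. The helper name `is_valid_mac` is hypothetical, and it assumes the colon-separated six-octet notation.

```shell
# Sketch: succeed only for a colon-separated, six-octet MAC address.
is_valid_mac() {
    printf '%s\n' "$1" | grep -Eq '^([0-9A-Fa-f]{2}:){5}[0-9A-Fa-f]{2}$'
}
```

A possible use is `is_valid_mac "$MAC" || echo "invalid MAC: $MAC" >&2` before passing `mac=$MAC` to the `--network` option.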

# Waiting for the bootstrap process to complete

The bootstrap process for RHOCP begins after the cluster nodes first boot into the persistent RHCOS environment that has been installed to disk. The configuration information provided through the ignition config files is used to initialize the bootstrap process and install RHOCP on the machines. You must wait for the bootstrap process to complete.

- Log in to the installer VM as the root user.

- Execute the following command to bootstrap the nodes:

$ openshift-install wait-for bootstrap-complete --log-level=debug

The following output is displayed:

DEBUG OpenShift Installer v4.10

DEBUG Built from commit 425e4ff0037487e32571258640b39f56d5ee5572

INFO Waiting up to 30m0s for the Kubernetes API at https://api.ocp.pxelocal.local:6443...

INFO API v1.23.3+e419edf up

INFO Waiting up to 30m0s for bootstrapping to complete...

DEBUG Bootstrap status: complete

INFO It is now safe to remove the bootstrap resources

NOTE

Shut down or remove the bootstrap node after the two preceding steps are complete.

- Provide the PV storage for the registry. Set the image registry storage to an empty directory with the following command:

$ oc patch configs.imageregistry.operator.openshift.io cluster --type merge --patch '{"spec":{"storage":{"emptyDir":{}}}}'

- Complete the RHOCP 4 cluster installation with the following command:

$ openshift-install wait-for install-complete --log-level=debug

The following output is displayed:

DEBUG OpenShift Installer v4.10

DEBUG Built from commit 6ed04f65b0f6a1e11f10afe658465ba8195ac459

INFO Waiting up to 30m0s for the cluster at https://api.rrocp.pxelocal.local:6443 to initialize...

DEBUG Still waiting for the cluster to initialize: Working towards 4.10: 99% complete

DEBUG Still waiting for the cluster to initialize: Working towards 4.10: 99% complete, waiting on authentication, console,image-registry

DEBUG Still waiting for the cluster to initialize: Working towards 4.10: 99% complete

DEBUG Still waiting for the cluster to initialize: Working towards 4.10: 100% complete, waiting on image-registry

DEBUG Still waiting for the cluster to initialize: Cluster operator image-registry is still updating

DEBUG Still waiting for the cluster to initialize: Cluster operator image-registry is still updating

DEBUG Cluster is initialized

INFO Waiting up to 10m0s for the openshift-console route to be created...

DEBUG Route found in openshift-console namespace: console

DEBUG Route found in openshift-console namespace: downloads

DEBUG OpenShift console route is created

INFO Install complete!

INFO Access the OpenShift web-console here: https://console-openshift-console.apps.ocp.isv.local

INFO Login to the console with user: kubeadmin, password: a6hKv-okLUA-Q9p3q-UXLc3

The RHOCP cluster is successfully installed.
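If the installer output shown above is saved to a file, the two facts that matter most (bootstrap completion and the console URL) can be pulled out with standard tools. The sketch below assumes the output was captured in the same plain-text form as displayed here. The function names and the log file are hypothetical.

```shell
# Sketch: inspect saved openshift-install console output.
# Assumption: $1 is a file holding the output in the form shown in this guide.

# Succeeds if the bootstrap-complete message is present.
bootstrap_complete() {
    grep -q 'It is now safe to remove the bootstrap resources' "$1"
}

# Prints the web console URL reported at the end of the installation.
console_url() {
    sed -n 's|.*Access the OpenShift web-console here: \(https://[^ ]*\).*|\1|p' "$1" | tail -n 1
}
```

For example, `bootstrap_complete bootstrap.log && echo "bootstrap node can be removed"`, where bootstrap.log is a file name chosen for illustration.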

# Running Red Hat OpenShift Container Platform Console

Prerequisites:

- The RHOCP cluster installation must be complete

NOTE

The installer machine provides the Red Hat OpenShift Container Platform Console link and login details when the RHOCP cluster installation is complete.

To access the Red Hat OpenShift Container Platform Console:

- Open a web browser and enter the following link:

https://console-openshift-console.apps.ocp.isv.local

- Log in to the Red Hat OpenShift Container Platform Console with the following credentials:

- Username : kubeadmin

- Password : <Password>

NOTE

If the password is lost or forgotten, retrieve it from the kubeadmin-password file at /opt/hpe-solutions-openshift/DL-LTI-Openshift/playbooks/roles/generate_ignition_files/ignitions/auth/kubeadmin-password on the installer machine.
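Reading the stored password can be scripted so that it never has to be copied by hand. The following is a minimal sketch. The function name `kubeadmin_password` is hypothetical, and it assumes the auth directory contains a file named kubeadmin-password, as described in the note above.

```shell
# Sketch: print the kubeadmin password stored under the given auth directory.
kubeadmin_password() {
    local file="$1/kubeadmin-password"
    [ -r "$file" ] || { echo "cannot read $file" >&2; return 1; }
    # Strip any trailing newline so the value can be used directly in a command
    tr -d '\n' < "$file"
}
```

One possible use is `oc login -u kubeadmin -p "$(kubeadmin_password <auth directory>)" <API URL>`, with both placeholders replaced by the values for your cluster.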

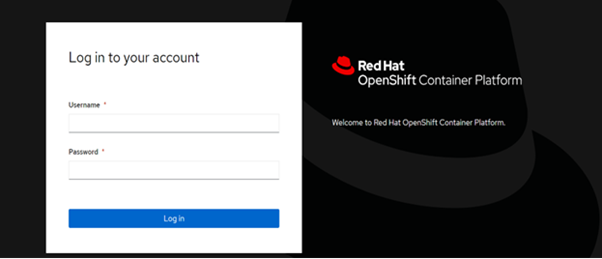

The following figure shows the Red Hat OpenShift Container Platform Console after successful deployment:

FIGURE 25 Red Hat OpenShift Container Platform Console login screen

# Adding RHEL 8.6 worker nodes to RHOCP cluster

## Creating RHEL 7.9 installer machine on head nodes

To create a RHEL 7.9 VM for adding RHEL 8.6 workers to the RHOCP cluster:

- Run the following command on your RHEL 8 KVM host using the virt-install utility:

$ virt-install --name Installer --memory 2048 --vcpus 2 --disk size=80 --os-variant rhel7.0 --cdrom /home/username/Downloads/RHEL7.iso

## Setting up RHEL 7.9 installer machine

To set up the installer machine running RHEL 7.9 and run the playbook:

- Log in to the RHEL 7.9 installer machine and register the host with Red Hat using the following command:

$ subscription-manager register

- Generate a list of available subscriptions using the following command:

$ subscription-manager list --available --matches '*OpenShift*'

- Find the pool ID for the RHOCP subscription in the output of the previous command and attach it with the following command:

$ subscription-manager attach --pool=<pool_id>

- Enable the repositories required by RHOCP 4.10 with the following command:

$ subscription-manager repos \
    --enable="rhel-7-server-rpms" \
    --enable="rhel-7-server-extras-rpms" \
    --enable="rhel-7-server-ansible-2.9-rpms" \
    --enable="rhel-7-server-ose-4.10-rpms"

- Install the required packages including openshift-ansible.

$ yum install openshift-ansible openshift-clients jq
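The pool ID step above involves reading an ID out of the subscription listing by eye. As a sketch, the first ID can be extracted with awk. This assumes the listing prints each pool on a line that starts with `Pool ID:`, and the function name `first_pool_id` is hypothetical.

```shell
# Sketch: read a subscription listing on stdin and print the first pool ID.
# Assumption: pool lines have the form "Pool ID:   <id>".
first_pool_id() {
    awk -F': *' '/^Pool ID:/ { print $2; exit }'
}
```

For example, `subscription-manager list --available --matches '*OpenShift*' | first_pool_id` prints one ID that can then be passed to `subscription-manager attach --pool=`. If several subscriptions match, check that the first one is the intended pool.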

## Setting up RHEL worker node

Prerequisites:

- The RHEL 8.6 OS must be deployed on the worker nodes

To set up the RHEL worker nodes, perform the following steps on each worker node:

- Log in to the RHEL host and register it with Red Hat using the following command:

$ subscription-manager register

- Generate a list of available subscriptions using the following command:

$ subscription-manager list --available --matches '*OpenShift*'

- Find the pool ID for the RHOCP subscription in the output of the previous command and attach it with the following command:

$ subscription-manager attach --pool=<pool_id>

- Enable the repositories required by RHOCP 4.10 with the following command:

$ subscription-manager repos \
    --enable="rhel-8-for-x86_64-baseos-rpms" \
    --enable="rhel-8-for-x86_64-appstream-rpms" \
    --enable="rhocp-4.10-for-rhel-8-x86_64-rpms" \
    --enable="fast-datapath-for-rhel-8-x86_64-rpms"

- Stop and disable firewalld on the host.

$ systemctl disable --now firewalld.service
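Since the scale-up depends on all four repositories being enabled on every worker, a quick comparison against the enabled list can save a failed playbook run. The sketch below assumes the enabled repositories are listed on lines that start with `Repo ID:`. The function name `missing_repos` is hypothetical.

```shell
# Sketch: read the list of enabled repositories on stdin and print each
# required repository (given as arguments) that is not enabled.
# Assumption: enabled repositories appear on lines of the form "Repo ID:   <id>".
missing_repos() {
    local enabled repo
    enabled=$(awk -F': *' '/^Repo ID:/ { print $2 }')
    for repo in "$@"; do
        # -F fixed string, -x whole-line match, -q quiet
        printf '%s\n' "$enabled" | grep -Fxq "$repo" || printf '%s\n' "$repo"
    done
}
```

For example, `subscription-manager repos --list-enabled | missing_repos rhel-8-for-x86_64-baseos-rpms rhel-8-for-x86_64-appstream-rpms rhocp-4.10-for-rhel-8-x86_64-rpms fast-datapath-for-rhel-8-x86_64-rpms` prints nothing when all four are enabled.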

## Adding RHEL worker node to RHOCP cluster using RHEL 7.9 installer machine

This section covers the steps to add worker nodes that use Red Hat Enterprise Linux 8 as the operating system to an RHOCP 4.10 cluster.

Prerequisites:

- The required packages must be installed, and the necessary configuration must be performed on the RHEL 7.9 installer machine

- RHEL hosts must be prepared for installation

To add RHEL 8 worker nodes manually to an existing cluster using the RHEL 7.9 installer machine:

- Create an Ansible inventory file at /<path>/inventory/hosts. This inventory file defines your worker nodes and the required variables:

[all:vars]
# Specify the username that runs the Ansible tasks on the remote compute machines.
# ansible_user=root
# If you do not specify root for the ansible_user, you must set ansible_become to True and assign the user sudo permissions.
ansible_become=True
# Specify the path and file name of the kubeconfig file for your cluster.
openshift_kubeconfig_path="~/.kube/config"
# List each RHEL machine to add to your cluster. You must provide the fully-qualified domain name for each host. This name is the hostname that the cluster uses to access the machine, so set the correct public or private name to access the machine.
[new_workers]
worker1.ocp.isv.local
worker2.ocp.isv.local
worker3.ocp.isv.local

- Navigate to the Ansible playbook directory:

$ cd /usr/share/ansible/openshift-ansible

- Run the following playbook to add RHEL 8 worker nodes to the existing cluster:

$ ansible-playbook -i /<path>/inventory/hosts playbooks/scaleup.yml

For <path>, specify the location of the Ansible inventory file that you created.

- Verify that the worker nodes are added to the cluster using the following command:

$ oc get nodes

The following output is displayed:

NAME                    STATUS   ROLES           AGE   VERSION
master0.ocp.isv.local   Ready    master,worker   13d   v1.23.3+e419edf
master1.ocp.isv.local   Ready    master,worker   13d   v1.23.3+e419edf
master2.ocp.isv.local   Ready    master,worker   13d   v1.23.3+e419edf
worker1.ocp.isv.local   Ready    worker          23h   v1.23.5+3afdacb
worker2.ocp.isv.local   Ready    worker          23h   v1.23.5+3afdacb
worker3.ocp.isv.local   Ready    worker          23h   v1.23.5+3afdacb
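When the worker list changes often, the inventory file from the first step of this procedure can be generated instead of edited by hand. The following is a sketch under the assumption that only the two variables shown in the example inventory are needed. The function name `make_inventory` is hypothetical.

```shell
# Sketch: print an inventory with the variables from the example inventory
# and one [new_workers] entry per hostname argument.
make_inventory() {
    cat <<'EOF'
[all:vars]
ansible_become=True
openshift_kubeconfig_path="~/.kube/config"

[new_workers]
EOF
    printf '%s\n' "$@"
}
```

For example, `make_inventory worker1.ocp.isv.local worker2.ocp.isv.local > /<path>/inventory/hosts` writes a file that can be passed to the scaleup.yml playbook with `-i`.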

Once the worker nodes are added to the cluster, set the mastersSchedulable parameter to false so that pods are not scheduled on the master nodes.

- Edit the schedulers.config.openshift.io resource:

$ oc edit schedulers.config.openshift.io cluster

- Configure the mastersSchedulable parameter.

apiVersion: config.openshift.io/v1
kind: Scheduler
metadata:
  creationTimestamp: "2019-09-10T03:04:05Z"
  generation: 1
  name: cluster
  resourceVersion: "433"
  selfLink: /apis/config.openshift.io/v1/schedulers/cluster
  uid: a636d30a-d377-11e9-88d4-0a60097bee62
spec:
  mastersSchedulable: false
  policy:
    name: ""
status: {}

NOTE

Set mastersSchedulable to true to allow pods to be scheduled on the control plane nodes, or to false to prevent it.

- Save the file to apply the changes.

- Verify that the master nodes no longer carry the worker role:

$ oc get nodes

The following output is displayed:

NAME                    STATUS   ROLES    AGE   VERSION
master0.ocp.isv.local   Ready    master   13d   v1.23.3+e419edf
master1.ocp.isv.local   Ready    master   13d   v1.23.3+e419edf
master2.ocp.isv.local   Ready    master   13d   v1.23.3+e419edf
worker1.ocp.isv.local   Ready    worker   23h   v1.23.5+3afdacb
worker2.ocp.isv.local   Ready    worker   23h   v1.23.5+3afdacb
worker3.ocp.isv.local   Ready    worker   23h   v1.23.5+3afdacb
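The check on this output can also be automated. The sketch below reads the `oc get nodes` listing and flags any node that is not Ready, or any master that still shows the worker role. It assumes the default column order (NAME, STATUS, ROLES) with a header line. The function name `check_nodes` is hypothetical.

```shell
# Sketch: read `oc get nodes` output on stdin, print a line for each problem,
# and return non-zero if any problem is found.
# Assumption: a header line followed by NAME STATUS ROLES ... columns.
check_nodes() {
    awk 'NR > 1 && NF >= 3 {
             if ($2 != "Ready") { print $1 ": status " $2; bad = 1 }
             if ($3 ~ /master/ && $3 ~ /worker/) { print $1 ": master still has the worker role"; bad = 1 }
         }
         END { exit bad + 0 }'
}
```

For example, `oc get nodes | check_nodes` prints nothing and succeeds for the output shown above. A node reported as Ready,SchedulingDisabled is also flagged, because its status is not exactly Ready.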

NOTE

To add more worker nodes, update the worker details in HAProxy and BIND DNS on head nodes and then add RHEL 8.6 worker nodes to the RHOCP cluster.