SustainDC

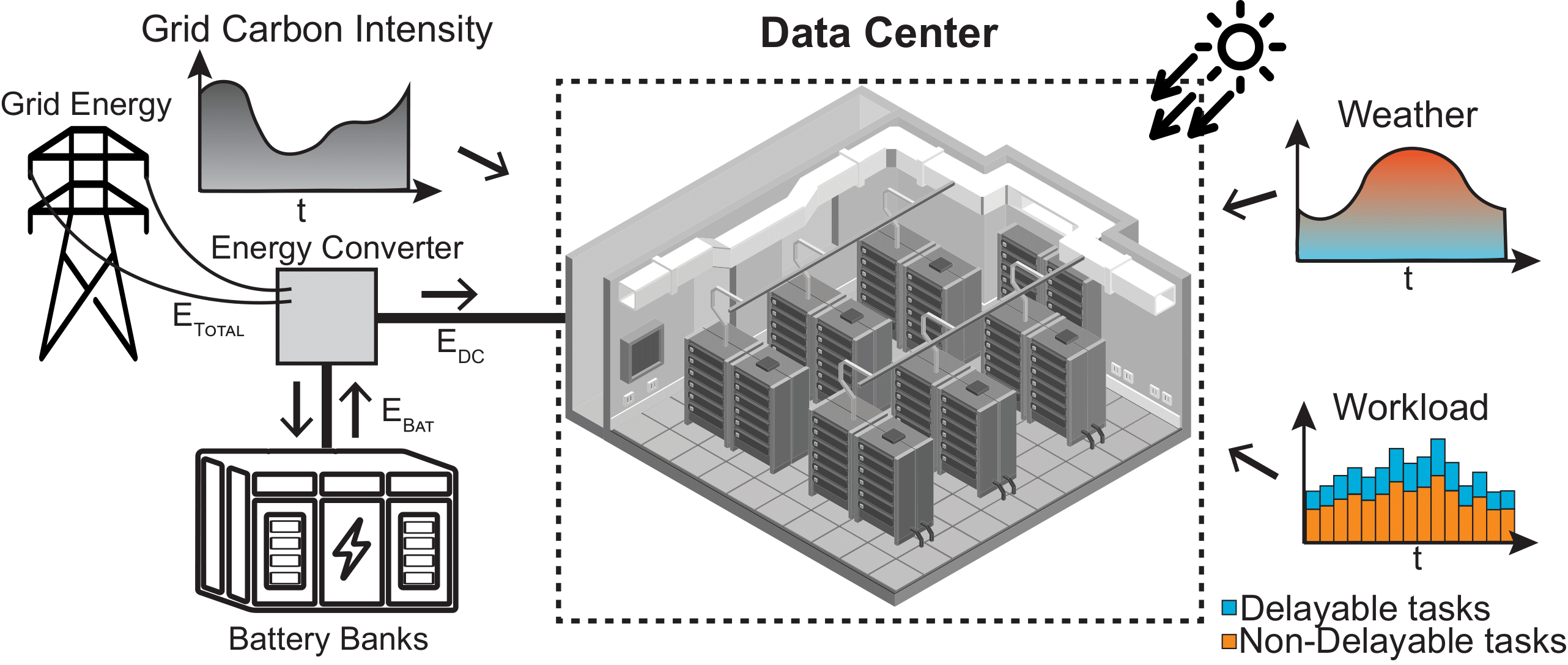

SustainDC is a set of Python environments for benchmarking multi-agent reinforcement learning (MARL) algorithms in data centers (DC). It focuses on sustainable DC operations, including workload scheduling, cooling optimization, and auxiliary battery management.

This page documents the GitHub repository accompanying the paper “SustainDC: Benchmarking for Sustainable Data Center Control”.

Disclaimer: This work builds on and extends our previous research. The original code, referred to as DCRL-Green, can be found in the legacy branch of this repository. The current repository, SustainDC, is a more advanced iteration of DCRL-Green with enhanced features and improved benchmarking capabilities. The repository name remains dc-rl to maintain continuity with that earlier work.

SustainDC follows the OpenAI Gym standard and supports modeling and control of three types of problems:

Carbon-aware flexible computational load shifting

Data center HVAC cooling energy optimization

Carbon-aware battery auxiliary supply
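To make the first of these problems concrete, the sketch below shows a toy version of carbon-aware load shifting: flexible work is placed greedily in the hours with the lowest grid carbon intensity, subject to a per-hour capacity limit. It is only an illustration of the idea, not SustainDC's environment or API. The function names, the greedy rule, and all numbers are assumptions made up for this example.

```python
def shift_flexible_load(base_load, flexible_load, carbon_intensity, capacity):
    """Greedily place flexible load in the lowest-carbon hours.

    Hypothetical helper for illustration only; not part of SustainDC.
    base_load and carbon_intensity are per-hour lists of equal length.
    """
    if len(base_load) != len(carbon_intensity):
        raise ValueError("base_load and carbon_intensity must have equal length")

    schedule = list(base_load)
    remaining = flexible_load

    # Visit hours from the cleanest grid to the dirtiest.
    for hour in sorted(range(len(schedule)), key=lambda h: carbon_intensity[h]):
        headroom = capacity - schedule[hour]
        placed = min(headroom, remaining)
        if placed > 0:
            schedule[hour] += placed
            remaining -= placed
        if remaining <= 0:
            break

    if remaining > 0:
        raise ValueError("not enough capacity to place all flexible load")
    return schedule


def emissions(load, carbon_intensity):
    """Total emissions as the sum of load times carbon intensity per hour."""
    return sum(l * c for l, c in zip(load, carbon_intensity))


# Made-up data: four hours, carbon intensity in gCO2/kWh, load in kWh.
carbon_intensity = [400, 300, 200, 500]
base_load = [2, 2, 2, 2]

shifted = shift_flexible_load(base_load, flexible_load=4,
                              carbon_intensity=carbon_intensity, capacity=4)
uniform = [b + 1 for b in base_load]  # baseline: spread flexible load evenly

print("shifted schedule:", shifted)
print("shifted emissions:", emissions(shifted, carbon_intensity))
print("uniform emissions:", emissions(uniform, carbon_intensity))
```

A learned agent would replace the greedy rule with a policy that also accounts for deadlines, forecasts, and the effect of load on cooling demand, which this sketch ignores.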

Demo of SustainDC

A demo of SustainDC is given in the Google Colab notebook below.

Features of SustainDC

Highly Customizable and Scalable Environments: Allows users to define and modify various aspects of DC operations, including server configurations, cooling systems, and workload traces.

Multi-Agent Support: Enables testing of MARL controllers with both homogeneous and heterogeneous agents, facilitating the study of collaborative and competitive DC management strategies.

Gymnasium Integration: Environments are wrapped in the Gymnasium Env class, making it easy to benchmark different control strategies using standard reinforcement learning libraries.

Realistic External Variables: Incorporates real-world data such as weather conditions, carbon intensity, and workload traces to simulate the dynamic and complex nature of DC operations.

Collaborative Reward Mechanisms: Supports the design of custom reward structures to promote collaborative optimization across different DC components.

Benchmarking Suite: Includes scripts and tools for evaluating the performance of various MARL algorithms, providing insights into their effectiveness in reducing energy consumption and carbon emissions.
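As an illustration of what a collaborative reward structure can look like, the sketch below blends each agent's own reward with the mean reward of the other agents. This is one common way to encourage cooperation and is not necessarily the formulation SustainDC uses. The function name, the `alpha` weight, the agent labels, and the reward values are all assumptions for this example.

```python
def collaborative_rewards(individual, alpha=0.8):
    """Blend each agent's own reward with the mean reward of the others.

    Hypothetical helper for illustration only; not part of SustainDC.
    alpha = 1.0 gives fully independent rewards; lower values give more
    weight to how the other agents are doing.
    """
    if len(individual) < 2:
        raise ValueError("need at least two agents")
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")

    total = sum(individual.values())
    n_others = len(individual) - 1

    blended = {}
    for name, own in individual.items():
        others_mean = (total - own) / n_others
        blended[name] = alpha * own + (1.0 - alpha) * others_mean
    return blended


# Made-up per-agent rewards (for example, negative energy or carbon cost).
individual = {"load_shifting": -1.0, "cooling": -3.0, "battery": -2.0}

rewards = collaborative_rewards(individual, alpha=0.5)
for name, value in rewards.items():
    print(f"{name}: {value:.2f}")
```

With `alpha=1.0` the function returns the individual rewards unchanged, which gives a simple baseline for comparing independent and collaborative training.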