OpenShift Virtualization

Install and Configure OpenShift Virtualization

Introduction

Red Hat OpenShift Virtualization is a core feature of Red Hat OpenShift Container Platform that runs virtual machines alongside containers and manages both through a unified interface. This lets organizations deploy modern and traditional applications, including virtual machines, containers, and serverless functions, on a single platform. OpenShift Virtualization is built on the "container-native virtualization" concept of the KubeVirt project: it uses the RHEL KVM hypervisor to run virtual machines, and KubeVirt to manage and orchestrate them as Kubernetes resources. As a result, virtual machines and containers coexist in the same Kubernetes environment, giving a single, consistent approach to workload management.

OpenShift Virtualization adds new objects into your OpenShift Container Platform cluster via Kubernetes custom resources to enable virtualization tasks. These tasks include:

Creating and managing Linux and Windows virtual machines

Connecting to virtual machines through a variety of consoles and CLI tools

Importing and cloning existing virtual machines

Managing network interface controllers and storage disks attached to virtual machines

Live migrating virtual machines between nodes

An enhanced web console provides a graphical portal to manage these virtualized resources alongside the OpenShift Container Platform cluster containers and infrastructure.

OpenShift Virtualization is tested with OpenShift Data Foundation (ODF) and Alletra MP Storage.

OpenShift Virtualization can be used with either the OVN-Kubernetes or the OpenShift SDN default Container Network Interface (CNI) network provider.

Enabling OpenShift Virtualization

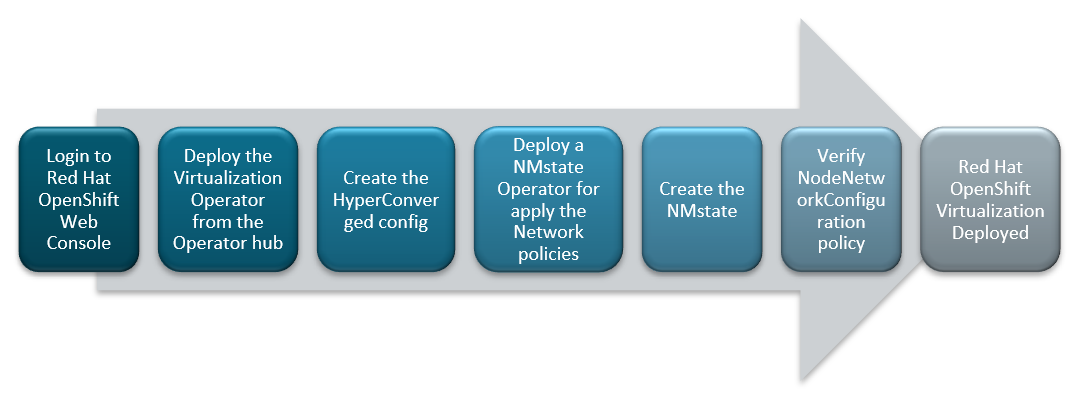

FIGURE 32. Red Hat OpenShift Virtualization deployment flow

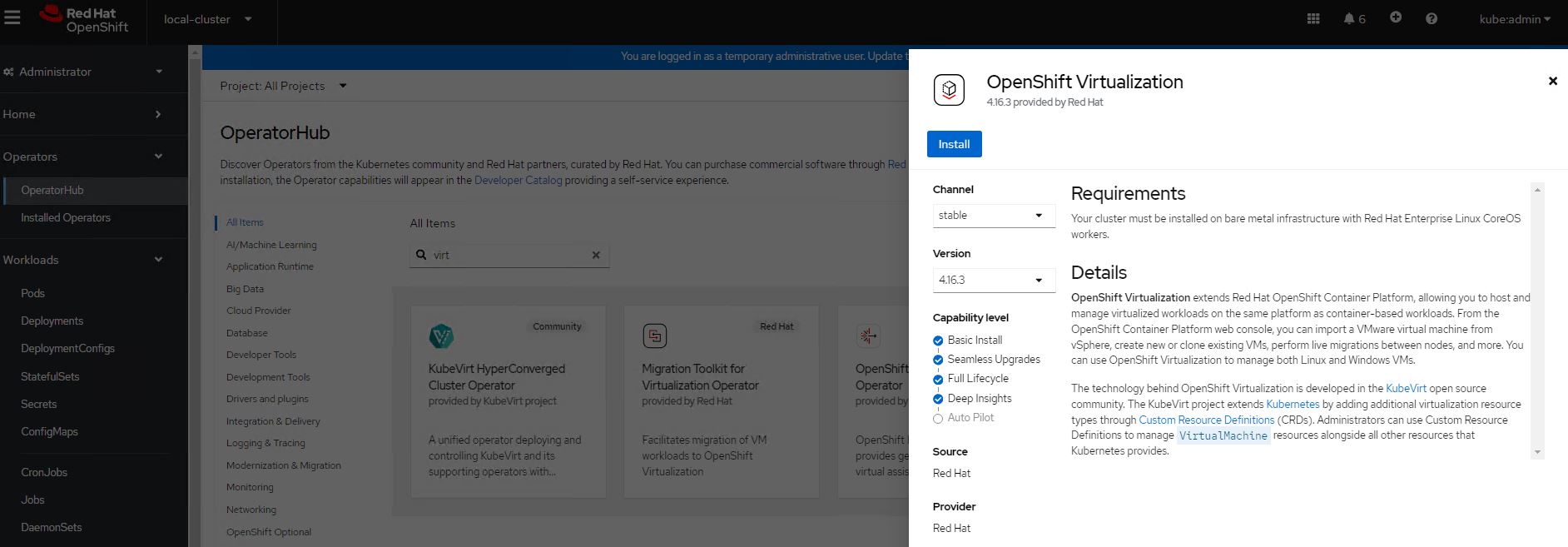

Installing OpenShift Virtualization Operator from OperatorHub

Log into the OpenShift Container Platform web console and navigate to Operators → OperatorHub

Type OpenShift Virtualization in the filter field and select the OpenShift Virtualization tile.

FIGURE 33. OpenShift Virtualization in OperatorHub

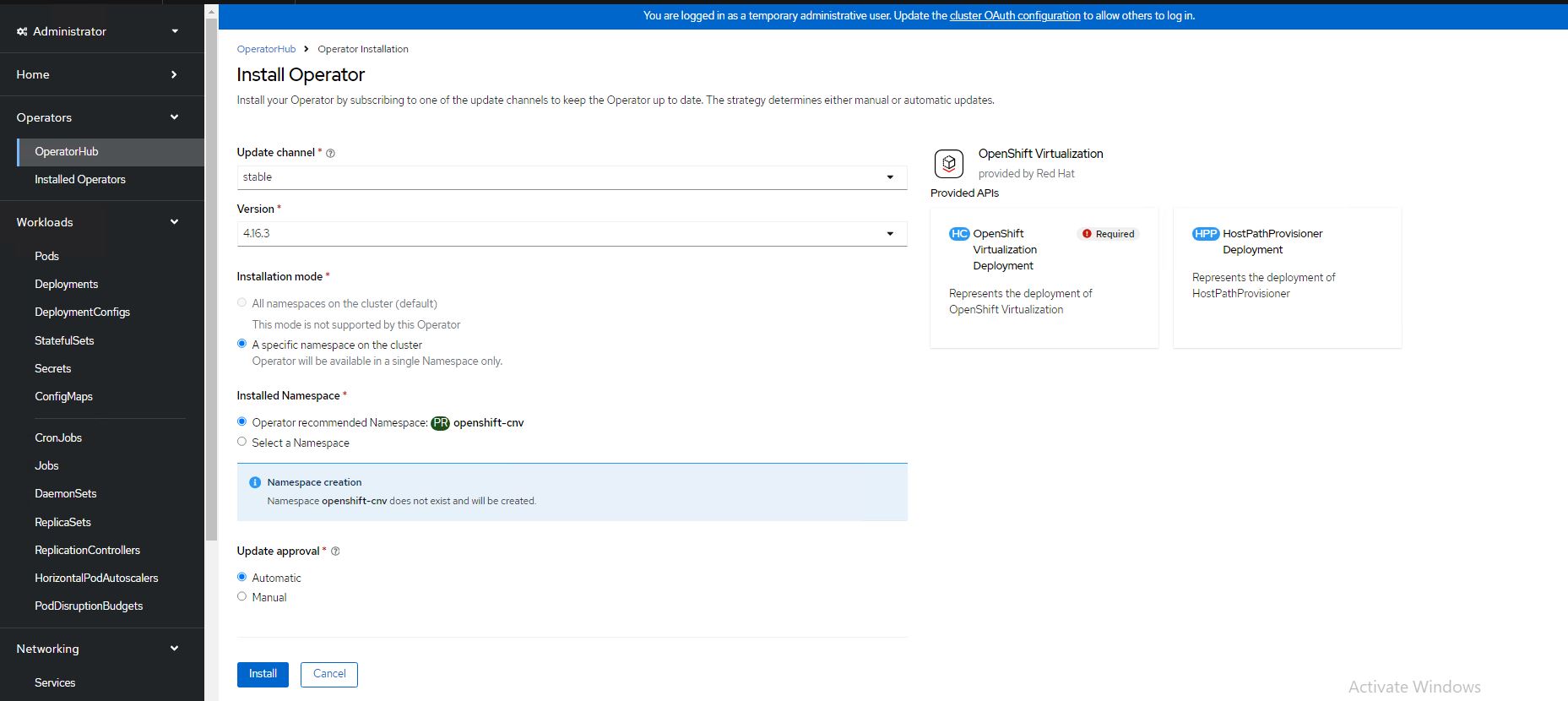

- Click Install and install the Operator in the "openshift-cnv" namespace.

FIGURE 34. Inputs for the OpenShift Virtualization operator

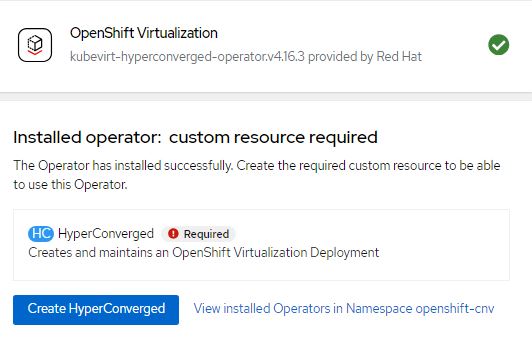

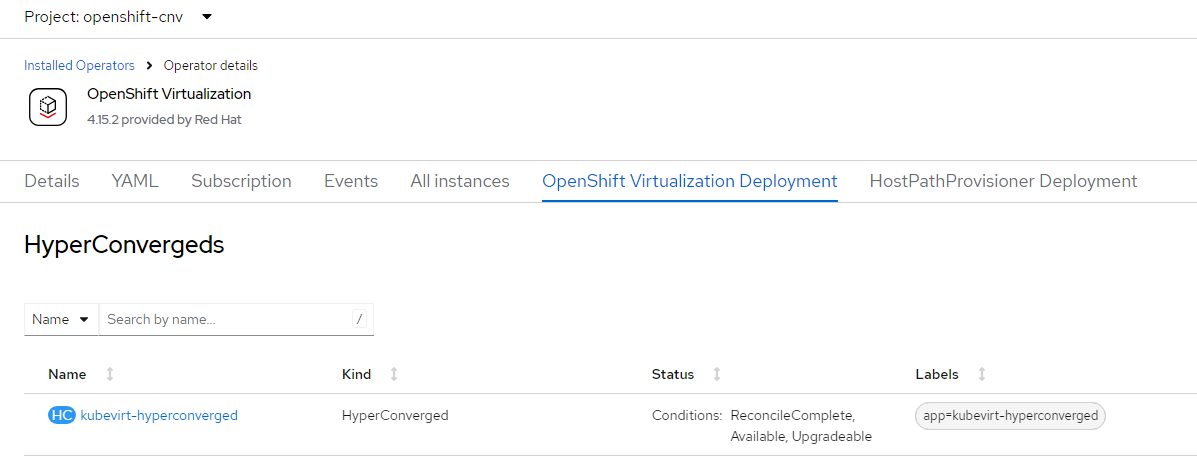

- Once the OpenShift Virtualization Operator is installed, create the HyperConverged custom resource.

FIGURE 35. Creation of HyperConverged in OpenShift Virtualization operator

After the Operator is deployed and the HyperConverged custom resource is created, the Virtualization menu is enabled in the web console.
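The same installation can also be done from the CLI. The following manifests are a sketch based on Red Hat's documented CLI procedure; the OperatorGroup and Subscription names and the `stable` channel are assumptions, so confirm the channel offered by your cluster's OperatorHub before applying them with `oc apply -f <file-name>.yaml`.

```yaml
# Sketch: CLI equivalent of installing OpenShift Virtualization from OperatorHub.
# The OperatorGroup/Subscription names and the "stable" channel are assumptions.
apiVersion: v1
kind: Namespace
metadata:
  name: openshift-cnv
---
apiVersion: operators.coreos.com/v1
kind: OperatorGroup
metadata:
  name: kubevirt-hyperconverged-group
  namespace: openshift-cnv
spec:
  targetNamespaces:
    - openshift-cnv
---
apiVersion: operators.coreos.com/v1alpha1
kind: Subscription
metadata:
  name: hco-operatorhub
  namespace: openshift-cnv
spec:
  source: redhat-operators
  sourceNamespace: openshift-marketplace
  name: kubevirt-hyperconverged    # package name of the OpenShift Virtualization Operator
  channel: "stable"
---
# Keep this object in a separate file and apply it only after the Operator's
# ClusterServiceVersion reports Succeeded; the HyperConverged CRD does not
# exist before the Operator is installed.
apiVersion: hco.kubevirt.io/v1beta1
kind: HyperConverged
metadata:
  name: kubevirt-hyperconverged
  namespace: openshift-cnv
spec: {}
```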

Network configuration

After OpenShift Virtualization is deployed, virtual machines use the internal pod network as their default network. For additional networks, deploy the Kubernetes NMState Operator and configure a Linux bridge network for external VM access and live migration.

Administrators can also install the SR-IOV Network Operator to manage SR-IOV network devices, and the MetalLB Operator to manage the lifecycle of MetalLB load balancers.

Configuring a Linux bridge Network

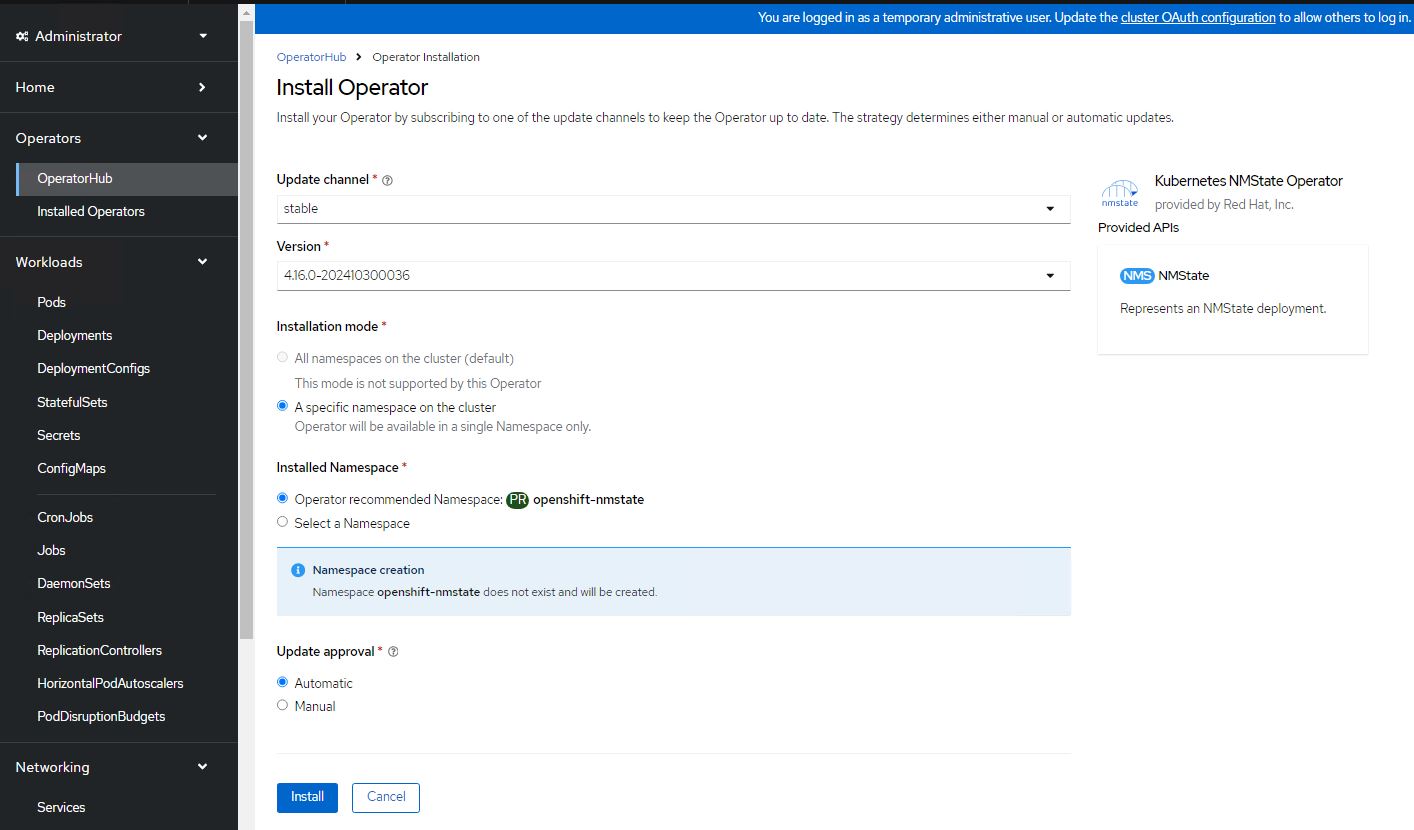

To install the NMState Operator, navigate to Operators → OperatorHub in the web console.

Type NMState, select the Kubernetes NMState Operator tile, and install the Operator.

FIGURE 36. Installation of NMState in OperatorHub

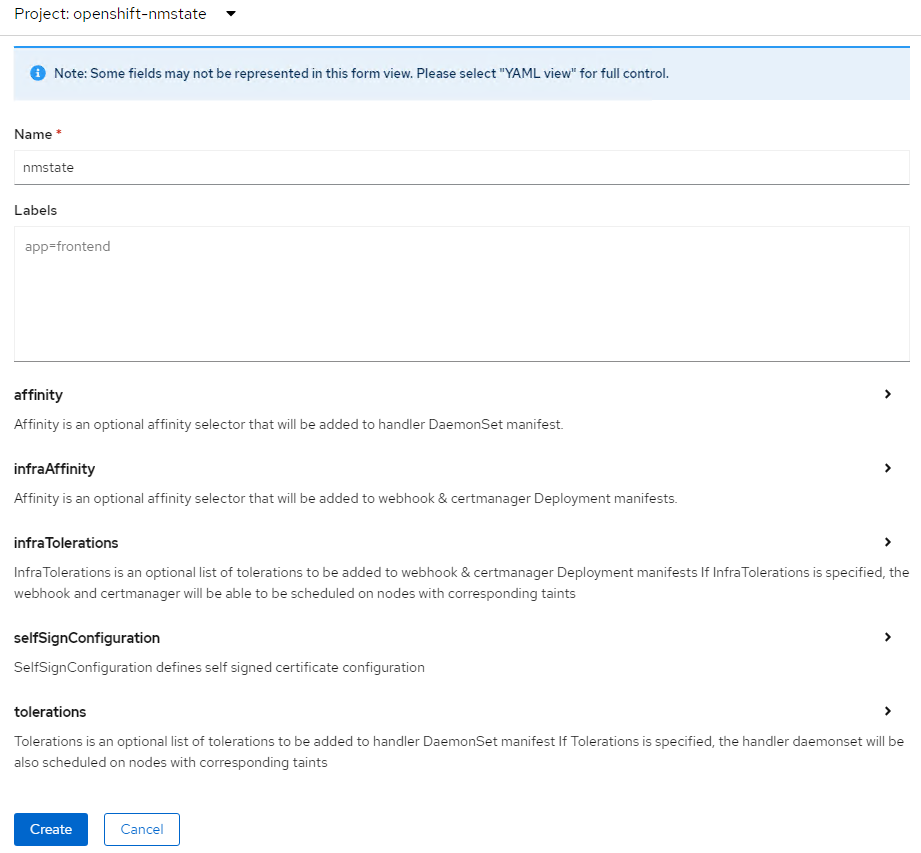

- Once the NMState Operator is installed, create an NMState instance named "nmstate".

FIGURE 37. Inputs for NMState in operator
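The NMState instance created in the console can also be created from the CLI. A minimal sketch, assuming the standard `nmstate.io/v1` API installed by the Operator; the instance is cluster-scoped and is expected to be named `nmstate`.

```yaml
# Sketch: CLI equivalent of creating the NMState instance in the console.
# Apply with: oc apply -f <file-name>.yaml
apiVersion: nmstate.io/v1
kind: NMState
metadata:
  name: nmstate    # the Operator reconciles the instance with this name
```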

Creating a Linux bridge NNCP

Create a NodeNetworkConfigurationPolicy (NNCP) manifest that defines a Linux bridge on the network interface card (enp1s0), and apply it:

```yaml
apiVersion: nmstate.io/v1
kind: NodeNetworkConfigurationPolicy
metadata:
  name: br1-policy
spec:
  desiredState:
    interfaces:
      - name: bridge1
        type: linux-bridge
        state: up
        ipv4:
          dhcp: true
          enabled: true
        bridge:
          options:
            stp:
              enabled: false
          port:
            - name: enp1s0 # NIC/Bond
```

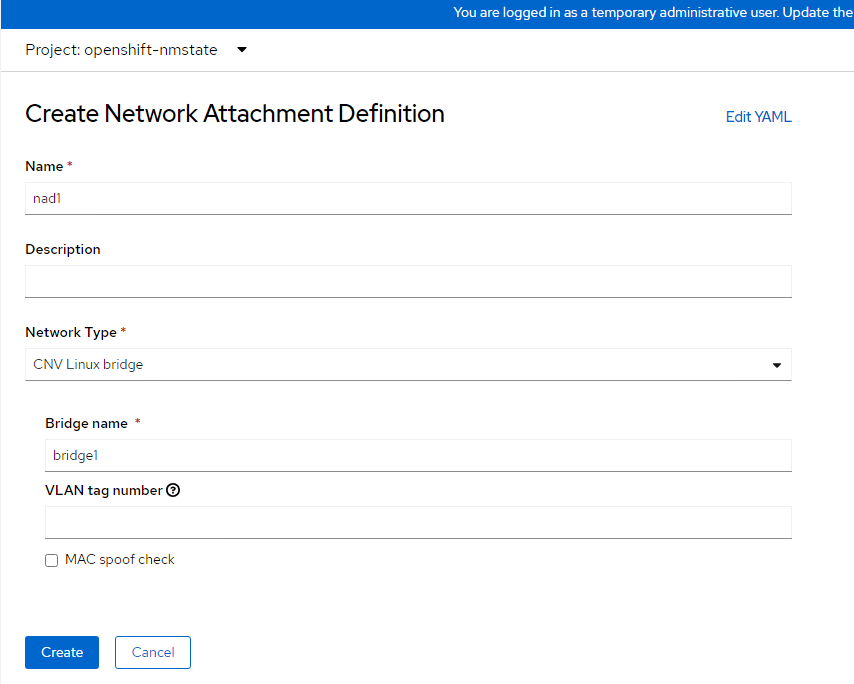

Creating a Linux bridge NAD

Log in to the OpenShift Container Platform web console and click Networking → NetworkAttachmentDefinitions

Click Create Network Attachment Definition and provide the required details.

Click the Network Type list and select CNV Linux bridge

Enter the name of the bridge in the Bridge Name field. This is the interface name defined in the NodeNetworkConfigurationPolicy created earlier (for example, bridge1).

Click Create

FIGURE 38. Creation of NAD
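For reference, a NAD equivalent to the console steps above might look like the following sketch. The NAD name `bridge-network` and the `default` namespace are hypothetical; create the NAD in the namespace of the VMs that will use it. The `cnv-bridge` plugin type corresponds to the "CNV Linux bridge" network type in the console, but newer releases may document `bridge` instead, so check the documentation for your version.

```yaml
# Sketch: Linux bridge NAD backed by bridge1 from the NNCP above.
# Name and namespace are hypothetical.
apiVersion: "k8s.cni.cncf.io/v1"
kind: NetworkAttachmentDefinition
metadata:
  name: bridge-network
  namespace: default
  annotations:
    # Optional: schedule VMs only onto nodes where bridge1 exists.
    k8s.v1.cni.cncf.io/resourceName: bridge.network.kubevirt.io/bridge1
spec:
  config: '{
    "cniVersion": "0.3.1",
    "name": "bridge-network",
    "type": "cnv-bridge",
    "bridge": "bridge1",
    "macspoofchk": true
  }'
```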

Creating a dedicated network for live migration

- To create a dedicated live migration network, create an additional NAD in the openshift-cnv namespace, for example:

```yaml
apiVersion: "k8s.cni.cncf.io/v1"
kind: NetworkAttachmentDefinition
metadata:
  name: migration-network
  namespace: openshift-cnv
spec:
  config: '{
    "cniVersion": "0.3.1",
    "name": "migration-bridge",
    "type": "macvlan",
    "master": "enp1s1",
    "mode": "bridge",
    "ipam": {
      "type": "whereabouts",
      "range": "20.0.0.0/24"
    }
  }'
```

- Edit the HyperConverged custom resource that was created during the OpenShift Virtualization Operator deployment, and specify the name of the new NAD in spec.liveMigrationConfig.network:

```yaml
apiVersion: hco.kubevirt.io/v1beta1
kind: HyperConverged
metadata:
  name: kubevirt-hyperconverged
  namespace: openshift-cnv
spec:
  liveMigrationConfig:
    completionTimeoutPerGiB: 800
    network: migration-network # specify migration network name
    parallelMigrationsPerCluster: 5
    parallelOutboundMigrationsPerNode: 2
    progressTimeout: 150
```

Create a virtual machine

The web console features an interactive wizard that guides you through General, Networking, Storage, Advanced, and Review steps to simplify the process of creating virtual machines. All required fields are marked by a *. When the required fields are completed, you can review and create your virtual machine.

Network Interface Cards (NICs) and storage disks can be created and attached to virtual machines after they have been created.

Use one of these procedures to create a virtual machine:

Creating virtual machines from templates

Creating virtual machines from instance types

Creating virtual machines from CLI

Creating virtual machines from templates

You can create virtual machines from templates provided by Red Hat by using the web console. You can also create custom templates as required.

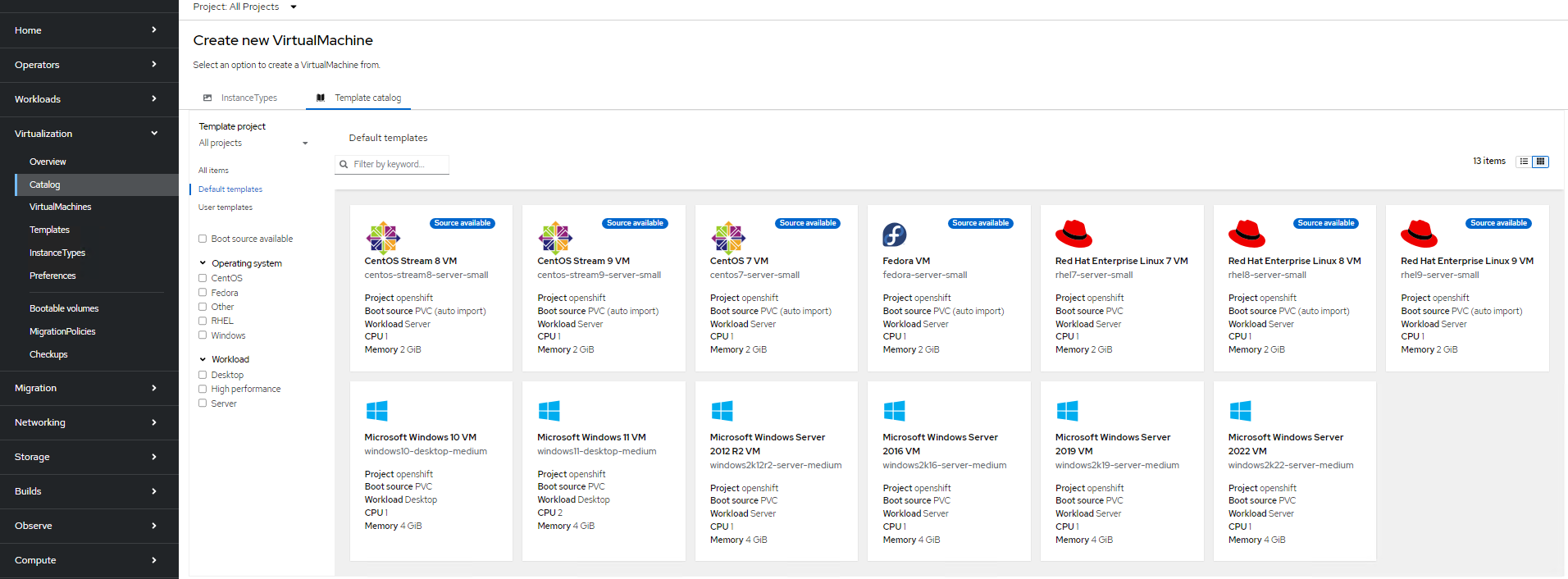

- Log into the OpenShift Container Platform web console and navigate to Virtualization → Catalog → Template Catalog

FIGURE 39. Templates available by default in Virtualization

Apply the filter "Boot source available".

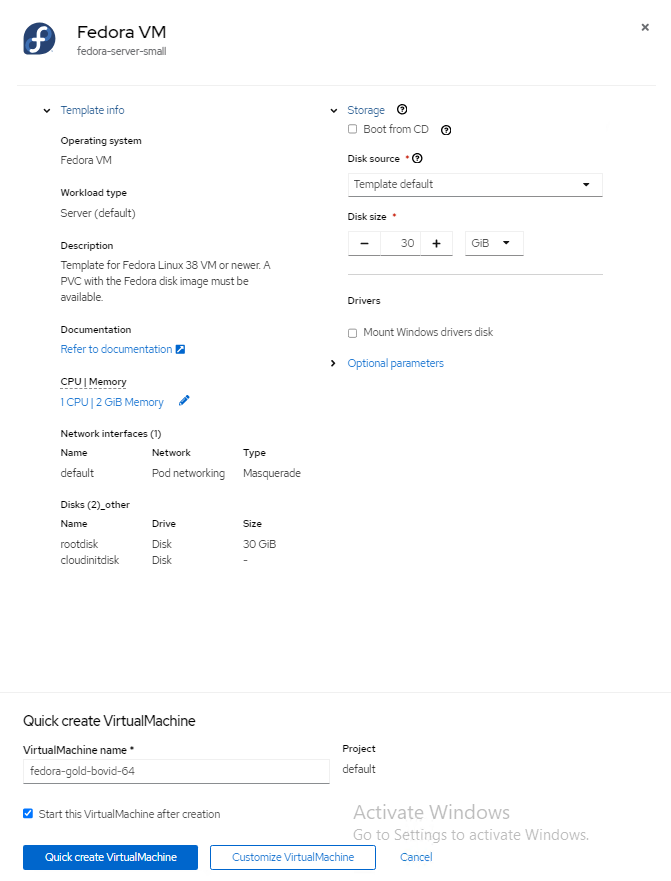

Click the required template (for example, Fedora) to view its details.

Click "Quick create VirtualMachine" to create a VM from the template. You can customize the CPU/Memory/Storage as required.

FIGURE 40. Sample deployment of fedora VM using templates

Creating virtual machines from instance types

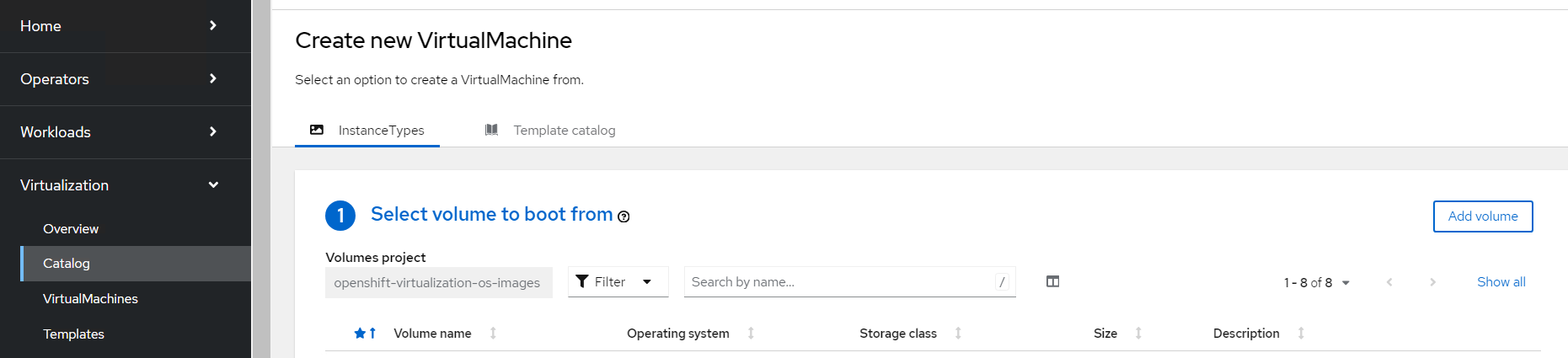

- Log into the OpenShift Container Platform web console and navigate to Virtualization → Catalog → Instance Types

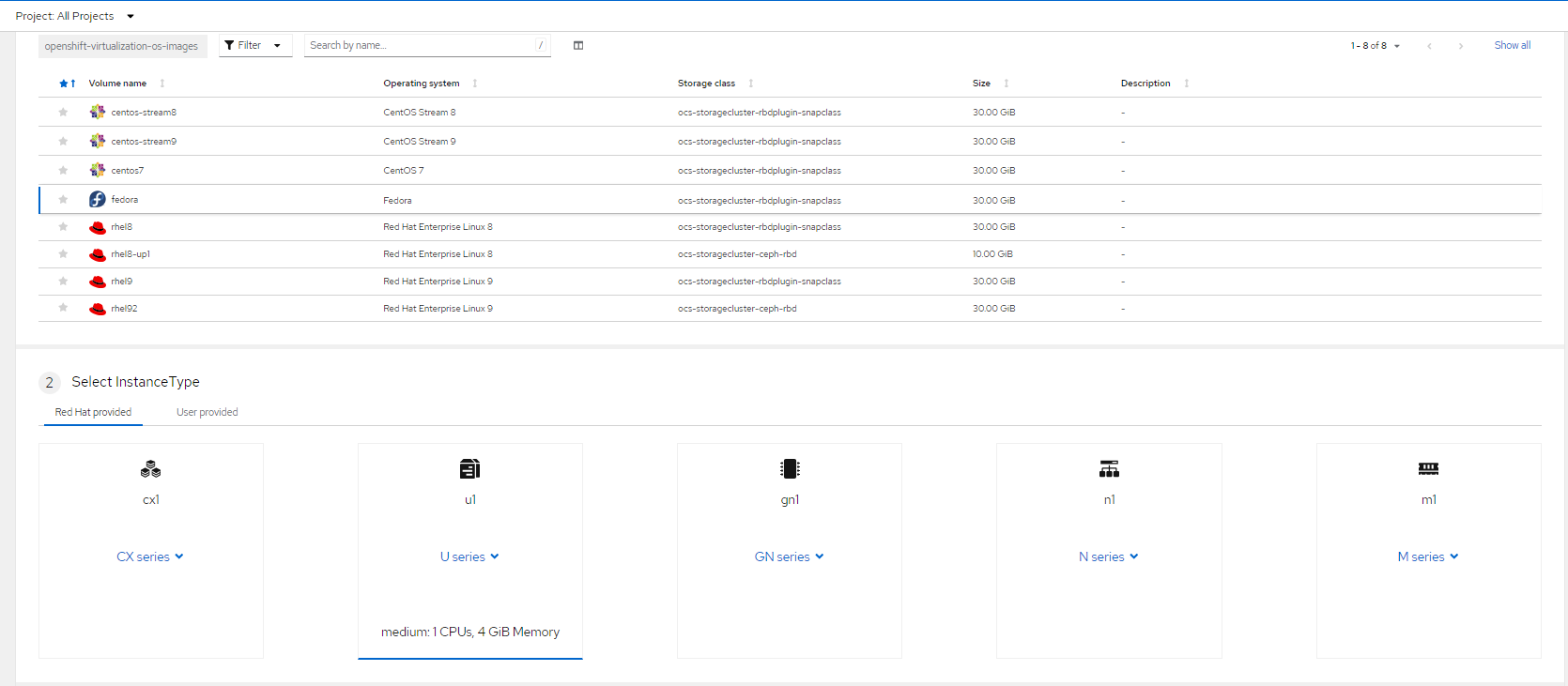

FIGURE 41. Virtual Machine creation from Catalog

Select the bootable volumes

- Images provided by Red Hat are available in the "openshift-virtualization-os-images" namespace.

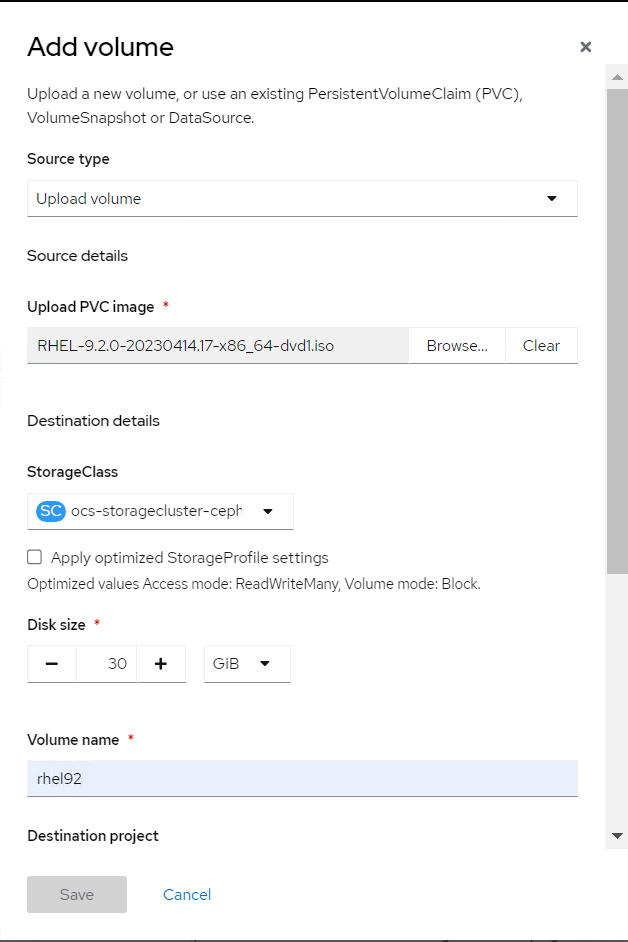

- Alternatively, click Add volume. You can either use an existing volume that contains a boot image, or upload an image to create a new volume (PVC), and then provide the required parameters.

FIGURE 42. Add volumes to import OS images

Click the required boot volume.

Select the required Instance type

FIGURE 43. Selection of Instance type and boot image

- Click "Create VirtualMachine" to create a VM from the instance types.

You can also create a custom instance type by navigating to Virtualization → Instance Types → Create.
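An instance-type-based VM can also be defined in a manifest. This sketch assumes the Red Hat-provided `u1.medium` cluster instance type, the `fedora` cluster preference, and a `fedora` DataSource in the openshift-virtualization-os-images namespace; confirm these names with `oc get virtualmachineclusterinstancetypes`, `oc get virtualmachineclusterpreferences`, and `oc get datasources -n openshift-virtualization-os-images`. The VM name is hypothetical.

```yaml
# Sketch: VM created from an instance type and a preference.
apiVersion: kubevirt.io/v1
kind: VirtualMachine
metadata:
  name: fedora-instancetype-vm            # hypothetical name
spec:
  running: false
  instancetype:
    kind: VirtualMachineClusterInstancetype
    name: u1.medium                       # assumed Red Hat-provided instance type
  preference:
    kind: VirtualMachineClusterPreference
    name: fedora                          # assumed Red Hat-provided preference
  dataVolumeTemplates:
    - metadata:
        name: fedora-instancetype-vm-rootdisk
      spec:
        sourceRef:                        # clone the boot source image
          kind: DataSource
          name: fedora
          namespace: openshift-virtualization-os-images
        storage:
          resources:
            requests:
              storage: 30Gi
  template:
    spec:
      domain:
        devices: {}                       # CPU and memory come from the instance type
      volumes:
        - name: rootdisk
          dataVolume:
            name: fedora-instancetype-vm-rootdisk
```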

Creating virtual machines from CLI

- Create a VirtualMachine manifest.

The following example manifest creates a Fedora VM:

```yaml
apiVersion: kubevirt.io/v1
kind: VirtualMachine
metadata:
  name: my-vm
spec:
  running: false
  template:
    metadata:
      labels:
        kubevirt.io/domain: my-vm
    spec:
      domain:
        devices:
          disks:
            - disk:
                bus: virtio
              name: containerdisk
            - disk:
                bus: virtio
              name: cloudinitdisk
        resources:
          requests:
            memory: 8Gi
      volumes:
        - name: containerdisk
          containerDisk:
            image: kubevirt/fedora-cloud-registry-disk-demo
        - name: cloudinitdisk
          cloudInitNoCloud:
            userData: |
              #cloud-config
              password: fedora
              chpasswd: { expire: False }
```

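- Apply the manifest to create the virtual machine:

```shell
oc apply -f <file-name>.yaml
```

Because the manifest sets running: false, the virtual machine is created in a stopped state. Start it from the web console (Actions → Start) or with virtctl start <vm_name>.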

Viewing virtual machines

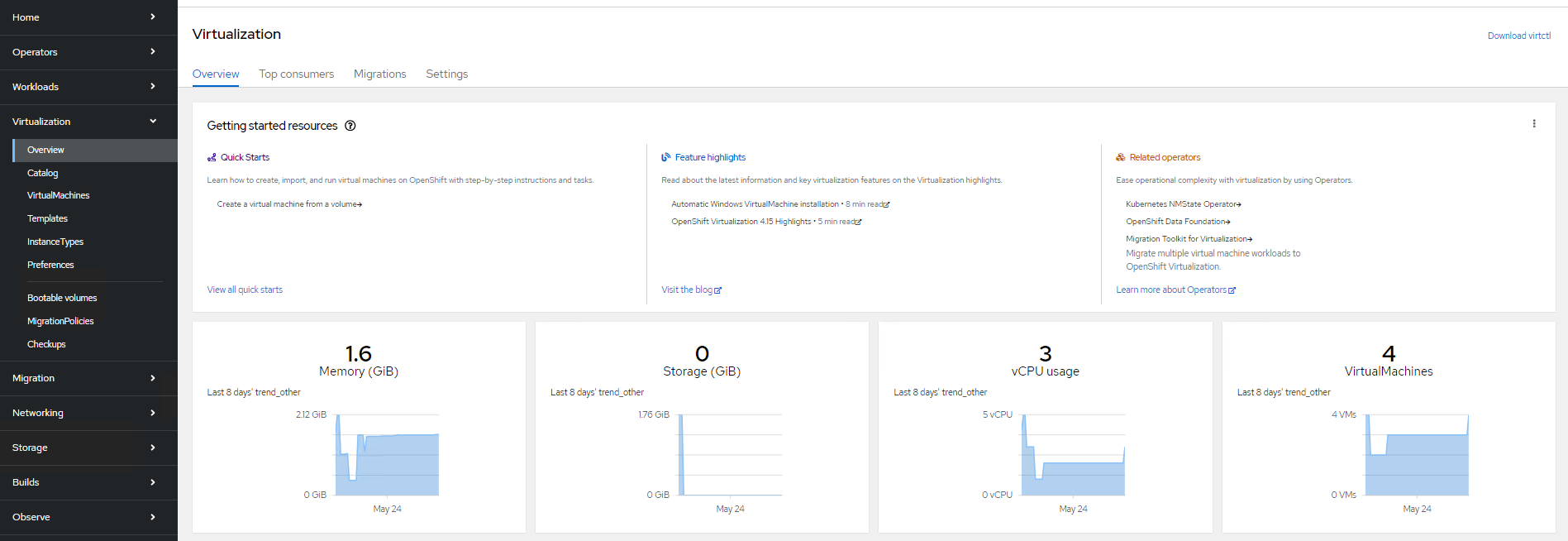

You can check the virtual machine status, metrics, and resource utilization (CPU, memory, storage) for the overall cluster by navigating to Virtualization → Overview.

FIGURE 44. Overview Virtual machines in the Cluster

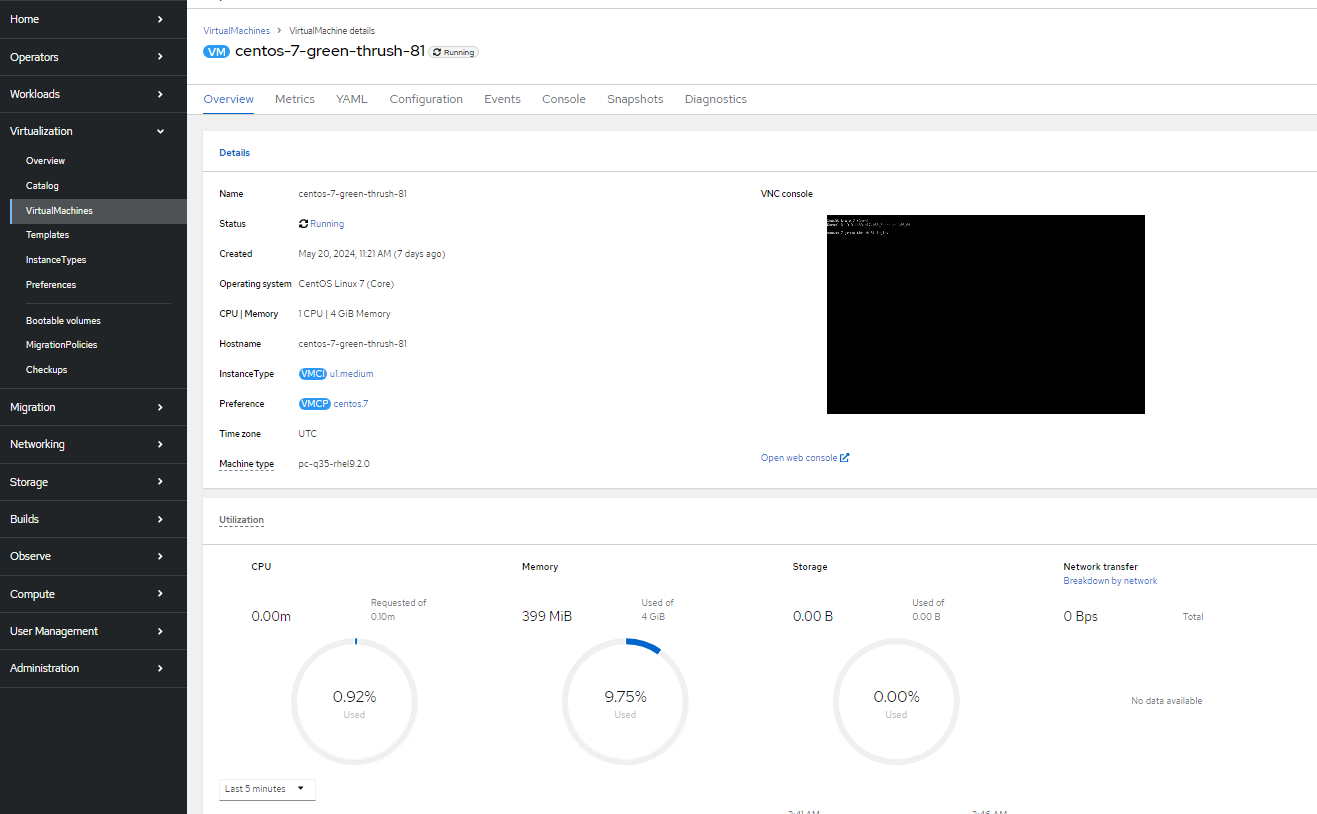

You can also access the configuration details of VMs, networks, and storage volumes.

FIGURE 45. Overview of Sample Virtual machines deployed in the RHOCP platform

Updating a virtual machine

You can update a virtual machine configuration by using the CLI or the web console.

Updating virtual machine configuration using the CLI

- Edit the virtual machine configuration:

```shell
oc edit vm <vm_name> -n <namespace>
```

- Save and close the editor to apply the change. Alternatively, if you maintain the VM manifest in a file, update the file and apply it:

```shell
oc apply -f <file-name>.yaml -n <namespace>
```

Updating virtual machine configuration using the web console

Log into the OpenShift Container Platform web console and navigate to Virtualization → VirtualMachines

Select the virtual machine. For example, to add a disk, navigate to Configuration → Storage → Add disk.

FIGURE 46. Storage disk addition

Specify fields such as Source, Size, and StorageClass as required.

Click Add. In the same way, you can add networks, secrets, and config maps to virtual machines.

Some changes take effect only after the virtual machine is restarted. To restart a virtual machine, navigate to Virtualization → VirtualMachines, click the Options menu beside the virtual machine name, and select Restart. Alternatively, select the virtual machine and click Actions → Restart.

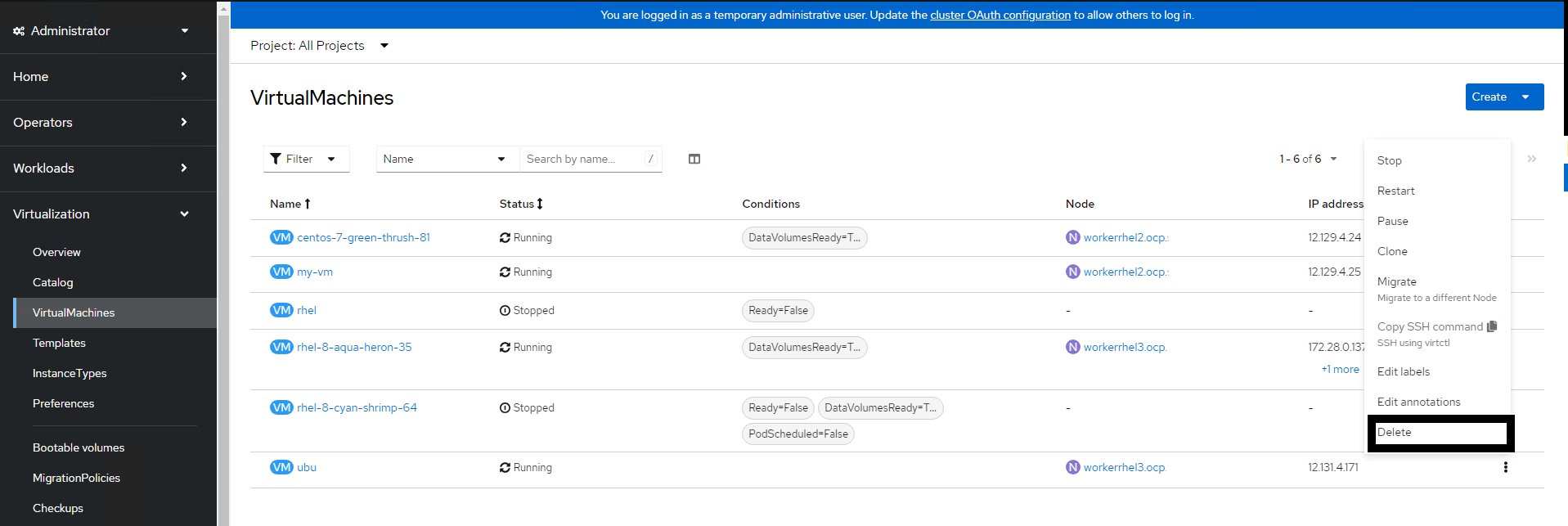

Deleting a virtual machine

You can delete a virtual machine by using the CLI or the web console.

Delete a virtual machine using the CLI

- Run the following command:

```shell
oc delete vm <vm_name> -n <namespace>
```

Delete a virtual machine using the web console

Log into the OpenShift Container Platform web console and navigate to Virtualization → VirtualMachines

Click the Options menu beside the virtual machine name and select Delete. Alternatively, select the virtual machine and click Actions → Delete.

FIGURE 47. Delete a virtual machine

Hot Plugging

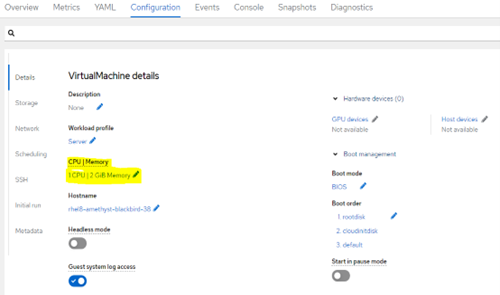

Hot Plugging vCPUs

Hot plugging means enabling or disabling devices while a virtual machine is running.

Prerequisites:

At least two worker nodes in the cluster

Steps:

In the web console, navigate to Virtualization → VirtualMachines.

Select the running virtual machine that you want to change.

Click the Configuration tab, then click Details.

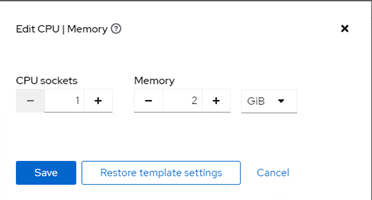

Edit the CPU | Memory settings.

FIGURE 48. Parameter for hot plugging vCPU - 1

- Change the value of Virtual Sockets as required.

FIGURE 49. Parameter for hot plugging vCPU - 2

- Click Save.

Note: CPU and memory cannot be hot plugged on virtual machines created from an instance type.
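Behind the console form, vCPU hot plug changes the VM's CPU topology. The excerpt below is a sketch, assuming a KubeVirt release that supports CPU hot plug through `sockets` and `maxSockets`: raising `sockets` on a running VM adds vCPUs, and `maxSockets` caps how far they can grow. The values are illustrative, and whether the change is applied live depends on the VM rollout strategy configured for your OpenShift Virtualization release.

```yaml
# Sketch: VirtualMachine fields involved in vCPU hot plug (excerpt only).
# Edit with: oc edit vm <vm_name> -n <namespace>
spec:
  template:
    spec:
      domain:
        cpu:
          sockets: 2       # increase this value to hot plug vCPUs
          cores: 1
          threads: 1
          maxSockets: 8    # upper limit for hot plugged sockets (illustrative value)
```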

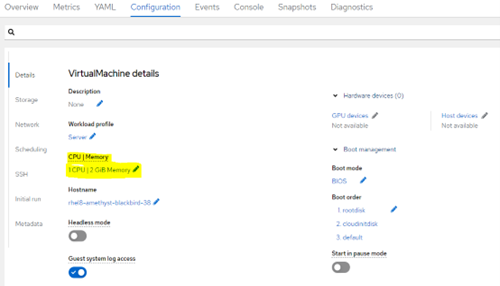

Hot Plugging Memory

You can hot plug virtual memory. Each time memory is hot plugged, it appears as a new memory device in the VM Devices tab in the details view of the virtual machine, up to a maximum of 16 available slots. When the virtual machine is restarted, these devices are cleared from the VM Devices tab without reducing the virtual machine’s memory, allowing you to hot plug more memory devices.

Prerequisites:

At least two worker nodes in the cluster

Steps:

In the web console, navigate to Virtualization → VirtualMachines.

Select the running virtual machine that you want to change.

Click the Configuration tab, then click Details.

Edit the CPU | Memory settings.

FIGURE 50. Parameter for hot plugging Memory

Change the memory value as required.

Click Save.

Note: CPU and memory cannot be hot plugged on virtual machines created from an instance type.
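Memory hot plug works the same way on the guest memory fields. This excerpt is a sketch, assuming a KubeVirt release that supports memory hot plug through `memory.guest` and `memory.maxGuest`; the values are illustrative, and live application again depends on the rollout strategy of your release.

```yaml
# Sketch: VirtualMachine fields involved in memory hot plug (excerpt only).
# Edit with: oc edit vm <vm_name> -n <namespace>
spec:
  template:
    spec:
      domain:
        memory:
          guest: 4Gi        # increase this value to hot plug memory
          maxGuest: 16Gi    # upper limit for hot plugged memory (illustrative value)
```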

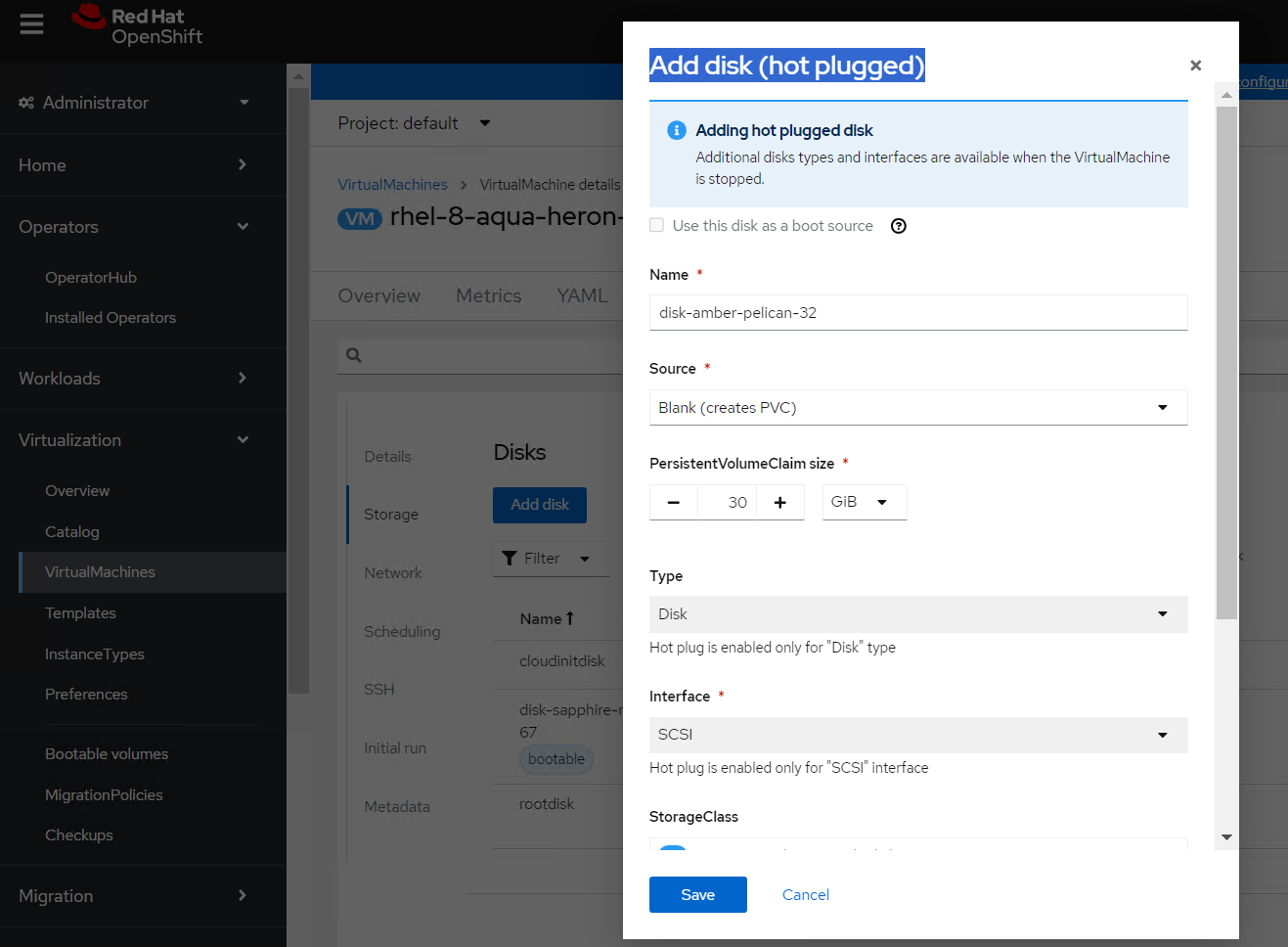

Hot-plugging VM disks

You can add or remove virtual disks without stopping your virtual machine (VM) or virtual machine instance (VMI). Only data volumes and persistent volume claims (PVCs) can be hot plugged and hot-unplugged. You cannot hot plug or hot-unplug container disks.

Prerequisites

You must have a data volume or persistent volume claim (PVC) available for hot plugging.

Steps:

In the web console, navigate to Virtualization → VirtualMachines.

Select the running virtual machine that you want to change.

On the VirtualMachine details page, click Configuration → Storage.

Add a hot plugged disk:

i. Click Add disk.

ii. In the Add disk (hot plugged) window, select the disk from the Source list and click Save.

Note: Each VM must have a virtio-scsi controller so that hot plugged disks can use the SCSI bus.

FIGURE 51. Parameters for hot plugging VM disks
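From the CLI, `virtctl addvolume <vm_name> --volume-name=<data_volume> --persist` performs the same operation. As a sketch of the end result, a persistently hot plugged data volume appears in the VM spec roughly as follows; `my-hotplug-dv` is a hypothetical data volume name, and `bus: scsi` reflects the virtio-scsi requirement noted above.

```yaml
# Sketch: VirtualMachine excerpt after persistently hot plugging a data volume.
# Existing disks and volumes are omitted.
spec:
  template:
    spec:
      domain:
        devices:
          disks:
            - name: my-hotplug-dv
              disk:
                bus: scsi             # hot plugged disks use the SCSI bus
      volumes:
        - name: my-hotplug-dv
          dataVolume:
            name: my-hotplug-dv       # hypothetical data volume
            hotpluggable: true
```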

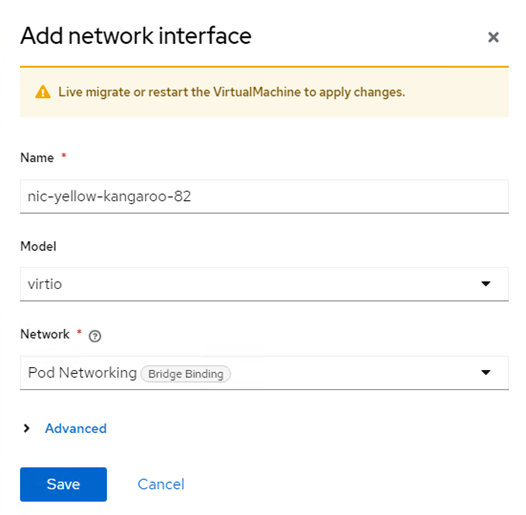

Hot-plugging Network Interface

You can add or remove network interfaces without stopping your virtual machine (VM). OpenShift Virtualization supports hot plugging for interfaces that use the VirtIO device driver.

Prerequisites

• A network attachment definition is configured in the same namespace as your VM.

• At least two nodes in the cluster

Steps:

In the web console, navigate to Virtualization → VirtualMachines.

Select the running virtual machine that you want to change.

On the VirtualMachine details page, click Configuration → Network → Add network interface.

FIGURE 52. Parameters for hot plugging Network Interface

From the Network list, select the network attachment definition (NAD) to attach to the new network interface.

Click Save.

Click Actions → Migrate.

After the VM is migrated to a different node, the newly added network interface is available in the virtual machine.
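The same hot plug can be done by editing the VM and then running `virtctl migrate <vm_name>`. The excerpt below is a sketch of the fields involved: a bridge-bound interface plus a Multus network that references a NAD. The interface name `hotplug-nic` and the NAD name `bridge-network` are hypothetical, and the VM's existing interfaces and networks are omitted.

```yaml
# Sketch: VirtualMachine excerpt with a hot plugged secondary interface.
# Edit with: oc edit vm <vm_name> -n <namespace>
spec:
  template:
    spec:
      domain:
        devices:
          interfaces:
            - name: hotplug-nic
              bridge: {}                    # bridge binding for the secondary network
      networks:
        - name: hotplug-nic                 # must match the interface name
          multus:
            networkName: bridge-network     # hypothetical NAD in the VM's namespace
```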

Live migration

Introduction

Live migration is the process of moving a running virtual machine (VM) to another node in the cluster without interrupting the virtual workload. By default, live migration traffic is encrypted using Transport Layer Security (TLS).


Live migration requirements

A dedicated Multus network for live migration is highly recommended.

Persistent volume claims (PVCs) used by the VM must have the ReadWriteMany (RWX) access mode.

The CPUs of all cluster nodes that host virtual machines must support the host model.
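A minimal sketch of a data volume that satisfies the RWX requirement. The name, size, and `volumeMode: Block` are assumptions, and `<storage_class>` must be replaced with a StorageClass that actually supports RWX in the chosen volume mode.

```yaml
# Sketch: blank data volume with ReadWriteMany access, suitable for live migration.
apiVersion: cdi.kubevirt.io/v1beta1
kind: DataVolume
metadata:
  name: rwx-data-disk                   # hypothetical name
spec:
  source:
    blank: {}                           # create an empty disk
  storage:
    storageClassName: <storage_class>   # an RWX-capable StorageClass
    accessModes:
      - ReadWriteMany
    volumeMode: Block                   # assumption; depends on the StorageClass
    resources:
      requests:
        storage: 10Gi
```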

Configuring live migration

You can configure the following live migration settings to ensure that the migration process does not saturate the cluster:

Limits and timeouts

Maximum number of migrations per node or cluster

Edit the HyperConverged CR and add the necessary live migration parameters:

```shell
oc edit hyperconverged kubevirt-hyperconverged -n openshift-cnv
```

Sample configuration parameters:

```yaml
apiVersion: hco.kubevirt.io/v1beta1
kind: HyperConverged
metadata:
  name: kubevirt-hyperconverged
  namespace: openshift-cnv
spec:
  liveMigrationConfig:
    bandwidthPerMigration: 64Mi
    completionTimeoutPerGiB: 800
    parallelMigrationsPerCluster: 5
    parallelOutboundMigrationsPerNode: 2
    progressTimeout: 150
```
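To see how these settings interact, consider a VM with 8 GiB of memory (as in the my-vm manifest) and the sample values above. completionTimeoutPerGiB is expressed in seconds per GiB of memory (disk sizes are also counted when disks are block-migrated, which this example assumes is not the case), and bandwidthPerMigration is a per-migration limit in bytes per second, so 64Mi means 64 MiB/s:

$$
\begin{aligned}
T_{\text{cancel}} &= 800\ \tfrac{\text{s}}{\text{GiB}} \times 8\ \text{GiB} = 6400\ \text{s} \approx 107\ \text{min} \\
t_{\text{copy}} &\ge \frac{8 \times 1024\ \text{MiB}}{64\ \text{MiB/s}} = 128\ \text{s}
\end{aligned}
$$

The copy time is only a lower bound for a single pass over memory, because pages that the guest dirties during the copy must be sent again. Independently, progressTimeout: 150 cancels the migration if the memory copy makes no progress for 150 seconds.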

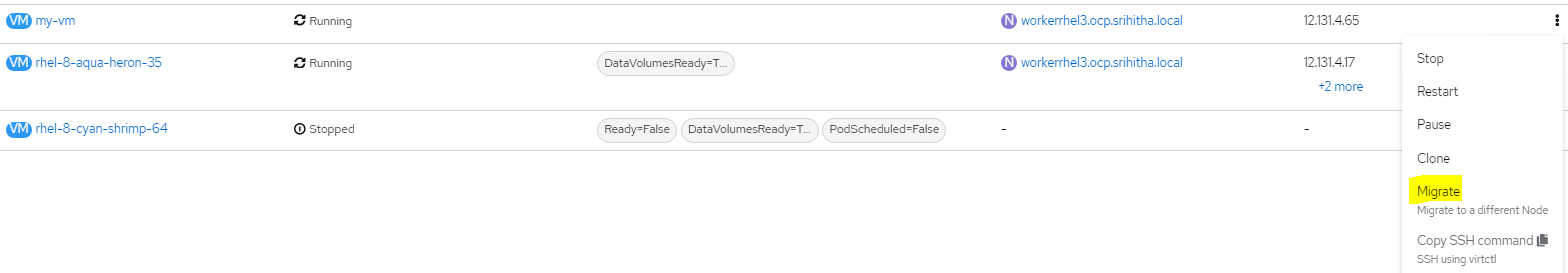

Initiating Live Migration

You can live migrate virtual machines by using the web console or the CLI. The live migration requirements listed above must be met for any virtual machine you migrate.

Initiating Live Migration using web console

Navigate to Virtualization → VirtualMachines in the web console.

Select Migrate from the Options menu beside a VM.

FIGURE 53. Live Migration between nodes

- Click Migrate.

Initiating Live Migration using CLI

- Create and apply the VirtualMachineInstanceMigration manifest for the VM:

```yaml
apiVersion: kubevirt.io/v1
kind: VirtualMachineInstanceMigration
metadata:
  name: <migration_name>
spec:
  vmiName: <vm_name>
```

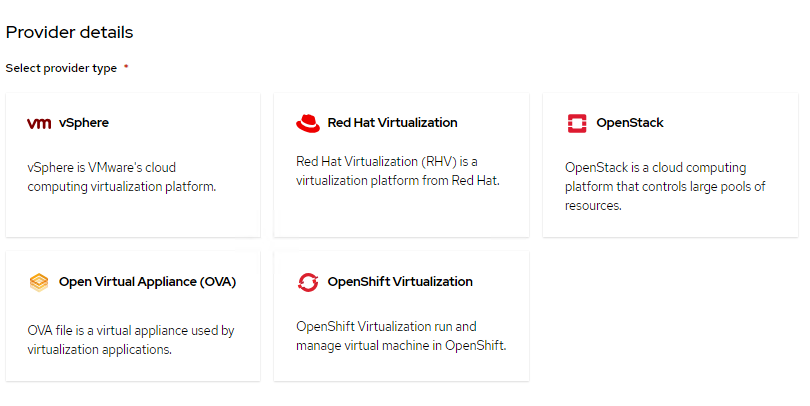

Migration Toolkit for Virtualization

Introduction

The Migration Toolkit for Virtualization (MTV) enables you to migrate virtual machines from VMware vSphere, Red Hat Virtualization, or OpenStack to OpenShift Virtualization running on Red Hat OpenShift Container Platform.

FIGURE 54. Migration Toolkit for Virtualization supported providers


MTV simplifies the migration process, allowing you to seamlessly move VM workloads to OpenShift Virtualization

MTV supports cold migration, which is the default migration type. During cold migration, the source virtual machines are shut down while the data is copied. Cold migration is supported from:

VMware vSphere

Red Hat Virtualization (RHV)

OpenStack

Remote OpenShift Virtualization clusters

MTV supports warm migration from VMware vSphere and from RHV. In warm migration, most of the data is copied during the pre-copy stage while the source virtual machines (VMs) are running. Then the VMs are shut down and the remaining data is copied during the cutover stage.

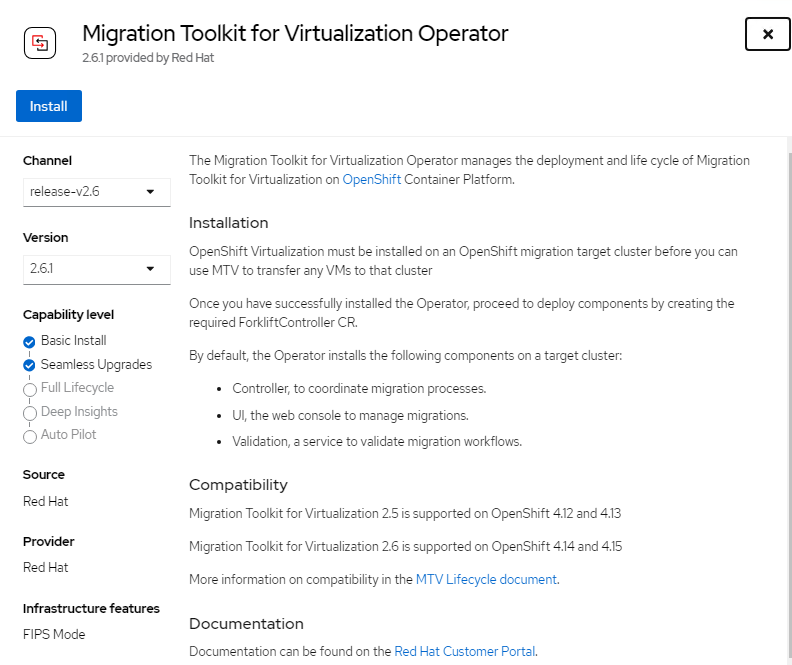

Installing MTV Operator

You can install the MTV Operator by using the web console or the CLI.

Installing MTV Operator using web console

- In the web console, navigate to Operators → OperatorHub.

- Use the Filter by keyword field to search for mtv-operator.

- Click the Migration Toolkit for Virtualization Operator tile and then click Install.

FIGURE 55. Migration Toolkit Operator deployment


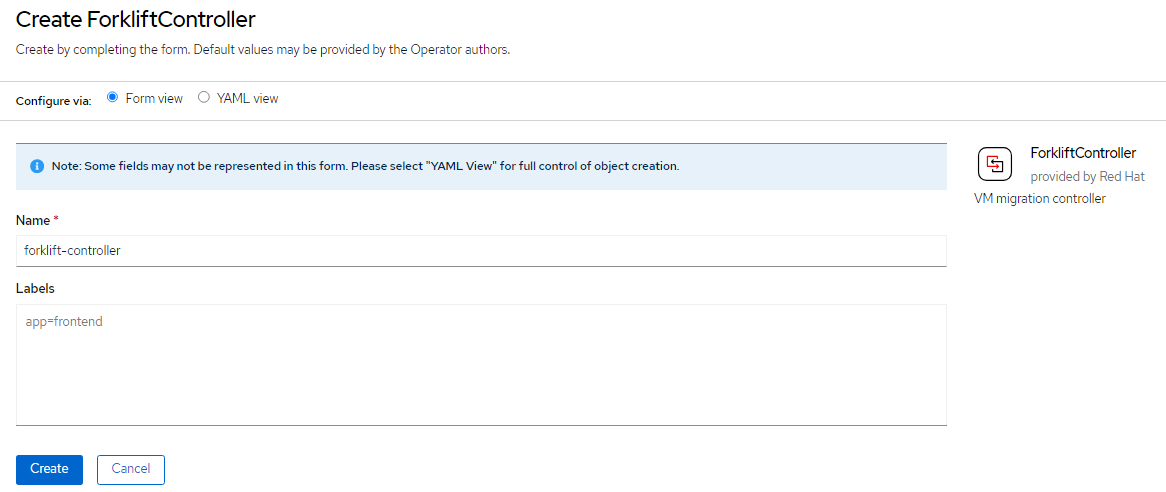

- After the Migration Toolkit for Virtualization Operator is installed, create a ForkliftController instance.

FIGURE 56. ForkliftController instance creation


Installing MTV Operator using CLI

- Create the openshift-mtv project:

```shell
$ cat << EOF | oc apply -f -
apiVersion: project.openshift.io/v1
kind: Project
metadata:
  name: openshift-mtv
EOF
```

- Create an OperatorGroup CR called migration:

```shell
$ cat << EOF | oc apply -f -
apiVersion: operators.coreos.com/v1
kind: OperatorGroup
metadata:
  name: migration
  namespace: openshift-mtv
spec:
  targetNamespaces:
    - openshift-mtv
EOF
```

- Create a Subscription CR for the Operator:

```shell
$ cat << EOF | oc apply -f -
apiVersion: operators.coreos.com/v1alpha1
kind: Subscription
metadata:
  name: mtv-operator
  namespace: openshift-mtv
spec:
  channel: release-v2.6
  installPlanApproval: Automatic
  name: mtv-operator
  source: redhat-operators
  sourceNamespace: openshift-marketplace
  startingCSV: "mtv-operator.v2.6.1"
EOF
```

- Create a ForkliftController CR:

```shell
$ cat << EOF | oc apply -f -
apiVersion: forklift.konveyor.io/v1beta1
kind: ForkliftController
metadata:
  name: forklift-controller
  namespace: openshift-mtv
spec:
  olm_managed: true
EOF
```

Migration of Virtual Machines

You can migrate virtual machines to OpenShift Virtualization by using the MTV web console from the following providers:

- VMware vSphere

- Red Hat Virtualization

- OpenStack

- Open Virtual Appliances (OVAs) that were created by VMware vSphere

- OpenShift Virtualization

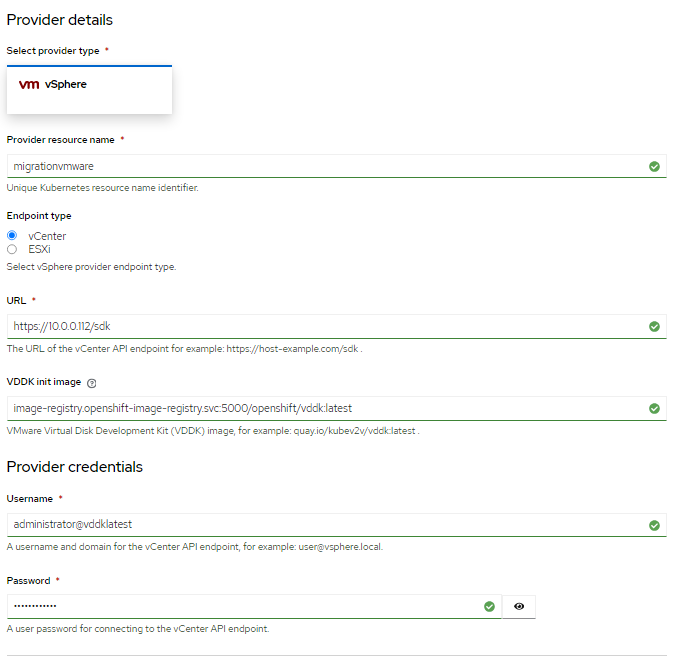

Adding providers

In the web console, navigate to Migration → Providers for virtualization and click Create Provider.

Select the source provider type (this example uses VMware vSphere).

Specify the required provider details

FIGURE 57. Addition of VMware vSphere


Note: Provide the VMware Virtual Disk Development Kit (VDDK) init image to accelerate migrations.

Choose one of the following options for validating CA certificates

- Custom CA certificate

- System CA certificate

- Skip certificate validation

Click Create Provider
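The provider can also be created declaratively. The following is a sketch based on the MTV custom resources as we understand them (a credentials Secret plus a Provider CR); the resource names are hypothetical, values in angle brackets must be replaced, and the Secret keys and labels should be verified against the documentation for your MTV version.

```yaml
# Sketch: vSphere source provider defined from the CLI (verify fields for your MTV version).
apiVersion: v1
kind: Secret
metadata:
  name: vsphere-credentials            # hypothetical name
  namespace: openshift-mtv
  labels:
    createdForProviderType: vsphere
    createdForResourceType: providers
type: Opaque
stringData:
  user: <vcenter_user>
  password: <vcenter_password>
  url: https://<vcenter_fqdn>/sdk
  insecureSkipVerify: "false"          # "true" corresponds to Skip certificate validation
  # Omit cacert to validate against the system CA certificates.
  cacert: |
    <ca_certificate>
---
apiVersion: forklift.konveyor.io/v1beta1
kind: Provider
metadata:
  name: vsphere-provider               # hypothetical name
  namespace: openshift-mtv
spec:
  type: vsphere
  url: https://<vcenter_fqdn>/sdk
  settings:
    vddkInitImage: <registry>/vddk:<tag>   # VDDK init image to accelerate migrations
    sdkEndpoint: vcenter
  secret:
    name: vsphere-credentials
    namespace: openshift-mtv
```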

Selecting a migration network for a VMware source provider

In the web console, navigate to Migration → Providers for virtualization.

Click the host in the Hosts column beside a provider to view a list of hosts.

Select one or more hosts and click Select migration network.

Specify the following fields:

- Network: Network name

- ESXi host admin username: For example, root

- ESXi host admin password: Password

Click Save.

Verify that the status of each host is Ready.

Migration plan creation

Creating and running a migration plan starting on Providers for virtualization

In the web console, navigate to Migration → Providers for virtualization.

Click the appropriate source provider and open its Virtual Machines tab.

Select the VMs you want to migrate and click Create migration plan.

The Create migration plan page displays the source provider's name and suggests a target provider and namespace, a network map, and a storage map.

Fill in the editable fields as required.

Click Add mapping to edit a suggested network mapping or storage mapping, or to add one or more mappings.

The resulting storage and network maps can be viewed in Migration → StorageMaps for virtualization and Migration → NetworkMaps for virtualization.

- Click Create migration plan.

You can create the migration plan for the Source provider as well.
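A migration plan can also be expressed as a Plan custom resource. This is a sketch under stated assumptions: the source provider name `vsphere-provider` is hypothetical, the destination `host` is assumed to be the default provider MTV creates for the local cluster, and the network and storage maps are referenced by name (for example, maps created in the console steps above).

```yaml
# Sketch: MTV migration plan (verify fields for your MTV version).
apiVersion: forklift.konveyor.io/v1beta1
kind: Plan
metadata:
  name: <plan_name>
  namespace: openshift-mtv
spec:
  warm: false                      # true for warm migration (vSphere and RHV sources)
  provider:
    source:
      name: vsphere-provider       # hypothetical source provider
      namespace: openshift-mtv
    destination:
      name: host                   # assumed local OpenShift Virtualization provider
      namespace: openshift-mtv
  map:
    network:
      name: <network_map>
      namespace: openshift-mtv
    storage:
      name: <storage_map>
      namespace: openshift-mtv
  targetNamespace: <target_namespace>
  vms:
    - name: <source_vm_name>
```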

Running a migration plan

In the web console, navigate to Migration → Plans for virtualization.

You can see the list of migration plans created for source and target providers, the number of virtual machines (VMs) being migrated, the status, and the description of each plan.

Click Start beside a migration plan to start the migration.

Click Start in the confirmation window that opens.

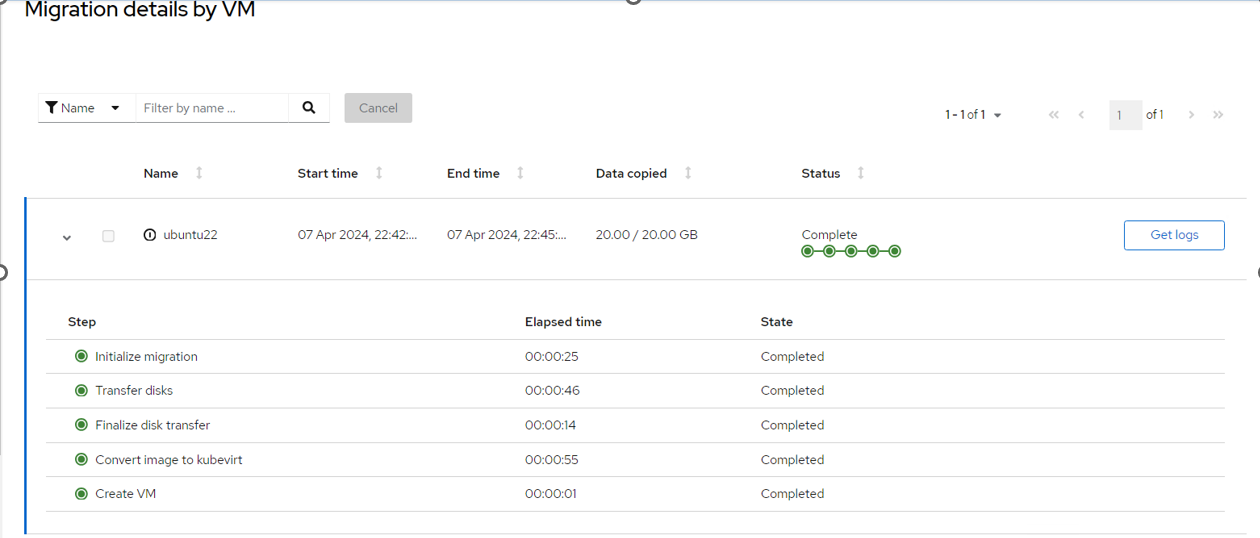

The Migration details by VM screen opens and displays the migration's progress. Warm migration only: the pre-copy stage starts; click Cutover to complete the migration.

Click a migration's Status to view the details of the migration.

The Migration details by VM screen opens, displaying the start and end times of the migration and the amount of data copied for each VM.
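Starting a plan from the console corresponds to creating a Migration custom resource that references the plan. A minimal sketch, with placeholder names; the optional cutover line applies to warm migration only and is left commented out so that the manifest applies unchanged for a cold migration.

```yaml
# Sketch: start an MTV migration plan from the CLI (verify fields for your MTV version).
apiVersion: forklift.konveyor.io/v1beta1
kind: Migration
metadata:
  name: <migration_name>
  namespace: openshift-mtv
spec:
  plan:
    name: <plan_name>
    namespace: openshift-mtv
  # Warm migration only: optional scheduled cutover time (ISO 8601 with UTC offset).
  # cutover: "<cutover_time>"
```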

Warm Migration

Warm migration copies most of the data during the pre-copy stage. Then the VMs are shut down and the remaining data is copied during the cutover stage. FIGURE 58 shows the results of migrating a sample Ubuntu virtual machine with a 10 GB OS disk and a 10 GB data disk.

FIGURE 58. Warm Migration output


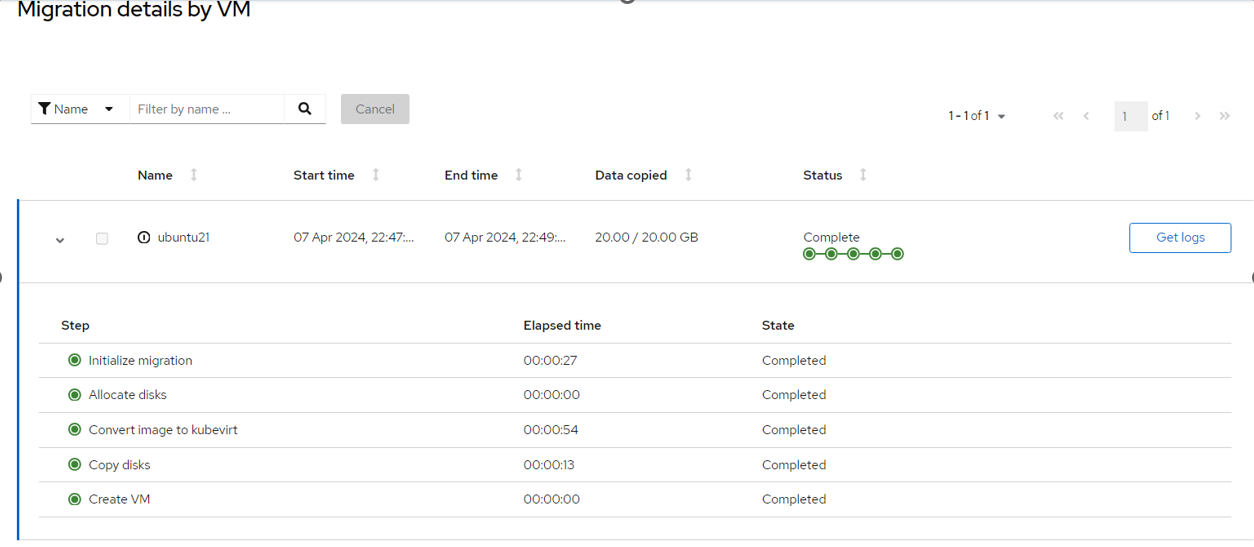

Cold Migration

During cold migration, the virtual machines (VMs) are shut down while the data is copied. FIGURE 59 shows the results of migrating a sample Ubuntu virtual machine with a 10 GB OS disk and a 10 GB data disk.

FIGURE 59. Cold Migration output
