# Red Hat OpenShift Container Platform Cluster deployment

This section describes the process to deploy Red Hat OpenShift Container Platform 4.

Prerequisites

Bootstrap node should be up.

Master nodes should be up and ready.

# Deploying an OpenShift cluster

This section covers the steps to deploy a Red Hat OpenShift Container Platform 4 cluster with master nodes and worker nodes running RHCOS. After the operating system is installed on the nodes, verify the installation and then perform the following steps:

Log in to the installer VM as a non-root user.

Execute the following command to bootstrap the nodes.

> $BASE_DIR/installer/library/openshift_components/openshift-install wait-for bootstrap-complete --log-level=debug

The output is as follows.

> DEBUG OpenShift Installer v4.4
> DEBUG Built from commit 425e4ff0037487e32571258640b39f56d5ee5572
> INFO Waiting up to 30m0s for the Kubernetes API at https://api.ocp.pxelocal.local:6443...
> INFO API v1.14.4+76aeb0c up
> INFO Waiting up to 30m0s for bootstrapping to complete...
> DEBUG Bootstrap status: complete
> INFO It is now safe to remove the bootstrap resources

NOTE

You can shut down or remove the bootstrap node after the completion of step 1 and step 2.
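Before running the installer commands in this section, it can help to confirm that `$BASE_DIR` points at a directory that actually contains the `openshift-install` binary. The following is a minimal sketch under that assumption; the function name `installer_path` is hypothetical, and the directory layout is taken from the bootstrap command above.

```shell
#!/bin/sh
# Hypothetical helper: print the installer path if it exists and is executable.
# Layout assumed from the bootstrap command:
#   $BASE_DIR/installer/library/openshift_components/openshift-install
installer_path() {
    base_dir="$1"
    if [ -z "$base_dir" ]; then
        echo "BASE_DIR is not set" >&2
        return 1
    fi
    bin="$base_dir/installer/library/openshift_components/openshift-install"
    if [ ! -x "$bin" ]; then
        echo "installer not found or not executable: $bin" >&2
        return 1
    fi
    printf '%s\n' "$bin"
}

# Usage (commented out so the sketch has no side effects):
# installer=$(installer_path "$BASE_DIR") && "$installer" wait-for bootstrap-complete --log-level=debug
```

Failing early with a clear message avoids a less obvious "command not found" error when the variable is unset in a new shell session.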

Run the following command to set the KUBECONFIG environment variable to the kubeconfig path.

> export KUBECONFIG=$BASE_DIR/installer/ignitions/auth/kubeconfig

Run the following command to append the oc utility path to the PATH environment variable.

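> export PATH=$PATH:$BASE_DIR/installer/library/openshift_components

Provide storage for the image registry. Execute the following command to set the image registry storage to an empty directory (`emptyDir`). Data in an empty directory is not persistent and is lost when the registry pod restarts.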

> oc patch configs.imageregistry.operator.openshift.io cluster --type merge --patch '{"spec":{"storage":{"emptyDir":{}}}}'

Execute the following command to complete the Red Hat OpenShift Container Platform 4 cluster installation.

> $BASE_DIR/installer/library/openshift_components/openshift-install wait-for install-complete --log-level=debug

The output should resemble the following.

> DEBUG OpenShift Installer v4.4
> DEBUG Built from commit 6ed04f65b0f6a1e11f10afe658465ba8195ac459
> INFO Waiting up to 30m0s for the cluster at https://api.rrocp.pxelocal.local:6443 to initialize...
> DEBUG Still waiting for the cluster to initialize: Working towards 4.4: 99% complete
> DEBUG Still waiting for the cluster to initialize: Working towards 4.4: 99% complete, waiting on authentication, console,image-registry
> DEBUG Still waiting for the cluster to initialize: Working towards 4.4: 99% complete
> DEBUG Still waiting for the cluster to initialize: Working towards 4.4: 100% complete, waiting on image-registry
> DEBUG Still waiting for the cluster to initialize: Cluster operator image-registry is still updating
> DEBUG Still waiting for the cluster to initialize: Cluster operator image-registry is still updating
> DEBUG Cluster is initialized
> INFO Waiting up to 10m0s for the openshift-console route to be created...
> DEBUG Route found in openshift-console namespace: console
> DEBUG Route found in openshift-console namespace: downloads
> DEBUG OpenShift console route is created
> INFO Install complete!
> INFO To access the cluster as the system:admin user when using 'oc', run 'export KUBECONFIG=/opt/hpe/solutions/ocp/hpe-solutions-openshift/synergy/scalable/installer/library/ignitions/auth/kubeconfig'
> INFO Access the OpenShift web-console here: https://console-openshift-console.apps.ocp.pxelocal.local
> INFO Login to the console with user: kubeadmin, password: a6hKv-okLUA-Q9p3q-UXLc3

NOTE

Make a note of the cluster URL and the username for future access.

If the password is lost or forgotten, view the file kubeadmin-password, located on the installer machine at $BASE_DIR/installer/library/ignitions/auth/.
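As a minimal sketch of the password recovery step, the helper below prints the stored password from the `auth` directory. The function name `kubeadmin_password` is hypothetical; the file location is the one stated above, relative to `$BASE_DIR`.

```shell
#!/bin/sh
# Hypothetical helper: print the kubeadmin password written by the installer.
# Path assumed from the text above:
#   $BASE_DIR/installer/library/ignitions/auth/kubeadmin-password
kubeadmin_password() {
    pw_file="$1/installer/library/ignitions/auth/kubeadmin-password"
    if [ ! -r "$pw_file" ]; then
        echo "cannot read $pw_file" >&2
        return 1
    fi
    cat "$pw_file"
}

# Usage: kubeadmin_password "$BASE_DIR"
```

Because the file holds a cluster administrator credential, it is worth checking that only the installer user can read it.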

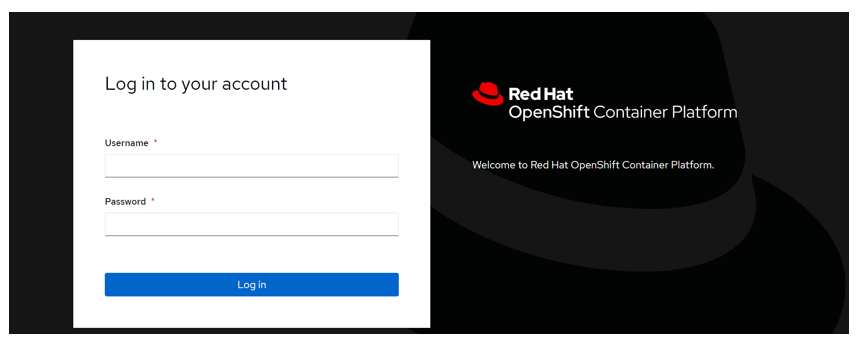

Figure 18 shows the OpenShift Container Platform console view upon successful deployment.

Figure 18. OpenShift Web Console login screen

# Validating OpenShift Container Platform deployment

After the cluster is up and running with the OpenShift Local Storage Operator, validate the cluster configuration by deploying a MongoDB pod with a persistent volume and the Yahoo! Cloud Serving Benchmark (YCSB). This section covers the steps to validate the OpenShift Container Platform deployment.

Prerequisites

OCP 4 cluster must be installed.

Use local storage or OCS to claim persistent volume (PV).

The MongoDB instance supports only local file system storage or the OCS file system.

Note

Block storage is not supported.
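One way to check the storage prerequisite is to list persistent volumes together with their volume mode and keep only those that are file system volumes and still available. The sketch below filters the output of `oc get pv -o custom-columns=...`; the function name `available_fs_pvs` and the PV names in the usage comment are hypothetical, and PVs that report no volume mode are skipped by this sketch.

```shell
#!/bin/sh
# Hypothetical helper: read three-column PV output (NAME MODE STATUS) on stdin
# and print the names of PVs that are Filesystem mode and Available.
available_fs_pvs() {
    awk 'NR > 1 && $2 == "Filesystem" && $3 == "Available" { print $1 }'
}

# Usage against a live cluster (commented out; requires a logged-in oc session):
# oc get pv -o custom-columns=NAME:.metadata.name,MODE:.spec.volumeMode,STATUS:.status.phase \
#     | available_fs_pvs
```

An empty result suggests that no suitable PV is free for the MongoDB persistent volume claim.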

# Deploying MongoDB application

Log in to the installer VM as a non-root user.

Use the following command to download the Red Hat scripts specific to the MongoDB application from https://github.com/red-hat-storage/SAWORF.

$ sudo git clone https://github.com/red-hat-storage/SAWORF.git

From within the red-hat-storage repository, navigate to the folder SAWORF/OCS3/MongoDB/blog2.

Update the create_and_load.sh script with local storage, OCS, Nimble storage, and 3PAR storage in place of the glusterfs content. An example is as follows.

mongodb_ip=$(oc get svc -n ${PROJECT_NAME} | grep -v **local storage** | grep mongodb | awk '{print $3}')

Create the MongoDB and YCSB pods and load the sample data. Update the following command with appropriate values for the command line parameters, then execute it.

> ./create_and_load_mongodb $PROJECT_NAME $OCP_TEMPLATE $MONGODB_MEMORY_LIMIT $PV_SIZE $MONGODB_VERSION $YCSB_WORKLOAD $YCSB_DISTRIBUTION $YCSB_RECORDCOUNT $YCSB_OPERATIONCOUNT $YCSB_THREADS $LOG_DIR

An example command is as follows.

> ./create_and_load_mongodb dbtest mongodb-persistent 4Gi 10Gi 3.6 workloadb uniform 4000 4000 8 root /mnt/data/

The output is as follows.

> Deploying template "openshift/mongodb-persistent" to project dbtest
>
> MongoDB
> ---------
> MongoDB database service, with persistent storage. For more information about using this template, including OpenShift considerations, see documentation in the upstream repository: <https://github.com/sclorg/mongodb-container>.
>
> The following service(s) have been created in the project: mongodb.
>
> Username: redhat
> Password: redhat
> Database Name: redhatdb
> Connection URL: mongodb://redhat:redhat@mongodb/redhatdb
>
> For more information about using this template, including OpenShift considerations, see documentation in the upstream repository: <https://github.com/sclorg/mongodb-container>.
>
> With parameters:
> * Memory Limit=4Gi
> * Namespace=openshift
> * Database Service Name=mongodb
> * MongoDB Connection Username=redhat
> * MongoDB Connection Password=redhat
> * MongoDB Database Name=redhatdb
> * MongoDB Admin Password=redhat
> * Volume Capacity=10Gi
> * Version of MongoDB Image=3.6
>
> Creating resources ...
> secret "mongodb" created
> service "mongodb" created
> error: persistentvolumeclaims "mongodb" already exists
> deploymentconfig.apps.openshift.io "mongodb" created
>
> Failed
> pod/ycsb-pod created

Note

Scaling to more than one replica is not supported. You must have a persistent volume available in your cluster to use this template.
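The service lookup that the load script performs (`oc get svc`, an exclusion `grep -v`, `grep mongodb`, then `awk '{print $3}'`) can be tried on sample input to see what it selects. The following is a minimal sketch; the function name `mongodb_service_ip` is hypothetical, the exclusion pattern is passed as an argument, and the service names and addresses in the usage note are illustrative only.

```shell
#!/bin/sh
# Hypothetical helper: read `oc get svc` output on stdin, drop lines that match
# the exclusion pattern in $1, keep the mongodb service, and print column 3
# (CLUSTER-IP in the default `oc get svc` layout).
mongodb_service_ip() {
    exclude="$1"
    grep -v -e "$exclude" | grep mongodb | awk '{print $3}'
}

# Usage against a live cluster (commented out; requires a logged-in oc session):
# oc get svc -n "$PROJECT_NAME" | mongodb_service_ip glusterfs
```

With a service list that contains both `glusterfs-dynamic-mongodb` and `mongodb`, excluding `glusterfs` leaves only the cluster IP of the `mongodb` service.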

Execute the following command to run the check_db_size script.

$ ./check_db_size $PROJECT_NAME

The output is as follows.

> MongoDB shell version v3.6.12
> connecting to: mongodb://172.x.x.x:27017/redhatdb?gssapiServiceName=mongodb
> Implicit session: session {"id" : UUID("c0a76ddc-ea0b-4fc-88fd-045d0f98b2") }
> MongoDB server version: 3.6.3
> {
> "db" : "redhatdb",
> "collections" : 1,
> "views" : 0,
> "objects" : 4000,
> "avgObjSize" : 1167.877,
> "dataSize" : 0.004350680857896805,
> "storageSize" : 0.00446319580078125,
> "numExtents" : 0,
> "indexes" : 1,
> "indexSize" : 0.0001068115234375,
> "fsUsedSize" : 1.0311393737792969,
> "fsTotalSize" : 99.951171875,
> "ok" : 1
> }
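A quick sanity check on this output is to compare the reported `objects` value with the record count passed to the load script (4000 in the example command). The sketch below extracts that field from the text; the function name `db_object_count` is hypothetical, and treating a matching count as evidence of a complete load is an assumption rather than something the scripts guarantee.

```shell
#!/bin/sh
# Hypothetical helper: read check_db_size output on stdin and print the
# integer value of the "objects" field (first occurrence only).
db_object_count() {
    sed -n 's/.*"objects"[[:space:]]*:[[:space:]]*\([0-9][0-9]*\).*/\1/p' | head -n 1
}

# Usage (commented out; requires the SAWORF scripts and a running deployment):
# count=$(./check_db_size "$PROJECT_NAME" | db_object_count)
# [ "$count" = "$YCSB_RECORDCOUNT" ] && echo "record count matches"
```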

# Verifying MongoDB deployment

Execute the following command to verify the persistent volume associated with MongoDB pods.

$ oc get pv | grep mongodb

The output is as follows.

> local-pv-e7f10f65 100Gi RWO Delete Bound dbtest/mongodb local-sc 26h

Execute the following command to verify the persistent volume claim (PVC) associated with MongoDB pods.

$ oc get pvc

The output is as follows.

> local-pv-e7f10f65 100Gi RWO Delete Bound dbtest/mongodb local-sc 26h

Execute the following command to ensure MongoDB and YCSB pods are up and running.

$ oc get pod

The output is as follows.

> NAME READY STATUS RESTARTS AGE
> mongodb-1-deploy 0/1 Completed 0 3m40s
> mongodb-1-skbwq 1/1 Running 0 3m36s
> ycsb-pod 1/1 Running 0 3m41s
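The pod check can also be scripted by scanning the STATUS column for anything other than `Running` or `Completed`. The following is a minimal sketch; the function name `unhealthy_pods` is hypothetical, and treating only those two statuses as healthy is an assumption based on the output shown above.

```shell
#!/bin/sh
# Hypothetical helper: read `oc get pod` output on stdin, print the name of
# every pod whose STATUS (column 3) is neither Running nor Completed, and
# return non-zero if at least one such pod was found.
unhealthy_pods() {
    awk 'NR > 1 && $3 != "Running" && $3 != "Completed" { print $1; bad = 1 }
         END { exit (bad ? 1 : 0) }'
}

# Usage against a live cluster (commented out; requires a logged-in oc session):
# oc get pod -n "$PROJECT_NAME" | unhealthy_pods || echo "some pods need attention"
```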

Note

For more information about deploying the MongoDB application along with YCSB, refer to the Red Hat documentation at https://www.redhat.com/en/blog/multitenant-deployment-mongodb-using-openshift-container-storage-and-using-ycsb-test-performance.