# OpenShift Container Platform at the edge

Edge computing helps businesses become more proactive and dynamic by deploying applications and IT processing power closer to the devices and sensors that create or consume data, so that they can gather and analyze large data flows and turn them into actionable insights faster. For edge computing to succeed, application development and IT resource management must be consistent, especially when operating hundreds or thousands of locations or clusters.

Edge sites also pose challenges of their own:

- Many edge sites are physically smaller than their core or regional data center counterparts, and installing hardware in a space that was not designed for it must be carefully planned.

- Heat, weather, radio-electrical emissions, lack of perimeter security, and a potentially limited supply of reliable power and cooling for equipment must also be addressed.

- Network connectivity at remote locations can vary greatly and is often slow or unreliable.

- There is minimal to no IT staff on-site to manage the IT solution.

Red Hat OpenShift lets IT functions operate consistently and innovate continuously, regardless of where applications reside today or where they will be placed tomorrow. It extends the capabilities of Kubernetes to smaller-footprint options, including a 3-node cluster, a remote worker node topology, or a combination of both.

# Remote worker nodes at the network edge

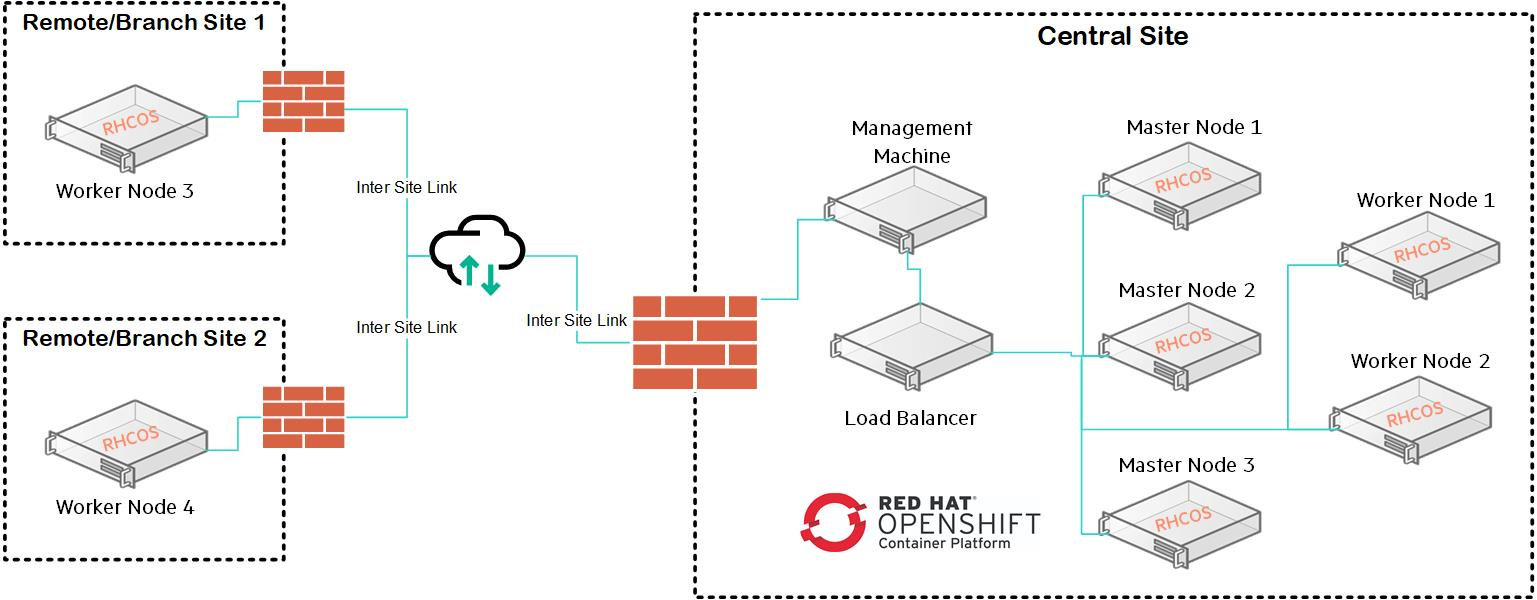

A typical cluster with remote worker nodes combines on-premises master and worker nodes with worker nodes in other locations that connect to the cluster. This deployment pattern has use cases across many industries, such as telecommunications, retail, manufacturing, and government.

# Limitations of remote worker nodes at the network edge

Remote worker nodes can introduce higher latency, intermittent loss of network connectivity, and other issues. The challenges in a cluster with remote worker nodes include:

- Network separation: The OpenShift Container Platform control plane and the remote worker nodes must be able to communicate with each other. Because of the distance between the control plane and the remote worker nodes, network issues could prevent this communication.

- Power outage: Because the control plane and remote worker nodes are in separate locations, a power outage at the remote location or at any point between the two can negatively impact your cluster.

- Latency spikes or temporary reduction in throughput: As with any network, changes in network conditions between your cluster and the remote worker nodes can negatively impact your cluster.

# Three-node edge cluster at the network edge

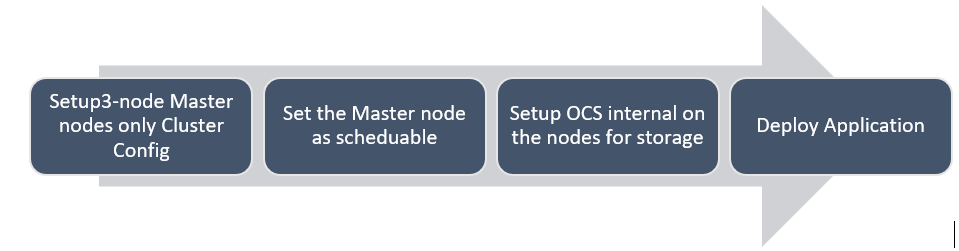

This solution builds the smallest possible cluster that delivers a local control plane, local storage, and compute to meet the requirements of demanding edge workloads while ensuring continuity and delivering true high availability. The design goals are to:

- Continue to fully operate, regardless of WAN connection state.

- Pack this into the smallest footprint possible.

- Be cost effective at scale.

# Deploying a three-node OCP cluster

- Create the install-config.yaml file with the number of worker replicas set to “0”, as shown below.

```yaml
apiVersion: v1
baseDomain: <name of the base domain>
compute:
- hyperthreading: Enabled
  name: worker
  replicas: 0
controlPlane:
  hyperthreading: Enabled
  name: master
  replicas: 3
metadata:
  name: <name of the cluster, same as the new domain under the base domain created>
networking:
  clusterNetworks:
  - cidr: 12.128.0.0/14
    hostPrefix: 23
  networkType: OpenShiftSDN
  serviceNetwork:
  - 172.30.0.0/16
platform:
  none: {}
pullSecret: 'pull secret provided as per the Red Hat account'
sshKey: 'ssh key of the installer VM'
```

NOTE

Refer to the “Setting up installer vm” section to set up an installer VM.
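Before generating manifests, it can help to confirm that install-config.yaml really describes a three-node cluster. The following is a minimal sketch, not part of the solution's playbooks: the function names `section_replicas` and `is_three_node_config` are hypothetical, and the parsing assumes a conventionally indented file in the simple layout shown above rather than arbitrary YAML.

```shell
# Print the replicas value found under a top-level section ("compute" or
# "controlPlane") of an install-config.yaml file.
# Assumption: the file is indented conventionally; this is not a YAML parser.
section_replicas() {
  local section="$1" file="$2"
  awk -v section="$section" '
    # A line starting in column 0 (not a list item or comment) opens a new
    # top-level key; remember whether it is the section we are looking for.
    /^[^ \t#-]/ { in_section = ($1 == (section ":")) }
    in_section && $1 == "replicas:" { print $2; exit }
  ' "$file"
}

# Succeed only when the file requests 0 worker replicas and
# 3 control plane replicas.
is_three_node_config() {
  local file="$1"
  [ "$(section_replicas compute "$file")" = "0" ] &&
    [ "$(section_replicas controlPlane "$file")" = "3" ]
}
```

For example, `is_three_node_config install-config.yaml && echo "three-node layout"` prints the message only when both replica counts match.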

- Execute the following commands on the installer VM to create the manifest files and the ignition files required to install Red Hat OpenShift:

```shell
$ cd $BASE_DIR/installer
$ ansible-playbook playbooks/create_manifest_ignitions.yml
$ sudo chmod +r installer/ignitions/*.ign
```

The ignition files are generated on the installer VM within the folder /opt/hpe/solutions/ocp/hpe-solutions-openshift/DL/scalable/installer/ignitions.
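A quick readability check on the generated files can catch a failed playbook run or a missed `chmod` before the iPXE boot. This is a sketch under assumptions: `check_ignitions` is a hypothetical helper, and the file names bootstrap.ign, master.ign, and worker.ign are the ones the OpenShift installer normally produces, which this document does not list explicitly.

```shell
# Report which expected ignition files are missing or unreadable in a directory.
# Assumption: the playbook yields bootstrap.ign, master.ign and worker.ign.
check_ignitions() {
  local dir="$1" missing=0 name
  for name in bootstrap master worker; do
    if [ ! -r "$dir/$name.ign" ]; then
      echo "missing or unreadable: $dir/$name.ign" >&2
      missing=1
    fi
  done
  # Return 0 when every file is readable, 1 otherwise.
  return "$missing"
}
```

It could be called with the ignitions folder named above as its only argument.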

- Follow the iPXE approach to deploy the three-node cluster, leaving the worker node IP addresses and MAC addresses empty in the “secret.yaml” file.

NOTE

Refer to the “Deploying OCP using ipxe method” section to deploy the OCP cluster. Make sure the worker MAC addresses and IP addresses are left empty before proceeding with the installation.

- Once the cluster is up and running, deploy the sample application as described in the “deploying wordpress” section.
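One way to confirm that the cluster is up before deploying an application is to check that all three nodes report `Ready`. The sketch below assumes the usual column layout of `oc get nodes --no-headers` (node name first, status second); `three_nodes_ready` is a hypothetical helper that reads that output on standard input.

```shell
# Read `oc get nodes --no-headers` output on stdin and succeed only if exactly
# three nodes are listed and each reports STATUS "Ready" (second column).
three_nodes_ready() {
  awk '
    NF { total++; if ($2 == "Ready") ready++ }   # skip blank lines
    END { exit !(total == 3 && ready == 3) }
  '
}

# Usage against a live cluster (requires a logged-in oc client):
#   oc get nodes --no-headers | three_nodes_ready && echo "all three nodes are Ready"
```

A node shown as `NotReady` or `Ready,SchedulingDisabled` makes the check fail, which is the conservative choice for a cluster that has no separate workers.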

NOTE

Additional worker nodes can be added by setting the masters schedulable field (`mastersSchedulable`) to “false” in the scheduler file. The scheduler file can be edited with the command “oc edit scheduler”.
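As a non-interactive alternative to editing the scheduler file by hand, the same field can be set with a merge patch. This is a sketch rather than the solution's documented procedure: `masters_schedulable_patch` is a hypothetical helper that only builds the patch text, and the `oc patch` call in the comment assumes the cluster-wide Scheduler resource is named `cluster`, as it is in a standard OpenShift 4 installation.

```shell
# Build the merge patch that sets spec.mastersSchedulable on the Scheduler
# resource. Accepts only "true" or "false".
masters_schedulable_patch() {
  case "$1" in
    true|false)
      printf '{"spec":{"mastersSchedulable":%s}}\n' "$1"
      ;;
    *)
      echo "usage: masters_schedulable_patch true|false" >&2
      return 1
      ;;
  esac
}

# Usage against a live cluster (requires cluster-admin rights):
#   oc patch schedulers.config.openshift.io cluster --type merge \
#     --patch "$(masters_schedulable_patch false)"
```

Keeping the patch construction separate from the `oc` call makes it easy to review the exact change before applying it.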