# Solution physical components

This section outlines the hardware, software, and service components utilized in this solution.

# Hardware

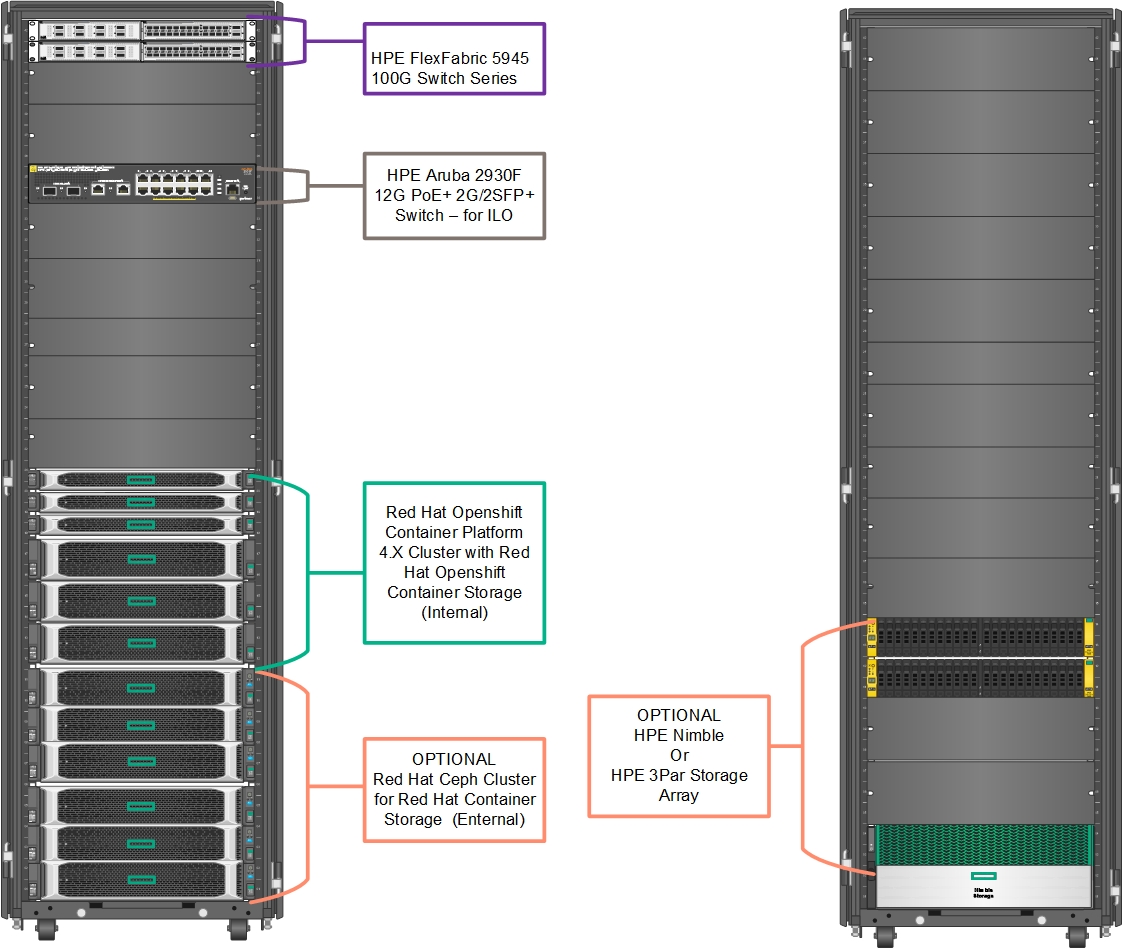

Figure 4 shows the physical configuration of the racks and the storage devices as deployed in the test environment. The layout can be changed to suit the customer's requirements.

Figure 4. Hardware layout within the rack along with HPE Storage systems

The configuration outlined in this document follows the design guidance of the HPE Converged Architecture 750 Foundation model, which offers faster deployment and a tested firmware recipe.

The installation user can customize the HPE components throughout this stack to match specific IT and workload requirements, or build the solution from individual components rather than using HPE CS750.

Table 1 highlights the individual components and their quantities as deployed within the solution.

Table 1. Components utilized in the creation of this solution.

| Component | Quantity | Description |
|---|---|---|
| HPE ProLiant DL360 Gen10 server | 3 | OpenShift master and bootstrap/compute node |
| HPE ProLiant DL380 Gen10 server | 3 | OpenShift worker nodes |
| HPE ProLiant DL380 Gen10 server | 3 | Ceph storage cluster nodes – External storage mode (Optional) |
| HPE Nimble storage (AF40) | 1 | External iSCSI storage (Optional – Only for External storage mode) |
| HPE FlexFabric 2-Slot Switch (Aruba 5945 switch) | 1 | Network switch for datacenter network |
| HPE Aruba 2930F switch | 1 | Network switch for iLO Management network |
| HPE ProLiant DL380 Gen10 server | 1 | Hosts the virtual machines for the installer machine and the HAProxy load balancer (expected to be available in the customer environment) |
| HPE 3PAR StoreServ | 1 | One (1) HPE 3PAR array |

NOTE

The HPE Storage systems listed in Table 1 are representative only. Size the storage according to the deployment requirements.

# Software

Table 2 lists the versions of the major software used in the creation of this solution. The installation user should ensure that they download or have access to this software, and that the appropriate subscriptions and licenses are in place for the planned time frame.

Table 2. Major software versions used in the creation of this solution

| Component | Version |
|---|---|
| Red Hat Enterprise Linux CoreOS (RHCOS) | 4.9 |
| Red Hat OpenShift Container Platform | 4 |
| HPE Nimble OS | 5.0.8 |
| HPE 3PAR OS | 3.3.1 |

NOTE

The latest sub-version of each component listed in Table 2 should be installed.

When virtualized nodes are used, the software versions used in the creation of this solution are shown in Table 3.

Table 3. Software versions used with virtualized implementations

| Component | Version |
|---|---|
| VMware vSphere | ESXi 6.7 U2 (Build: 13981272) |
| VMware vCenter Server Appliance | 6.7 Update 2c (Build: 14070457) |

Software installed on the installer machine is shown in Table 4.

Table 4. Software installed on the installer machine

| Component | Version |
|---|---|
| Ansible | 2.9 |
| Python | 3.6 |
| Java | 1.8 |
| OpenShift Container Platform packages | 4.9 |
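The versions in Table 4 can be compared against what is actually installed on the installer machine. The following is a minimal sketch, not part of the original solution: the helper names are hypothetical, and the sample strings are only assumed examples of what `ansible --version`, `python3 --version`, and `java -version` typically print.

```python
import re

# Major.minor versions taken from Table 4.
TABLE_4 = {"ansible": (2, 9), "python": (3, 6), "java": (1, 8)}


def parse_version(text):
    """Return the first major.minor pair found in text as a tuple of ints."""
    match = re.search(r"(\d+)\.(\d+)", text)
    if match is None:
        raise ValueError("no version number found in: %r" % text)
    return int(match.group(1)), int(match.group(2))


def matches_table(tool, version_output):
    """True if the reported major.minor version equals the Table 4 entry."""
    return parse_version(version_output) == TABLE_4[tool]


# Assumed sample outputs, for illustration only.
samples = {
    "ansible": "ansible 2.9.27",
    "python": "Python 3.6.8",
    "java": 'openjdk version "1.8.0_312"',
}
for tool, output in samples.items():
    print(tool, "OK" if matches_table(tool, output) else "MISMATCH")
```

In practice the strings would come from running each tool on the installer machine; note that `java -version` writes its output to standard error rather than standard output.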

# Services

This document is built with assumptions about services and network ports available within the implementation environment. This section discusses those assumptions.

Table 5 lists the services required in this solution and provides a high-level explanation of their function.

Table 5. Services used in the creation of this solution.

| Service | Description/Notes |
|---|---|
| DNS | Provides name resolution on management and data center networks |
| DHCP | Provides IP address leases on PXE, management, and usually data center networks |
| NTP | Ensures consistent time across the solution stack |
| PXE | Enables booting of operating systems |

# DNS

Domain Name Services must be in place for the management and data center networks. Ensure that both forward and reverse lookups are working for all hosts.
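One way to confirm that forward and reverse lookups agree is to resolve each host name to an address and then resolve that address back to a name. The sketch below uses only the Python standard library; the function name and the host names in the usage comment are hypothetical, and it assumes each host has a single A record with a matching PTR record.

```python
import socket


def _normalize(name):
    """Lower-case a host name and drop any trailing dot."""
    return name.rstrip(".").lower()


def check_dns(hostname, forward=socket.gethostbyname, reverse=socket.gethostbyaddr):
    """Resolve hostname to an IP, then resolve that IP back to a name.

    The forward and reverse resolvers are parameters so the check can be
    exercised without a live DNS server.
    """
    result = {"hostname": hostname, "ip": None, "reverse_name": None, "ok": False}
    try:
        result["ip"] = forward(hostname)
        # gethostbyaddr returns (primary_name, aliases, addresses).
        result["reverse_name"] = reverse(result["ip"])[0]
    except OSError:
        # socket.gaierror and socket.herror are both subclasses of OSError.
        return result
    result["ok"] = _normalize(result["reverse_name"]) == _normalize(hostname)
    return result


# Example usage (hypothetical host names):
# for host in ["master0.ocp.example.com", "worker0.ocp.example.com"]:
#     print(check_dns(host))
```

A host whose `ok` field is `False` either failed to resolve in one direction or resolved back to a different name.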

# DHCP

DHCP should be present and able to provide IP address leases on the PXE, management, and data center networks.

# NTP

A Network Time Protocol (NTP) server should be available to hosts within the solution environment.
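Reachability of the NTP server can be checked from any host with a simple SNTP client query. This is a minimal sketch under stated assumptions: the function names are hypothetical, the server address in the usage comment is a placeholder, and the offset it reports ignores network delay, so it is only a rough sanity check rather than a replacement for a real NTP client.

```python
import socket
import struct
import time

# Seconds between the NTP epoch (1900-01-01) and the Unix epoch (1970-01-01).
NTP_EPOCH_OFFSET = 2208988800


def build_sntp_request():
    """48-byte client request: leap indicator 0, version 3, mode 3 (client)."""
    return b"\x1b" + 47 * b"\0"


def parse_transmit_time(packet):
    """Return the server transmit timestamp as Unix seconds."""
    if len(packet) < 48:
        raise ValueError("NTP packet is shorter than 48 bytes")
    # The transmit timestamp occupies bytes 40-47: seconds, then fraction.
    seconds, fraction = struct.unpack("!II", packet[40:48])
    return seconds - NTP_EPOCH_OFFSET + fraction / 2**32


def rough_clock_offset(server, timeout=2.0):
    """Server time minus local time, in seconds, ignoring network delay."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(build_sntp_request(), (server, 123))
        packet, _ = sock.recvfrom(512)
    return parse_transmit_time(packet) - time.time()


# Example usage (placeholder address):
# print(rough_clock_offset("192.0.2.10"))
```

A timeout indicates that the server is unreachable on UDP port 123; a large offset suggests that the host is not yet synchronized.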

# PXE

Because all nodes in this solution are booted using PXE, a properly configured PXE server is essential.

# Network port

The ports listed in Table 6 must be reachable so that cluster components can communicate with each other. The ports currently in use can be listed on the bootstrap, master, and worker nodes by running the following command.

> netstat -tupln
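The output of this command can be reduced to a list of protocol and port pairs for easier comparison across nodes. The following is a minimal sketch: the function name is hypothetical, and the sample text is an invented illustration of the usual column layout, not output captured from this solution.

```python
def parse_listening_ports(netstat_output):
    """Return a sorted list of (protocol, port) pairs from netstat text."""
    found = set()
    for line in netstat_output.splitlines():
        fields = line.split()
        # Data rows start with tcp, tcp6, udp, or udp6; skip header lines.
        if len(fields) < 4 or not fields[0].startswith(("tcp", "udp")):
            continue
        protocol = fields[0][:3]  # fold tcp6/udp6 into tcp/udp
        # The local address is the fourth column, e.g. 0.0.0.0:22 or :::6443.
        port_text = fields[3].rsplit(":", 1)[-1]
        if port_text.isdigit():
            found.add((protocol, int(port_text)))
    return sorted(found)


# Invented sample in the usual netstat layout, for illustration only.
SAMPLE = """\
Active Internet connections (only servers)
Proto Recv-Q Send-Q Local Address    Foreign Address  State   PID/Program name
tcp        0      0 0.0.0.0:22       0.0.0.0:*        LISTEN  1001/sshd
tcp6       0      0 :::6443          :::*             LISTEN  2002/kube-apiserver
udp        0      0 0.0.0.0:4789     0.0.0.0:*                -
"""

print(parse_listening_ports(SAMPLE))
```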

Table 6 lists the network ports used by the services under OpenShift Container Platform 4.9.

Table 6. List of network ports.

| Protocol | Port Number/Range | Service Type | Other details |
|---|---|---|---|
| TCP | 80 | HTTP traffic | The machines that run the Ingress router pods, compute, or worker by default. |
| TCP | 443 | HTTPS traffic | |
| TCP | 2379-2380 | etcd server, peer and metrics ports | |
| TCP | 6443 | Kubernetes API | The Bootstrap machine and masters. |
| TCP | 9000-9999 | Host level services, including the node exporter on ports 9100-9101 and the Cluster Version Operator on port 9099. | |
| TCP | 10249-10259 | The default ports that Kubernetes reserves | |
| TCP | 10256 | openshift-sdn | |
| TCP | 22623 | Machine Config Server | The Bootstrap machine and masters. |
| UDP | 4789 | VXLAN and GENEVE | |
| UDP | 6081 | VXLAN and GENEVE | |
| UDP | 9000-9999 | Host level services, including the node exporter on ports 9100-9101. | |
| UDP | 30000-32767 | Kubernetes NodePort | |
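The entries in Table 6 can be encoded as data to identify which service a given port belongs to, and a TCP connection attempt can confirm that a port is reachable from another host. This is a sketch only: the function names are hypothetical, the service labels are shortened from the table, and the host name in the usage comment is a placeholder.

```python
import socket

# (protocol, first port, last port, service) rows taken from Table 6.
TABLE_6 = [
    ("tcp", 80, 80, "HTTP traffic"),
    ("tcp", 443, 443, "HTTPS traffic"),
    ("tcp", 2379, 2380, "etcd server, peer and metrics ports"),
    ("tcp", 6443, 6443, "Kubernetes API"),
    ("tcp", 9000, 9999, "Host level services"),
    ("tcp", 10249, 10259, "Default ports that Kubernetes reserves"),
    ("tcp", 10256, 10256, "openshift-sdn"),
    ("tcp", 22623, 22623, "Machine Config Server"),
    ("udp", 4789, 4789, "VXLAN and GENEVE"),
    ("udp", 6081, 6081, "VXLAN and GENEVE"),
    ("udp", 9000, 9999, "Host level services"),
    ("udp", 30000, 32767, "Kubernetes NodePort"),
]


def services_for(protocol, port):
    """Return every Table 6 service whose range covers the given port."""
    return [
        service
        for proto, first, last, service in TABLE_6
        if proto == protocol.lower() and first <= port <= last
    ]


def tcp_port_open(host, port, timeout=2.0):
    """True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


# Example usage (placeholder host name):
# print(services_for("tcp", 6443), tcp_port_open("api.ocp.example.com", 6443))
```

The connection test applies to TCP only; UDP is connectionless, so a successful send does not prove that a UDP port is open.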

For more information on the network port requirements for Red Hat OpenShift 4, refer to the documentation from Red Hat at