# Solution storage

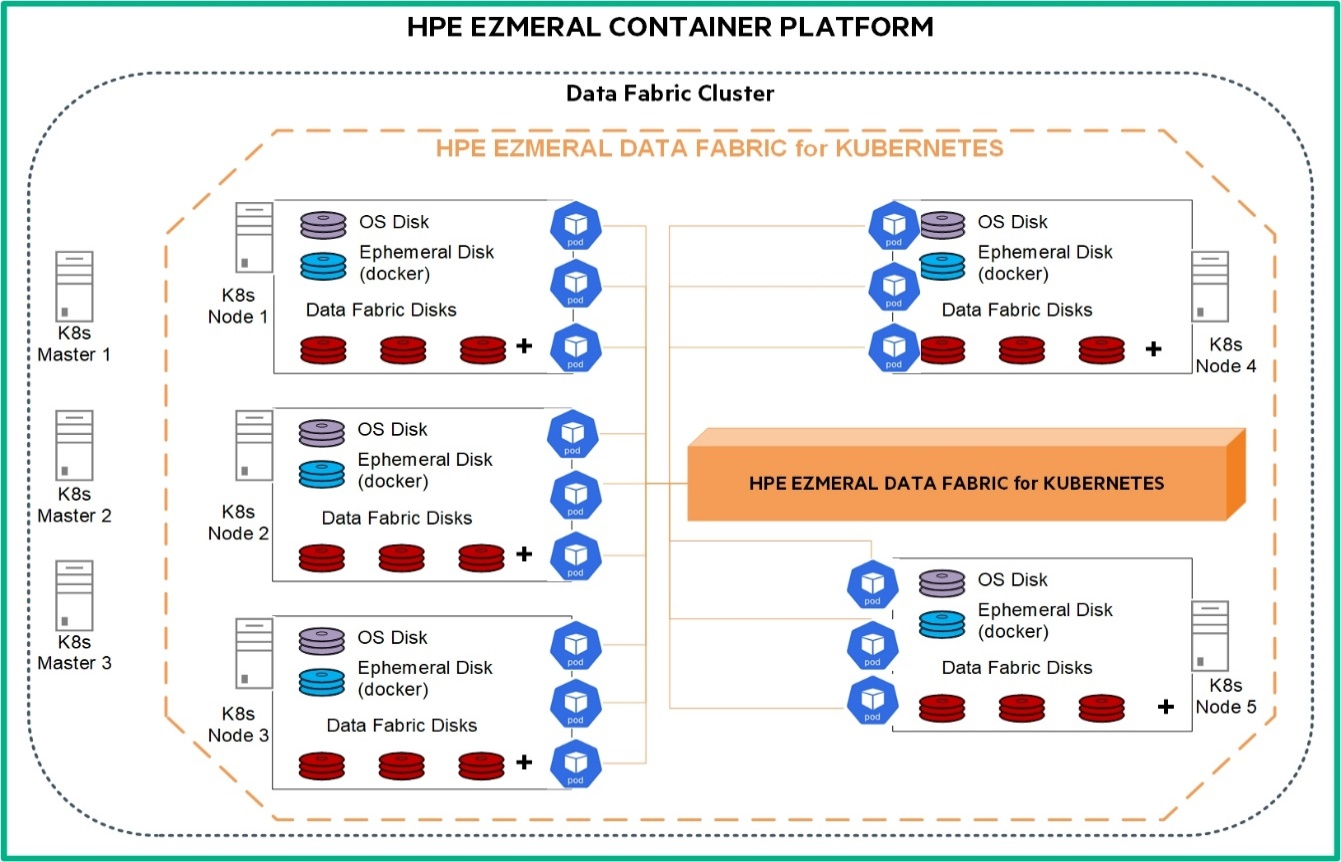

HPE Ezmeral Data Fabric for Kubernetes lets you run HPE Data Fabric services on top of Kubernetes as a set of pods. It allows you to create Data Platform Clusters for storing data and Tenants for running applications, such as Spark jobs, inside pods. With both the Tenant components and the Data Platform Cluster components installed, the HPE Data Fabric platform runs as a fully native Kubernetes application. Deploying the Data Platform Cluster as part of HPE Data Fabric for Kubernetes provides the following benefits:

- Independent and elastic scaling of storage and compute
- Ability to run different versions of Spark applications using the same data platform
- Deployment of multiple environments with resource isolation and sharing as required

The HPE Synergy D3940 Storage Module provides solid-state drives and hard disk drives to local systems, where they are consumed by HPE Ezmeral Container Platform as ephemeral disk for Docker and, optionally, by compute nodes as boot devices. A Kubernetes storage cluster running on HPE Synergy D3940 storage dynamically provisions Persistent Volumes (PVs) for containers using the HPE CSI Driver.
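To illustrate dynamic provisioning, the following minimal sketch builds a PersistentVolumeClaim manifest of the kind a container workload would submit to request a PV. The helper `build_pvc`, the claim name `demo-data`, and the storage class name `hpe-standard` are assumptions made for illustration only; substitute the storage class that is actually backed by the HPE CSI Driver in your environment.

```python
import json


def build_pvc(name, size_gib, storage_class, namespace="default"):
    """Return a PersistentVolumeClaim manifest as a plain dict.

    The storage class passed in is assumed to be one that is served by
    a CSI driver capable of dynamic provisioning.
    """
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # ReadWriteOnce: the volume is mounted read-write by a single node.
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": f"{size_gib}Gi"}},
        },
    }


if __name__ == "__main__":
    # "demo-data" and "hpe-standard" are hypothetical names.
    pvc = build_pvc("demo-data", 50, "hpe-standard")
    print(json.dumps(pvc, indent=2))
```

Because Kubernetes accepts manifests in JSON as well as YAML, the printed output could be saved to a file and applied with `kubectl apply -f`.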

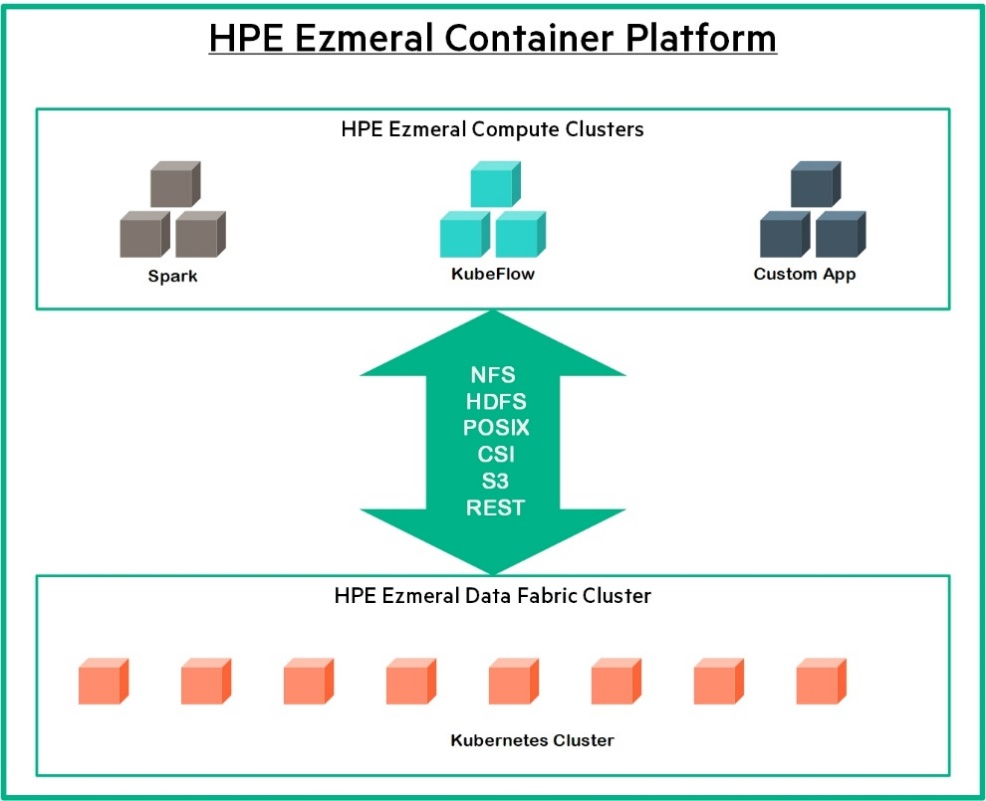

The solution components of HPE Ezmeral Data Fabric for Kubernetes are described at a high level in Figure 9.

Figure 9. High-level HPE Ezmeral Data Fabric for Kubernetes architecture


By default, HPE Data Platform Clusters on Kubernetes require a minimum of 5 nodes, each with at least 16 cores, 128 GB of memory, and 500 GB of ephemeral storage available to the OS. In addition, three 500 GB disks are required for configuring storage for HPE Data Fabric for Kubernetes.
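The minimums above can be checked against a planned node inventory before deployment. The following is a minimal sketch, not an HPE-provided tool: the `Node` record and the `check_cluster` helper are hypothetical, and the additional data disks are left out of the check.

```python
from dataclasses import dataclass

# Minimums taken from the sizing guidance in this section.
MIN_NODES = 5
MIN_CORES = 16
MIN_MEMORY_GB = 128
MIN_EPHEMERAL_GB = 500


@dataclass
class Node:
    """Hypothetical inventory record for one candidate node."""
    name: str
    cores: int
    memory_gb: int
    ephemeral_gb: int


def node_shortfalls(node):
    """Return a list of human-readable problems for a single node."""
    problems = []
    if node.cores < MIN_CORES:
        problems.append(f"{node.name}: {node.cores} cores < {MIN_CORES}")
    if node.memory_gb < MIN_MEMORY_GB:
        problems.append(f"{node.name}: {node.memory_gb} GB memory < {MIN_MEMORY_GB} GB")
    if node.ephemeral_gb < MIN_EPHEMERAL_GB:
        problems.append(
            f"{node.name}: {node.ephemeral_gb} GB ephemeral < {MIN_EPHEMERAL_GB} GB"
        )
    return problems


def check_cluster(nodes):
    """Return all problems found; an empty list means the minimums are met."""
    problems = []
    if len(nodes) < MIN_NODES:
        problems.append(f"cluster: {len(nodes)} nodes < {MIN_NODES}")
    for node in nodes:
        problems.extend(node_shortfalls(node))
    return problems


if __name__ == "__main__":
    inventory = [Node(f"node{i}", 16, 128, 500) for i in range(1, 6)]
    inventory[2].memory_gb = 64  # deliberately undersized for the demo
    for problem in check_cluster(inventory):
        print(problem)
```

Returning a list of problems rather than a single boolean makes it possible to report every shortfall in one pass.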

# Configuring solution storage

The HPE Synergy D3940 Storage Module provides the SSDs used for node storage.

Figure 10 describes the logical storage layout used in the solution. The HPE Synergy D3940 Storage Module can present storage either as SAS volumes or as JBOD. In this solution, JBOD is used.

Figure 10. Logical storage layout within the solution