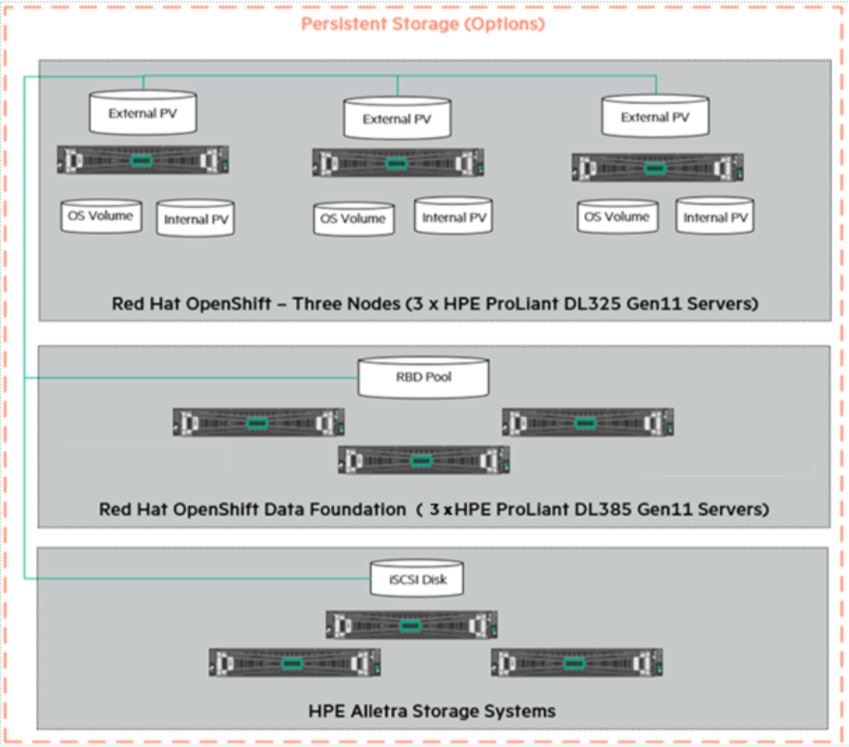

Storage Options

Alletra Storage MP

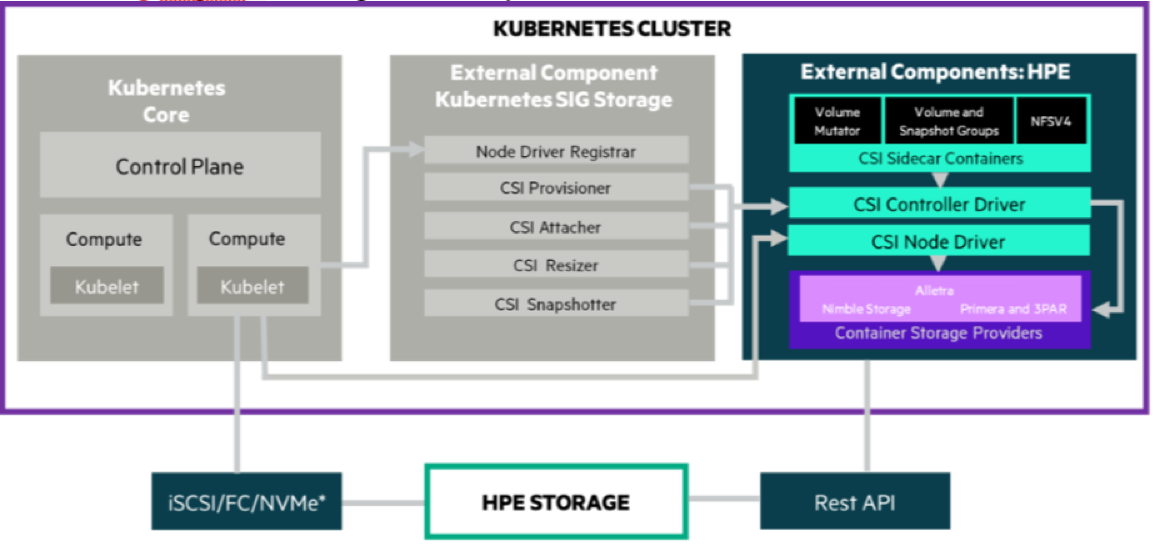

HPE CSI Driver Architecture

Figure 16 illustrates the HPE CSI Driver architecture.

Figure 16: CSI Driver Architecture

The OpenShift Container Platform 4.16 cluster comprises master and worker nodes (physical and virtual) with CoreOS deployed as the operating system. The iSCSI interface configured on the host nodes connects the cluster to the HPE Alletra array. When the HPE CSI Driver is deployed, the CSI controller, the CSI driver, the 3PAR CSP, and the Nimble CSP are deployed; the CSPs communicate with the HPE Alletra array through REST APIs. The associated features, such as the CSI provisioner and the CSI attacher, are configured through the StorageClass.

Deploying HPE CSI Driver for HPE Alletra storage on RHOCP 4.16

This section describes how to deploy the HPE CSI Driver for HPE Alletra storage on an existing RHOCP 4.16 cluster.

Prerequisites:

Before configuring the HPE CSI Driver, the following prerequisites must be met:

RHOCP 4.16 must be successfully deployed, and the console must be accessible.

iSCSI interface must be configured on the HPE Alletra Storage array.

Additional iSCSI network interfaces must be configured on the physical worker nodes.

The hpe-csi-scc.yaml file must be deployed to create the required Security Context Constraints (SCC).

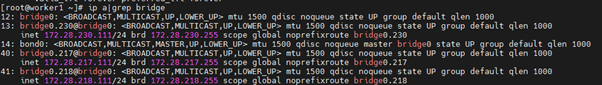

Configuring iSCSI interface on worker nodes

The RHOCP 4.16 cluster comprises the master nodes and physical worker nodes, with RHEL 9.4 deployed as the operating system. The iSCSI interface is configured on the host nodes to connect the cluster to the HPE Alletra array. In addition to the host nodes, an iSCSI interface must be configured on all the worker nodes (physical and virtual) to establish the connection between the RHOCP cluster and the HPE Alletra arrays.

To configure the iSCSI interfaces on physical RHEL worker nodes:

- Configure the iSCSI_A connection as the storage interface and the iSCSI_B connection as an additional storage interface for redundancy.

For example, Figure 17 shows the iSCSI_A and iSCSI_B interface connections configured on the worker1 node.

FIGURE 17: iSCSI_A and iSCSI_B interface connections

Creating namespace

To create a namespace (in this case, hpe-csi):

Open the Red Hat OpenShift Container Platform Console in a supported web browser.

Click Administration → Namespaces on the left pane.

Click Create Namespace.

In the Create Namespace dialog box, enter hpe-csi as the name.

Click Create.

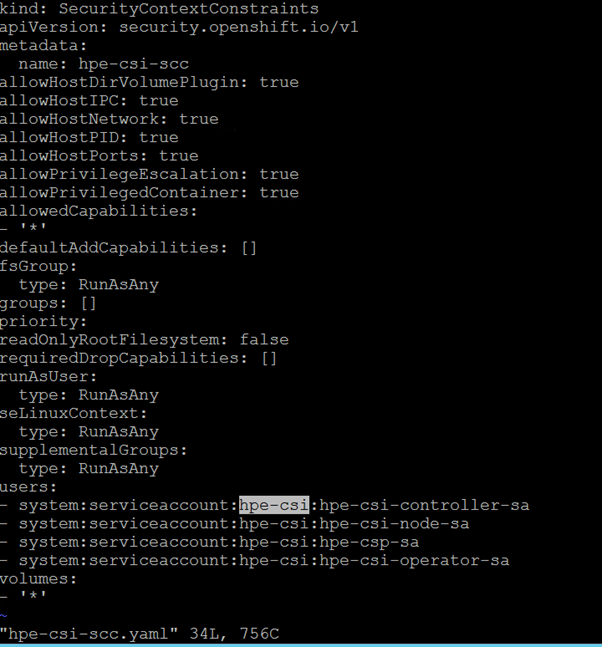

Deploying Security Context Constraints (SCC)

The HPE CSI Driver must run in privileged mode to access the host ports and the host network and to mount host path volumes. Before deploying the HPE CSI Driver Operator on the RHOCP cluster, deploy an SCC that allows the HPE CSI Driver to run with these privileges.

Prerequisites:

- Ensure that the hpe-csi-scc.yaml file is accessible at the following URL:

https://scod.hpedev.io/partners/redhat_openshift/examples/scc/hpe-csi-scc.yaml

To deploy SCC:

- On the installer VM, download the hpe-csi-scc.yaml file using the following command:

$ curl -sL https://scod.hpedev.io/partners/redhat_openshift/examples/scc/hpe-csi-scc.yaml > hpe-csi-scc.yaml

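Edit the relevant parameters, such as the project name or namespace, in the hpe-csi-scc.yaml file.

Replace my-hpe-csi-driver-operator with the name of the project where the CSI Operator is being deployed (in this case, hpe-csi). If the hpe-csi project does not exist yet, the first of the following commands creates it; the second command performs the replacement: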

$ oc new-project hpe-csi --display-name="HPE CSI Driver for Kubernetes"

$ sed -i'' -e 's/my-hpe-csi-driver-operator/hpe-csi/g' hpe-csi-scc.yaml

- Save the file.

The following figure shows the parameter to be edited (the name of the project where the HPE CSI Driver Operator is deployed):

FIGURE 18: Editing the hpe-csi-scc.yaml file

- Deploy the SCC using the following command and check the output:
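The sed substitution in this step can also be expressed as a small Python helper, which is convenient when the retargeting is scripted or checked in automation. This is a minimal sketch, not part of the HPE tooling: it assumes the placeholder string is my-hpe-csi-driver-operator (taken from the sed command) and that the target project is hpe-csi; the function names are hypothetical.

```python
from pathlib import Path

# Placeholder project name used in the downloaded SCC file (taken from the sed command).
PLACEHOLDER = "my-hpe-csi-driver-operator"


def retarget_scc(text: str, project: str = "hpe-csi") -> str:
    """Return `text` with every placeholder occurrence replaced by `project`."""
    if PLACEHOLDER not in text:
        # Either the file was already edited or it is not the expected SCC template.
        raise ValueError(f"{PLACEHOLDER!r} not found in SCC text")
    return text.replace(PLACEHOLDER, project)


def retarget_scc_file(path: Path, project: str = "hpe-csi") -> None:
    """Edit the file in place, like sed -i'' -e 's/<placeholder>/<project>/g'."""
    path.write_text(retarget_scc(path.read_text(), project))
```

Usage: `retarget_scc_file(Path("hpe-csi-scc.yaml"))`. Unlike the sed command, the helper fails loudly when the placeholder is missing, which guards against editing an already-edited file by mistake.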

$ oc create -f hpe-csi-scc.yaml

The following output is displayed:

securitycontextconstraints.security.openshift.io/hpe-csi-scc created

Installing and configuring HPE CSI Driver

NOTE

This solution uses HPE CSI Driver version 2.4.2 to deploy HPE Alletra Storage on RHOCP 4.16.

Prerequisites:

Before installing the HPE CSI Driver from the Red Hat OpenShift Container Platform Console:

- Create a namespace for the HPE CSI Driver.

- Deploy the SCC for the created namespace.

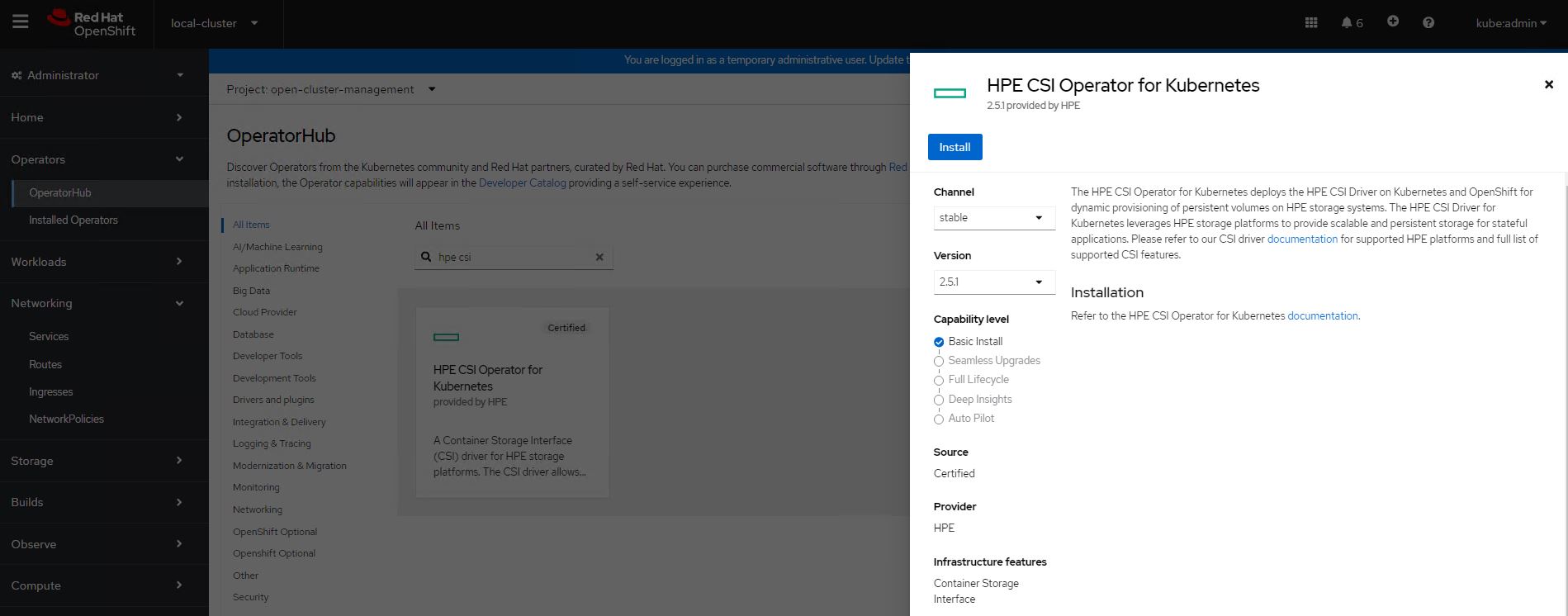

Installing HPE CSI Driver Operator using Red Hat OperatorHub

To install HPE CSI Driver Operator from the Red Hat OperatorHub:

Log in to the Red Hat OpenShift Container Platform Console.

Navigate to Operators → OperatorHub.

Search the list of operators for HPE CSI Driver Operator and click HPE CSI Driver Operator.

On the HPE CSI Operator for Kubernetes page, click Install.

FIGURE 19: HPE CSI Driver Operator search

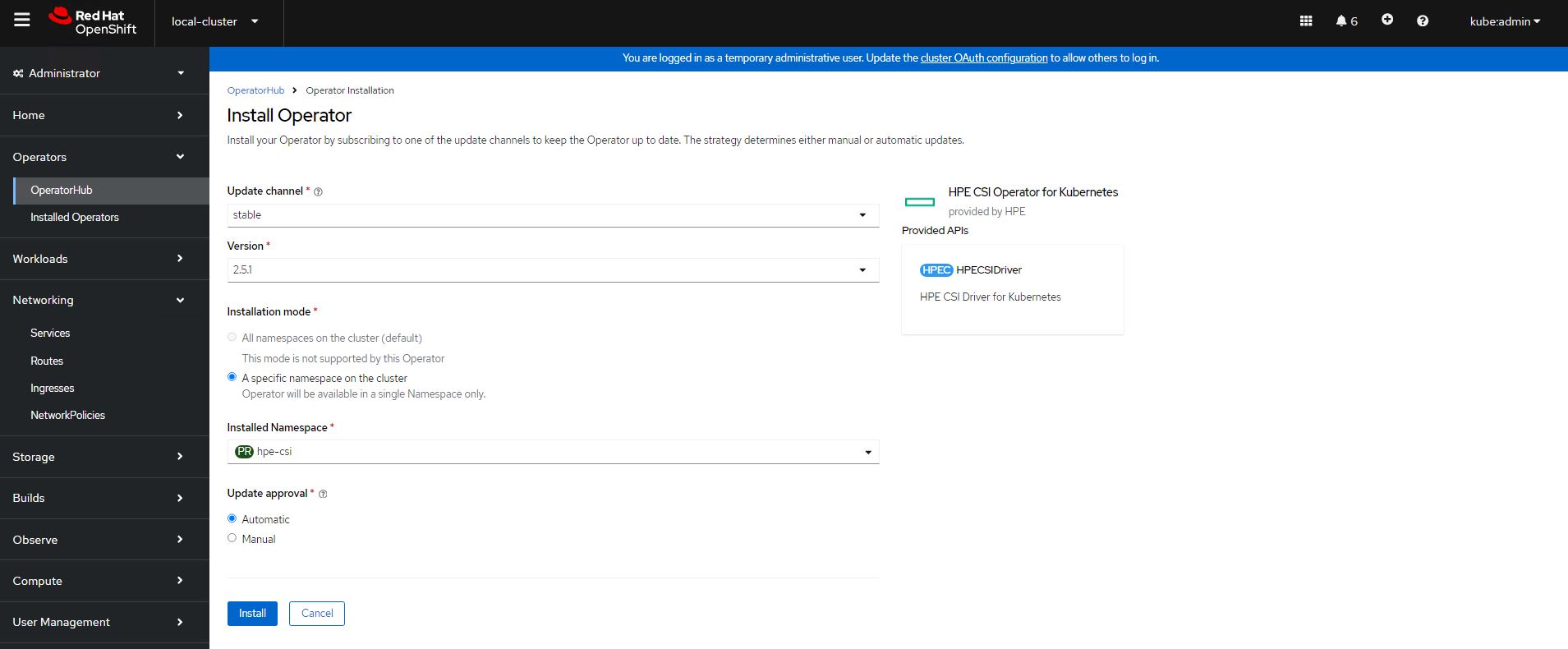

On the Create Operator Subscription page, select the appropriate options:

Select "A specific namespace on the cluster" in the Installation Mode option.

Select the appropriate namespace (in this case, hpe-csi) in the Installed Namespace option.

Select "stable" in the Update Channel option.

Select "Automatic" in the Approval Strategy option.

FIGURE 20: Create Operator Subscription

- Click Install.

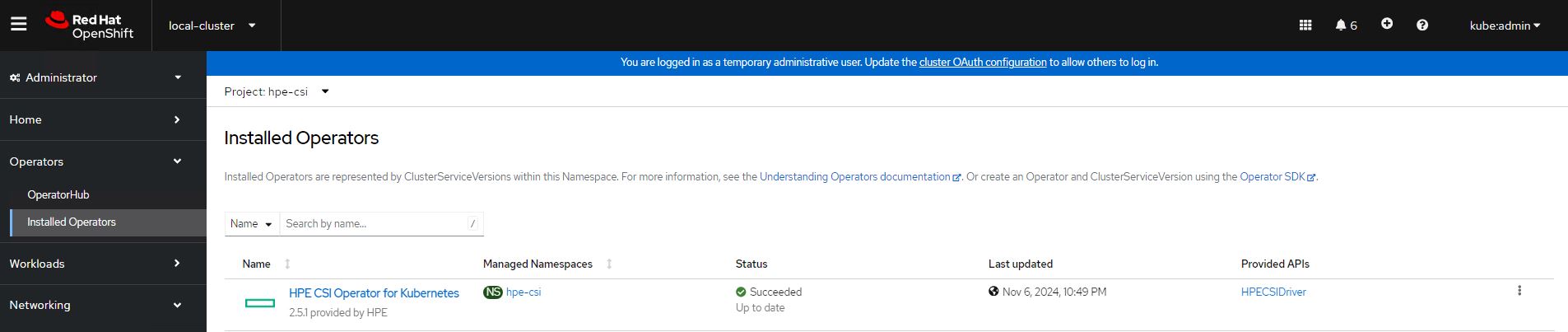

The Installed Operators page is displayed with the status of the operator.

FIGURE 21: Installed Operators

Creating HPE CSI Driver

The HPE CSI Driver is a multi-vendor, multi-backend driver in which each backend implementation has its own Container Storage Provider (CSP). The HPE CSI Driver for Kubernetes uses the CSP to perform data management operations on storage resources, such as searching for a logical unit number (LUN). Any vendor or project can develop its own CSP using the CSP specification, which enables third parties to integrate their storage solutions into Kubernetes while the driver takes care of the Kubernetes intricacies.

To create the HPE CSI Driver:

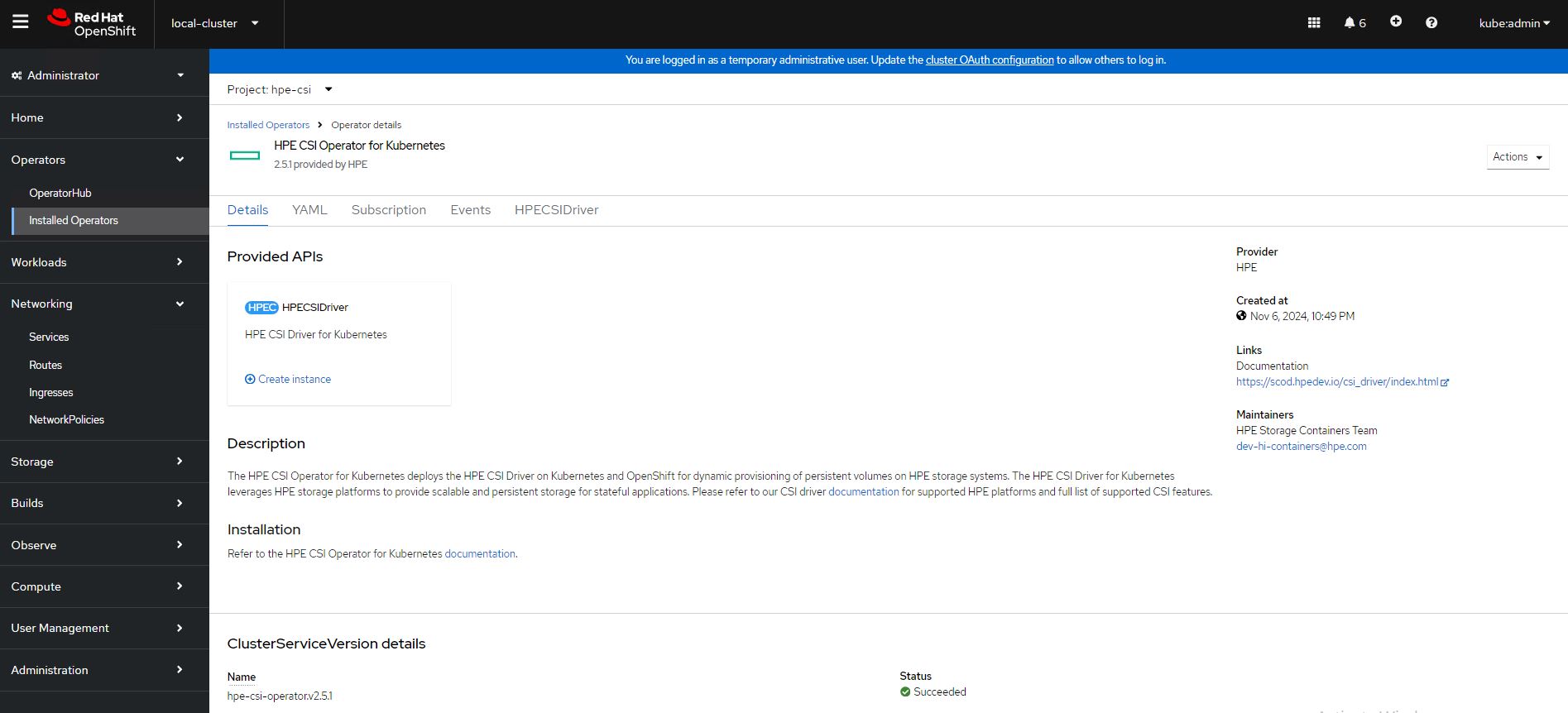

Log in to the Red Hat OpenShift Container Platform Console.

Navigate to Operators → Installed Operators on the left pane to view the installed operators.

On the Installed Operators page, select hpe-csi from the Project drop-down list to switch to the hpe-csi project.

In the hpe-csi project, select the HPECSIDriver tab.

Click Create HPECSIDriver.

Click Create.

FIGURE 22: HPE CSI Driver creation

Verifying HPECSIDriver configuration

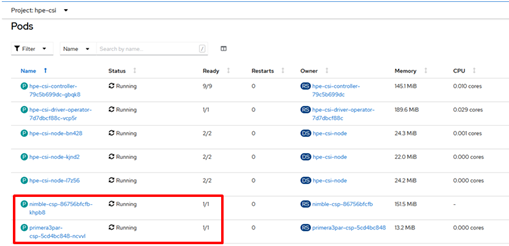

After the HPECSIDriver is deployed, its pods, such as hpe-csi-controller, hpe-csi-driver, primera3par-csp, and nimble-csp, are displayed on the Pods page.

FIGURE 23: Deployment pods for HPECSIDriver

NOTE

The Nimble Storage CSP also supports HPE Alletra 6000.

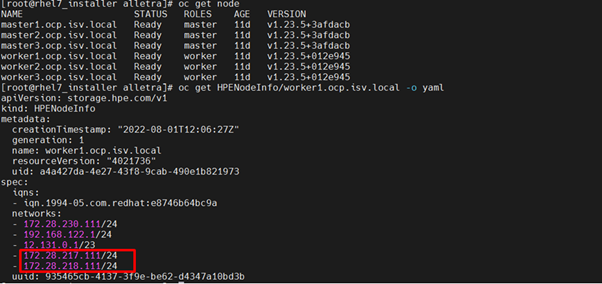

To verify the HPE CSI node information:

- On the installer VM, check the HPENodeInfo resources and the network status of the worker nodes with the following commands:

$ oc get HPENodeInfo

$ oc get HPENodeInfo/<workernode fqdn> -o yaml

The following output is displayed:

FIGURE 24: HPENodeInfo on the cluster

Creating HPE Alletra StorageClass

After the HPE CSI Driver is deployed, two additional objects, a Secret and a StorageClass, must be created before persistent storage can be provisioned.

Creating Alletra Secret

To create a new Secret via CLI that will be used with HPE Alletra:

- Add the name, namespace, backend username, backend password, and backend IP address to the Alletra-secret.yaml file, and save it for use by the CSP.

The following details are provided in the Alletra-secret.yaml file:

apiVersion: v1
kind: Secret
metadata:
  name: alletra-secret
  namespace: hpe-csi
stringData:
  serviceName: alletra-csp-svc
  servicePort: "8080"
  backend: alletramgmtip # replace with the HPE Alletra management IP address
  username: admin
  password: admin

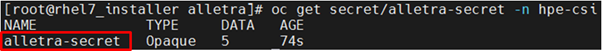

- Create the Secret from the Alletra-secret.yaml file with the following command:

$ oc create -f Alletra-secret.yaml

- The following output shows the status of alletra-secret in the hpe-csi namespace.

FIGURE 25: HPE Alletra Secret status
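If the Secret is generated by automation rather than edited by hand, building it as data avoids YAML indentation mistakes, and `oc create -f` accepts JSON manifests as well as YAML. The following is a hedged sketch: the function names and the output file name alletra-secret.json are hypothetical, and the default field values simply mirror the Alletra-secret.yaml example.

```python
import json


def alletra_secret(backend: str, username: str, password: str, *,
                   name: str = "alletra-secret", namespace: str = "hpe-csi",
                   service_name: str = "alletra-csp-svc",
                   service_port: str = "8080") -> dict:
    """Build the Secret consumed by the HPE CSI Driver CSP as a Python dict."""
    string_data = {
        "serviceName": service_name,
        "servicePort": service_port,  # stringData values must be strings, hence "8080"
        "backend": backend,           # HPE Alletra management IP address
        "username": username,
        "password": password,
    }
    non_strings = [key for key, value in string_data.items() if not isinstance(value, str)]
    if non_strings:
        raise TypeError(f"stringData values must be strings: {non_strings}")
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "stringData": string_data,
    }


def write_manifest(manifest: dict, path: str) -> None:
    """Write the manifest as JSON so that `oc create -f <path>` can consume it."""
    with open(path, "w", encoding="utf-8") as fh:
        json.dump(manifest, fh, indent=2)
```

Usage: `write_manifest(alletra_secret("<alletra_mgmt_ip>", "admin", "<password>"), "alletra-secret.json")`, followed by `oc create -f alletra-secret.json`.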

Creating StorageClass with HPE Alletra Secret

This section describes how to create a new StorageClass using the existing alletra-secret and the necessary StorageClass parameters.

To create a new StorageClass using alletra-secret:

- Edit the following parameters in the Alletra-storageclass.yaml file:

apiVersion: storage.k8s.io/v1

kind: StorageClass

metadata:

annotations:

storageclass.kubernetes.io/is-default-class: "true"

name: Alletra-storageclass

provisioner: csi.hpe.com

parameters:

csi.storage.k8s.io/fstype: xfs

csi.storage.k8s.io/controller-expand-secret-name: alletra-secret

csi.storage.k8s.io/controller-expand-secret-namespace: hpe-csi

csi.storage.k8s.io/controller-publish-secret-name: alletra-secret

csi.storage.k8s.io/controller-publish-secret-namespace: hpe-csi

csi.storage.k8s.io/node-publish-secret-name: alletra-secret

csi.storage.k8s.io/node-publish-secret-namespace: hpe-csi

csi.storage.k8s.io/node-stage-secret-name: alletra-secret

csi.storage.k8s.io/node-stage-secret-namespace: hpe-csi

csi.storage.k8s.io/provisioner-secret-name: alletra-secret

csi.storage.k8s.io/provisioner-secret-namespace: hpe-csi

description: "Volume created by the HPE CSI Driver for Kubernetes"

reclaimPolicy: Delete

allowVolumeExpansion: true

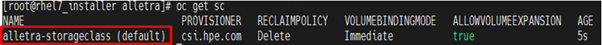

- Create the Alletra-storageclass.yml file with the following command:

$ oc create -f Alletra-storageclass.yml

- Verify the name of the storage class (in this case, Alletra-storageclass).

FIGURE 26: HPE Alletra StorageClass status
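The StorageClass repeats the same Secret name and namespace for five CSI operations (controller-expand, controller-publish, node-publish, node-stage, and provisioner). The hedged Python sketch below generates those pairs in a loop so they cannot drift apart, and checks the StorageClass name against the DNS-1123 subdomain rule that Kubernetes enforces for object names (lowercase alphanumerics, '-' and '.'). The function name is hypothetical; the values mirror the Alletra-storageclass.yaml example.

```python
import re

# The five CSI operations that need the backend Secret, as listed in the StorageClass.
CSI_SECRET_ROLES = (
    "controller-expand",
    "controller-publish",
    "node-publish",
    "node-stage",
    "provisioner",
)
# Kubernetes object names: DNS-1123 subdomain (lowercase letters, digits, '-' and '.').
DNS1123_SUBDOMAIN = re.compile(r"[a-z0-9]([-a-z0-9]*[a-z0-9])?(\.[a-z0-9]([-a-z0-9]*[a-z0-9])?)*")


def hpe_storage_class(name: str = "alletra-storageclass",
                      secret: str = "alletra-secret",
                      secret_namespace: str = "hpe-csi",
                      fstype: str = "xfs",
                      default_class: bool = True) -> dict:
    """Build an HPE CSI StorageClass manifest as a Python dict."""
    if len(name) > 253 or not DNS1123_SUBDOMAIN.fullmatch(name):
        raise ValueError(f"invalid StorageClass name {name!r}: must be a lowercase DNS-1123 subdomain")
    parameters = {"csi.storage.k8s.io/fstype": fstype}
    for role in CSI_SECRET_ROLES:
        parameters[f"csi.storage.k8s.io/{role}-secret-name"] = secret
        parameters[f"csi.storage.k8s.io/{role}-secret-namespace"] = secret_namespace
    parameters["description"] = "Volume created by the HPE CSI Driver for Kubernetes"
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {
            "name": name,
            "annotations": {
                # Annotation values are strings, so the flag is rendered as "true"/"false".
                "storageclass.kubernetes.io/is-default-class": str(default_class).lower(),
            },
        },
        "provisioner": "csi.hpe.com",
        "parameters": parameters,
        "reclaimPolicy": "Delete",
        "allowVolumeExpansion": True,
    }
```

The resulting dict can be written as JSON and passed to `oc create -f`, in the same way as a YAML manifest.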

Deploying Internal OpenShift Data Foundation

This section covers deploying OpenShift Data Foundation 4.16 on existing Red Hat OpenShift Container Platform 4.16 worker nodes.

The OpenShift Data Foundation operator installation uses the Local Storage Operator, which provides 10 GB of file system storage for the Ceph monitor (MON) pods and 500 GB or 2 TB of block storage for the OSD (Object Storage Daemon) volumes. The OSDs provide the storage that applications running on the OCP cluster consume through ODF.

Figure 27. Logical storage Layout in Solution

The following operators are required to create the ODF cluster and are deployed through automation:

Local Storage Operator

OpenShift Data Foundation Operator

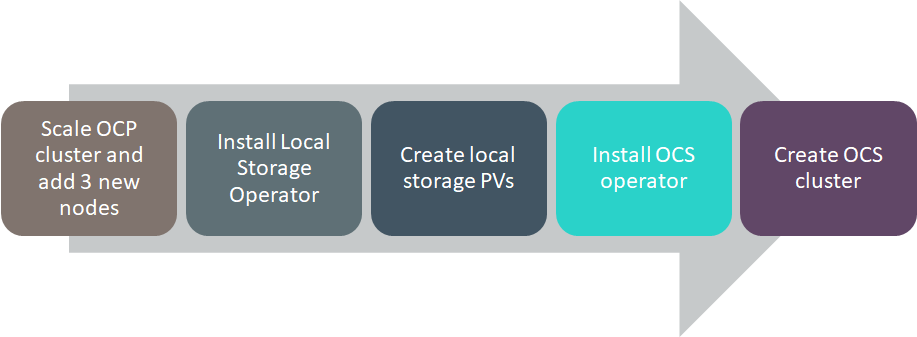

Flow Diagram

Figure 28. Deploying OpenShift Container Storage Solution Flow Diagram

Configuration requirements

The following table shows the required configuration for each worker node.

| Server Role | CPUs | RAM | Hard Disk 1 | Hard Disk 2 (MON) | Hard Disk 3 (OSD) |
|---|---|---|---|---|---|
| Worker | 16 | 64 GB | 120 GB | 10 GB | 500 GB or 2 TB |

Pre-requisites

Access to the Red Hat OpenShift Container Platform 4.16 cluster console, with login credentials.

Local storage available to OpenShift Container Platform from any storage source (for example, Nimble, Alletra, or local disks).

ODF installation on an OCP 4.16 cluster requires a minimum of three worker nodes, and this deployment uses exactly three. Each of these worker nodes uses two additional hard disks: a 10 GB disk for a MON pod (three in total, each always backed by a PVC) and a disk of 500 GB or more for an OSD volume (a PVC using the default "thin" storage class). Each node also requires 16 CPUs and 64 GB of RAM, with the hard disk configuration shown in the preceding table.
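The sizing rules above can be turned into a quick pre-flight check. This is a hedged sketch: it treats the values in the worker node table as minimums (an assumption, since the table lists a single configuration), expects the disks in the order listed in the table, and uses hypothetical names (WorkerNode, odf_sizing_problems); collecting the actual node facts is left out.

```python
from dataclasses import dataclass

# Minimums taken from the worker node configuration table.
MIN_CPUS = 16
MIN_RAM_GB = 64
MIN_DISKS_GB = (120, 10, 500)  # hard disk 1, MON disk, OSD disk
ODF_WORKER_COUNT = 3


@dataclass(frozen=True)
class WorkerNode:
    name: str
    cpus: int
    ram_gb: int
    disks_gb: tuple[int, ...]  # sizes in the order hard disk 1, 2, 3


def odf_sizing_problems(nodes: list[WorkerNode]) -> list[str]:
    """Return human-readable problems; an empty list means the nodes fit."""
    problems = []
    if len(nodes) != ODF_WORKER_COUNT:
        problems.append(f"expected exactly {ODF_WORKER_COUNT} ODF worker nodes, got {len(nodes)}")
    for node in nodes:
        if node.cpus < MIN_CPUS:
            problems.append(f"{node.name}: {node.cpus} CPUs < {MIN_CPUS}")
        if node.ram_gb < MIN_RAM_GB:
            problems.append(f"{node.name}: {node.ram_gb} GB RAM < {MIN_RAM_GB} GB")
        if len(node.disks_gb) < len(MIN_DISKS_GB):
            problems.append(f"{node.name}: {len(node.disks_gb)} disks, need {len(MIN_DISKS_GB)}")
            continue
        for index, (size, minimum) in enumerate(zip(node.disks_gb, MIN_DISKS_GB), start=1):
            if size < minimum:
                problems.append(f"{node.name}: disk {index} is {size} GB < {minimum} GB")
    return problems
```

For example, three nodes described as `WorkerNode("sworker1", 16, 64, (120, 10, 500))` and so on produce an empty list.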

Scripts for deploying ODF cluster

NOTE

BASE_DIR is the base directory that contains all the automation script directories: /opt/hpe-solutions-openshift/DL-LTI-Openshift/

This section describes the scripts developed to automate the installation of the ODF operator on the OCP cluster. The scripts used to deploy ODF are located on the installer VM at $BASE_DIR/odf_installation.

install_odf_operator.py: The main Python script, which installs the Local Storage Operator and the OpenShift Container Storage operators, creates the file system and block storage, and creates the SCs, PVs, and PVCs.

config.py: Converts the user input values into program variables for use by the install_odf_operator.py script.

userinput.json: Must be modified according to the user's configuration and requirements for installing and scaling the ODF cluster.

create_local_storage_operator.yaml: Creates the Local Storage Operator namespace and installs the Local Storage Operator.

auto_discover_devices.yaml: Creates the LocalVolumeDiscovery resource.

localvolumeset.yaml: Creates the LocalVolumeSets.

odf_operator.yaml: This playbook creates the OpenShift Container Storage namespace and the OpenShift Data Foundation Operator.

storagecluster.yaml: This playbook creates the storage classes, PVCs (Persistent Volume Claims), and pods that bring up the ODF cluster.

Installing OpenShift Container Storage on OpenShift Container Platform

Log in to the installer machine as a non-root user and activate the Python virtual environment as described in the deployment guide.

Update the userinput.json file, located at $BASE_DIR/odf_installation, with the following setup configuration details:

"OPENSHIFT_DOMAIN": "<OpenShift Server sub domain fqdn (api.domain.base_domain)>",
"OPENSHIFT_PORT": "<OpenShift Server port number (OpenShift Container Platform runs on port 6443 by default)>",
"LOCAL_STORAGE_VERSION": "<OCP_cluster_version>",
"OPENSHIFT_CONTAINER_STORAGE_VERSION": "",
"OPENSHIFT_CLIENT_PATH": "<Provide oc absolute path ending with / OR leave empty in case oc is available under /usr/local/bin>",
"OPENSHIFT_CONTAINER_PLATFORM_WORKER_NODES": <Provide OCP worker nodes fqdn list ["sworker1.fqdn", "sworker2.fqdn", "sworker3.fqdn"]>,
"OPENSHIFT_USERNAME": "",
"OPENSHIFT_PASSWORD": "",
"DISK_NUMBER": ""

Execute the following commands to deploy the ODF cluster:

> cd $BASE_DIR/odf_installation

> python -W ignore install_odf_operator.py

The output of the command is as follows:

> python -W ignore install_odf_operator.py
Enter key for encrypted variables:
Logging into your OpenShift Cluster
Successfully logged into the OpenShift Cluster
Waiting for 1 minutes to 'Local Storage' operator to be available on OCP web console..!!
'Local Storage' operator is created..!!
Waiting for 2 minutes to OCS operator to be available on OCP web console..!!
'OpenShift Container Storage' operator is created..!!
INFO:
1) Run the below command to list all PODs and PVCs of OCS cluster.
'oc get pod,pvc -n openshift-storage'
2) Wait for 'pod/ocs-operator-xxxx' pod to be up and running.
3) Log into OCP web GUI and check Persistant Stoarge in dashboard.
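Before running install_odf_operator.py, the edited userinput.json can be sanity-checked. The hedged sketch below uses only the keys listed in the template; it treats every key except OPENSHIFT_CLIENT_PATH (which the template allows to stay empty) as mandatory, which is an assumption, and it expects exactly three worker node FQDNs, as required for ODF in this solution. The function name is hypothetical and the check is not part of the HPE scripts.

```python
import json

# Keys listed in the userinput.json template.
REQUIRED_KEYS = (
    "OPENSHIFT_DOMAIN",
    "OPENSHIFT_PORT",
    "LOCAL_STORAGE_VERSION",
    "OPENSHIFT_CONTAINER_STORAGE_VERSION",
    "OPENSHIFT_CLIENT_PATH",
    "OPENSHIFT_CONTAINER_PLATFORM_WORKER_NODES",
    "OPENSHIFT_USERNAME",
    "OPENSHIFT_PASSWORD",
    "DISK_NUMBER",
)
# The template allows OPENSHIFT_CLIENT_PATH to stay empty when oc is in /usr/local/bin.
MAY_BE_EMPTY = {"OPENSHIFT_CLIENT_PATH"}


def userinput_problems(raw: str) -> list[str]:
    """Sanity-check the text of userinput.json and return a list of problems."""
    data = json.loads(raw)
    problems = []
    for key in REQUIRED_KEYS:
        if key not in data:
            problems.append(f"missing key: {key}")
        elif key not in MAY_BE_EMPTY and data[key] in ("", None, []):
            problems.append(f"empty value: {key}")
    client_path = data.get("OPENSHIFT_CLIENT_PATH") or ""
    if client_path and not client_path.endswith("/"):
        problems.append("OPENSHIFT_CLIENT_PATH must end with '/'")
    port = str(data.get("OPENSHIFT_PORT", ""))
    if port and not port.isdigit():
        problems.append(f"OPENSHIFT_PORT is not a number: {port!r}")
    workers = data.get("OPENSHIFT_CONTAINER_PLATFORM_WORKER_NODES")
    if workers is not None and not isinstance(workers, list):
        problems.append("OPENSHIFT_CONTAINER_PLATFORM_WORKER_NODES must be a list of FQDNs")
    elif isinstance(workers, list) and len(workers) != 3:
        problems.append(f"expected 3 worker node FQDNs, got {len(workers)}")
    return problems
```

Usage: `userinput_problems(open("userinput.json").read())`; an empty list means none of these checks failed.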

Validation of the OpenShift Data Foundation cluster

The script creates the required operators, which are then reflected in the OpenShift console. To verify that the operators created by the script appear in the GUI:

Log in to the OpenShift Container Platform web console as a user with administrative privileges.

Navigate to Operators → Installed Operators and select the local-storage project.

The Local Storage Operator is available in the OpenShift web console, as shown in Figure 29.

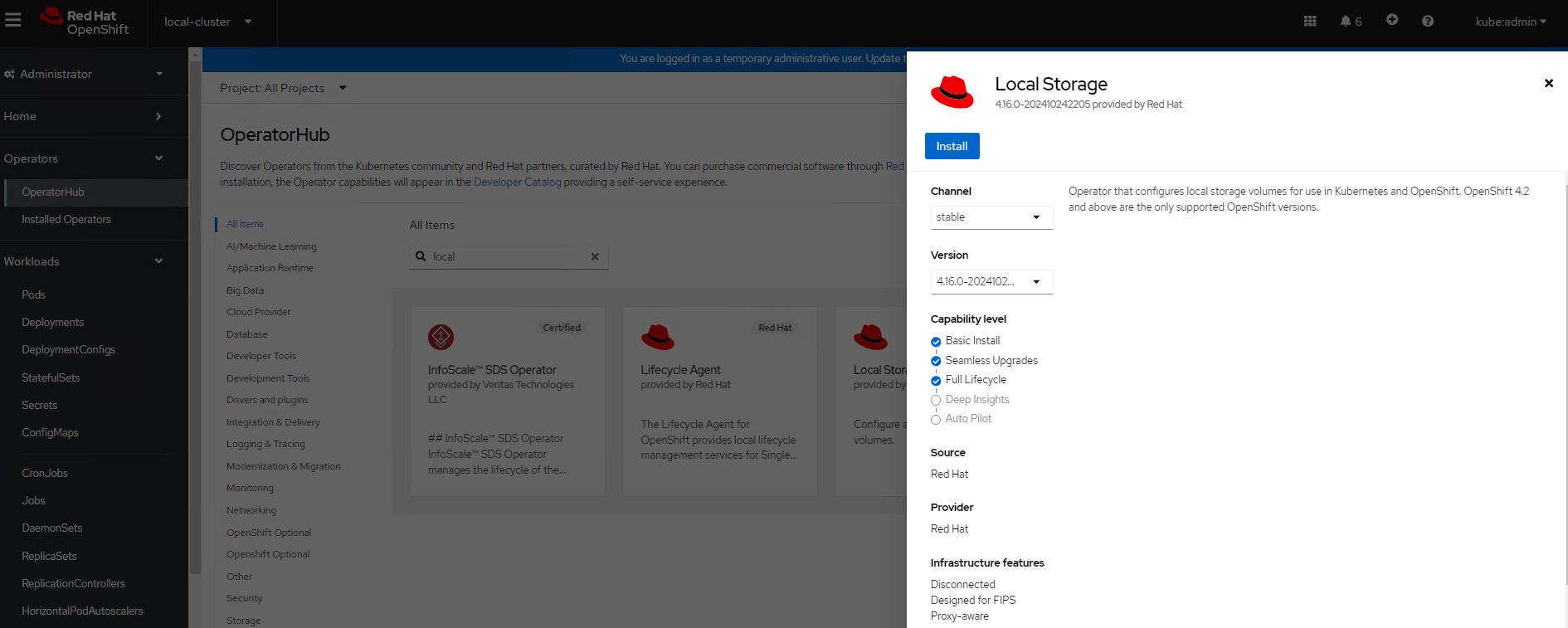

Figure 29. Deploying local storage operator

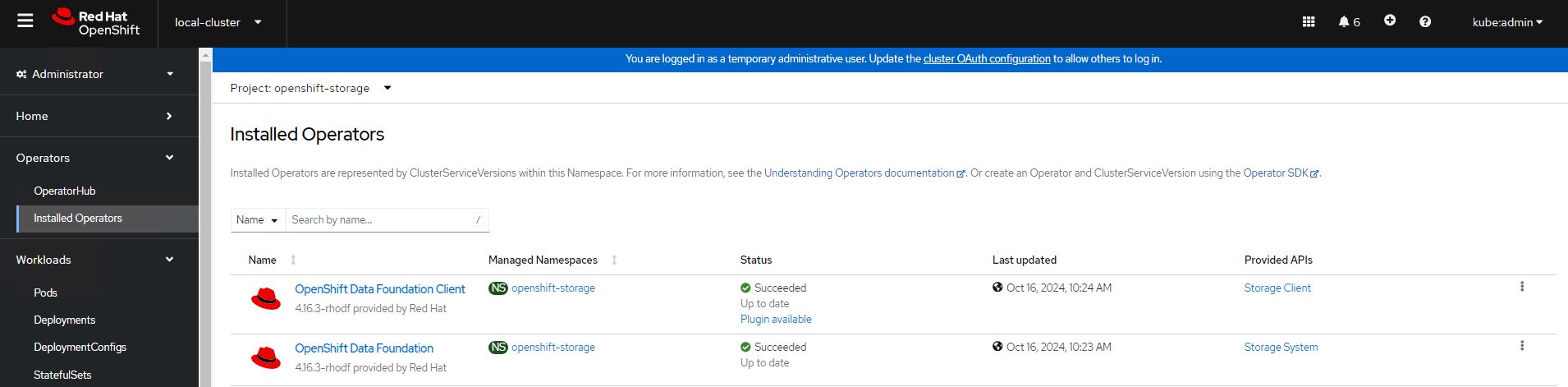

Navigate to Operators → Installed Operators and select the openshift-storage project to view the OpenShift Container Storage operator.

The operator is available on the OpenShift Container Platform web console, as shown in Figure 30.

Figure 30. Deployed OpenShift Data Foundation operator

The storage classes (SCs) of the OpenShift Data Foundation operator can be listed from the CLI:

> oc get sc

| NAME | PROVISIONER | RECLAIMPOLICY | VOLUMEBINDINGMODE | ALLOWVOLUMEEXPANSION | AGE |
|---|---|---|---|---|---|
| local-sc | kubernetes.io/no-provisioner | Delete | WaitForFirstConsumer | false | 45h |
| localblock-sc | kubernetes.io/no-provisioner | Delete | WaitForFirstConsumer | false | 45h |
| odf-storagecluster-ceph-rbd | openshift-storage.rbd.csi.ceph.com | Delete | Immediate | false | 45h |
| odf-storagecluster-cephfs | openshift-storage.cephfs.csi.ceph.com | Delete | Immediate | false | 45h |
| openshift-storage.noobaa.io | openshift-storage.noobaa.io/obc | Delete | Immediate | false | 45h |

The persistent volumes (PVs) of the OpenShift Data Foundation operator can be listed from the CLI:

> oc get pv

| NAME | CAPACITY | ACCESS MODES | RECLAIM POLICY | STATUS | CLAIM | STORAGECLASS | REASON | AGE |
|---|---|---|---|---|---|---|---|---|
| local-pv-2febb788 | 500Gi | RWO | Delete | Bound | openshift-storage/odf-deviceset-1-0-lhtvh | localblock-sc | | 45h |
| local-pv-5f19c0e5 | 10Gi | RWO | Delete | Bound | openshift-storage/rook-ceph-mon-b | local-sc | | 45h |
| local-pv-b73b1cd5 | 500Gi | RWO | Delete | Bound | openshift-storage/odf-deviceset-2-0-jmmck | localblock-sc | | 45h |
| local-pv-b8ba8c38 | 10Gi | RWO | Delete | Bound | openshift-storage/rook-ceph-mon-a | local-sc | | 45h |
| local-pv-c3a372f6 | 10Gi | RWO | Delete | Bound | openshift-storage/rook-ceph-mon-c | local-sc | | 45h |
| local-pv-e5e3d596 | 500Gi | RWO | Delete | Bound | openshift-storage/odf-deviceset-0-0-5jxg7 | localblock-sc | | 45h |
| pvc-8f3e3d8b-6be7-4ba8-8968-69cbc866c89f | 50Gi | RWO | Delete | Bound | openshift-storage/db-noobaa-db-0 | ocs-storagecluster-ceph-rbd | | 45h |

The persistent volume claims (PVCs) of the OpenShift Data Foundation operator can be listed from the CLI:

> oc get pvc -n openshift-storage

| NAME | STATUS | VOLUME | CAPACITY | ACCESS MODES | STORAGECLASS | AGE |
|---|---|---|---|---|---|---|
| db-noobaa-db-0 | Bound | pvc-8f3e3d8b-6be7-4ba8-8968-69cbc866c89f | 50Gi | RWO | odf-storagecluster-ceph-rbd | 45h |
| odf-deviceset-0-0-5jxg7 | Bound | local-pv-e5e3d596 | 500Gi | RWO | localblock-sc | 45h |
| odf-deviceset-1-0-lhtvh | Bound | local-pv-2febb788 | 500Gi | RWO | localblock-sc | 45h |
| odf-deviceset-2-0-jmmck | Bound | local-pv-b73b1cd5 | 500Gi | RWO | localblock-sc | 45h |
| rook-ceph-mon-a | Bound | local-pv-b8ba8c38 | 10Gi | RWO | local-sc | 45h |
| rook-ceph-mon-b | Bound | local-pv-5f19c0e5 | 10Gi | RWO | local-sc | 45h |
| rook-ceph-mon-c | Bound | local-pv-c3a372f6 | 10Gi | RWO | local-sc | 45h |

The pods of the OpenShift Data Foundation operator can be listed from the CLI:

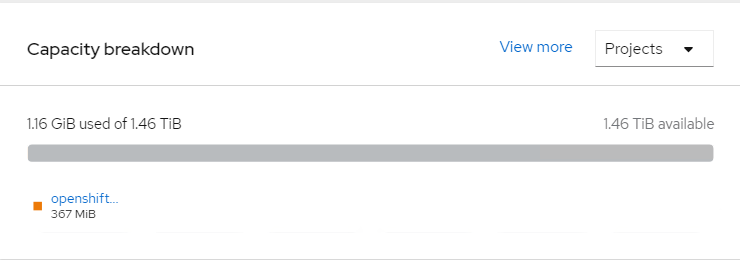

> oc get pod -n openshift-storage

| NAME | READY | STATUS | RESTARTS | AGE |
|---|---|---|---|---|
| csi-cephfsplugin-6xpsk | 3/3 | Running | 0 | 45h |
| csi-cephfsplugin-7khm6 | 3/3 | Running | 0 | 17m |
| csi-cephfsplugin-bb48n | 3/3 | Running | 0 | 45h |
| csi-cephfsplugin-cfzx6 | 3/3 | Running | 0 | 15m |
| csi-cephfsplugin-provisioner-79587c64f9-2dpm6 | 5/5 | Running | 0 | 45h |
| csi-cephfsplugin-provisioner-79587c64f9-hf46x | 5/5 | Running | 0 | 45h |
| csi-cephfsplugin-w6p6v | 3/3 | Running | 0 | 45h |
| csi-rbdplugin-2z686 | 3/3 | Running | 0 | 45h |
| csi-rbdplugin-6tv5m | 3/3 | Running | 0 | 45h |
| csi-rbdplugin-jgf5z | 3/3 | Running | 0 | 17m |
| csi-rbdplugin-provisioner-5f495c4566-76rqm | 5/5 | Running | 0 | 45h |
| csi-rbdplugin-provisioner-5f495c4566-pzvww | 5/5 | Running | 0 | 45h |
| csi-rbdplugin-v7lfx | 3/3 | Running | 0 | 45h |
| csi-rbdplugin-ztdjs | 3/3 | Running | 0 | 15m |
| noobaa-core-0 | 1/1 | Running | 0 | 45h |
| noobaa-db-0 | 1/1 | Running | 0 | 45h |
| noobaa-endpoint-6458fc874f-vpznd | 1/1 | Running | 0 | 45h |
| noobaa-operator-7f4495fc6-lmk9k | 1/1 | Running | 0 | 45h |
| odf-operator-5d664769f-59v8j | 1/1 | Running | 0 | 45h |
| rook-ceph-crashcollector-sworker1.socp.twentynet.local-84dddddb | 1/1 | Running | 0 | 45h |
| rook-ceph-crashcollector-sworker2.socp.twentynet.local-8b5qzzsz | 1/1 | Running | 0 | 45h |
| rook-ceph-crashcollector-sworker3.socp.twentynet.local-699n9kzp | 1/1 | Running | 0 | 45h |
| rook-ceph-drain-canary-sworker1.socp.twentynet.local-85bffzm66m | 1/1 | Running | 0 | 45h |
| rook-ceph-drain-canary-sworker2.socp.twentynet.local-66bcfjjfkr | 1/1 | Running | 0 | 45h |
| rook-ceph-drain-canary-sworker3.socp.twentynet.local-5f6b57c9nt | 1/1 | Running | 0 | 45h |
| rook-ceph-mds-ocs-storagecluster-cephfilesystem-a-67fbb67dkb4kz | 1/1 | Running | 0 | 45h |
| rook-ceph-mds-ocs-storagecluster-cephfilesystem-b-db66df7dtqkmx | 1/1 | Running | 0 | 45h |
| rook-ceph-mgr-a-6f5f7b58dc-fjvjc | 1/1 | Running | 0 | 45h |
| rook-ceph-mon-a-76cc49c944-5pcgf | 1/1 | Running | 0 | 45h |
| rook-ceph-mon-b-b9449cdd7-s6mct | 1/1 | Running | 0 | 45h |
| rook-ceph-mon-c-59d854cd8-gn6sd | 1/1 | Running | 0 | 45h |
| rook-ceph-operator-775cd6cd66-sdfph | 1/1 | Running | 0 | 45h |
| rook-ceph-osd-0-7644557bfb-9l7ns | 1/1 | Running | 0 | 45h |
| rook-ceph-osd-1-7694c74948-lc9sf | 1/1 | Running | 0 | 45h |
| rook-ceph-osd-2-794547558-wjcpz | 1/1 | Running | 0 | 45h |
| rook-ceph-osd-prepare-ocs-deviceset-0-0-5jxg7-t89zh | 0/1 | Completed | 0 | 45h |
| rook-ceph-osd-prepare-ocs-deviceset-1-0-lhtvh-f2znl | 0/1 | Completed | 0 | 45h |
| rook-ceph-osd-prepare-ocs-deviceset-2-0-jmmck-wsrb2 | 0/1 | Completed | 0 | 45h |
| rook-ceph-rgw-ocs-storagecluster-cephobjectstore-a-67b7865qx276 | 1/1 | Running | 0 | 45h |

The following figure shows the storage capacity of the ODF cluster with three worker nodes (3 x 500Gi) in the OCP web console.

Figure 31. Storage capacities of workers
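Checking a long pod list by eye is error-prone. The following Python sketch (a hypothetical helper, not part of the HPE scripts) parses the plain-text output of `oc get pod -n openshift-storage` and reports pods that are neither Completed (such as the rook-ceph-osd-prepare jobs) nor Running with all containers ready.

```python
def unhealthy_pods(oc_output: str) -> list[str]:
    """Return pod names from `oc get pod` output that do not look healthy.

    A pod counts as healthy when STATUS is Completed, or when STATUS is
    Running and every container is ready (READY shows n/n).
    """
    unhealthy = []
    for line in oc_output.splitlines():
        cols = line.split()
        # Skip blank lines, the header row, and anything that is not a pod row.
        if len(cols) < 5 or cols[0] == "NAME":
            continue
        name, ready, status = cols[0], cols[1], cols[2]
        if status == "Completed":
            continue
        ready_now, _, ready_total = ready.partition("/")
        if status != "Running" or ready_now != ready_total:
            unhealthy.append(name)
    return unhealthy
```

Usage: capture the command output, for example with `subprocess.run(["oc", "get", "pod", "-n", "openshift-storage"], capture_output=True, text=True).stdout`, and pass it in; an empty list means every listed pod looks healthy.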

Validating ODF by deploying a WordPress application

This section covers the steps to validate the OpenShift Data Foundation (ODF) deployment by deploying a two-tier application: WordPress with a MySQL database.

Prerequisites

OCP 4.16 cluster must be installed.

ODF must be available to claim persistent volumes (PVs).

Deploying External OpenShift Data Foundation

This section covers deploying External OpenShift Data Foundation 4.16 on an existing Red Hat OpenShift Container Platform 4.16 cluster.

Lite Touch Installation of an external ODF (Ceph) cluster using Ansible playbooks

Installer machine prerequisites:

A RHEL 9.4 installer machine with the following configuration (the installer machine used for the RHOCP cluster deployment can be reused):

At least 500 GB of disk space (particularly in the "/" partition), 4 CPU cores, and 16 GB of RAM.

The RHEL 9.4 installer machine must be subscribed with valid Red Hat credentials.

Time synchronized with an NTP server.

An SSH key pair on the installer machine. If one does not exist, generate a new SSH key pair using the following command:

ssh-keygen

- Use the following commands to create and activate a Python3 virtual environment for deploying this solution.

python3 -m venv \<virtual_environment_name\>

source \<virtual_environment_name\>/bin/activate

For example, virtual_environment_name=ocp.

- Execute the following commands in the Ansible Engine to download the repositories.

mkdir -p /opt

cd /opt

yum install -y git

git clone https://github.com/HewlettPackard/hpe-solutions-openshift.git

- Set up the installer machine by configuring nginx, the development tools, and the other Python packages required for the LTI installation. Navigate to the /opt/hpe-solutions-openshift/DL-LTI-Openshift/external-ceph_deployment directory and run the following command:

sh setup.sh

The setup.sh script creates an nginx service, so the RHEL DVD ISO must be downloaded and copied to /usr/share/nginx/html/.

Minimum storage requirements for the external ODF cluster servers

| Servers | Host OS disk | Storage pool disk |
|---|---|---|
| Server 1 | 2 x 1.6 TB | 8 x 1.6 TB |
| Server 2 | 2 x 1.6 TB | 8 x 1.6 TB |
| Server 3 | 2 x 1.6 TB | 8 x 1.6 TB |

Host OS disk: RAID 1 for redundancy.
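The storage pool disks in this table determine the raw capacity of the external cluster. As a worked example under stated assumptions (1.6 TB taken as 1,600 GB, and a 3-way replicated pool, which is Ceph's default replica count but is not stated in this guide), the three servers provide 3 x 8 x 1.6 TB = 38.4 TB raw and roughly 38.4 / 3 = 12.8 TB usable. The hypothetical helper below performs the same arithmetic for other layouts.

```python
def ceph_capacity_tb(servers: int = 3, osd_disks_per_server: int = 8,
                     disk_gb: int = 1600, replicas: int = 3) -> tuple[float, float]:
    """Return (raw_tb, usable_tb) for a replicated Ceph pool layout.

    usable = raw / replicas. This ignores Ceph metadata overhead and the free-space
    headroom usually kept on OSDs, so treat the usable figure as an upper bound.
    """
    raw_gb = servers * osd_disks_per_server * disk_gb  # integer GB keeps the math exact
    return raw_gb / 1000, raw_gb / replicas / 1000
```

With the table's defaults, `ceph_capacity_tb()` returns `(38.4, 12.8)`; with `replicas=2` the usable figure becomes 19.2 TB.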

For creating and deleting logical drives, refer to the README of the OpenShift platform installation.

Playbook execution: To delete any existing logical drives on the servers and create new logical drives named 'RHEL Boot Volume' on the respective iLO servers, run the site.yml playbook in the create_delete_logicaldrives directory with the following command:

# ansible-playbook site.yml --ask-vault-pass

Note: If your setup does not use a proxy or VLANs, leave these variables empty as shown below:

servers:

Host_VlanID:

SquidProxy_IP:

SquidProxy_Port:

squid_proxy_IP:

corporate_proxy:

squid_port:

Input files

The input variables can be provided using the following method:

input.yaml: Manually editing the input file

Go to the $BASE_DIR directory (/opt/hpe-solutions-openshift/DL-LTI-Openshift/external-ceph_deployment), which contains the input.yaml and hosts files.

- A preconfigured Ansible vault file (input.yaml) is provided as a part of this solution, which consists of sensitive information to support the host and virtual machine deployment.

cd $BASE_DIR

Run the following command on the installer VM to edit the vault to match the installation environment:

ansible-vault edit input.yaml

NOTE

The default password for the Ansible vault file is changeme.

A sample file, input_sample.yml, which describes each input variable, is available in $BASE_DIR.

A sample input.yaml file with a few parameters filled in is as follows:

- Server_serial_number: 2M20510XXX
  ILO_Address: 172.28.*.*
  ILO_Username: admin
  ILO_Password: *****
  Hostname: ceph01.XX.XX #ex. ceph01.isv.local
  Host_Username: root
  Host_Password: ******
  HWADDR1: XX:XX:XX:XX:XX:XX #mac address for server physical interface1
  HWADDR2: XX:XX:XX:XX:XX:XX #mac address for server physical interface2
  Host_OS_disk: sda
  Host_VlanID: 230 #solution VLAN ID ex. 230
  Host_IP: 172.28.*.*
  Host_Netmask: 255.*.*.*
  Host_Prefix: XX #ex. 8,24,32
  Host_Gateway: 172.28.*.*
  Host_DNS: 172.28.*.*
  Host_Domain: XX.XX #ex. isv.local
  SquidProxy_IP: #provide Squid proxy, ex. proxy.houston.hpecorp.net
  SquidProxy_Port:

Note

The details of the first machine in the servers section of the input file are used for the manager/admin node of the Ceph cluster.

config:
  HTTP_server_base_url: http://172.28.*.*/ #Installer IP address
  HTTP_file_path: /usr/share/nginx/html/
  OS_type: rhel
  OS_image_name: rhel-9.4-x86_64-dvd.iso
  base_kickstart_filepath: '/opt/hpe-solutions-openshift/DL-LTI-Openshift/external-ceph_deployment/playbooks/roles/rhel9_os_deployment/tasks/ks_rhel9.cfg'
  dashboard_user: xxx # provide the Ceph user who needs admin access
  dashboard_password: xxxxxxxxxxx # provide the temporary password to set up for this user
  client_user: xxxx # provide the user who needs read-only access to the dashboard
  client_pwd: xxxxxxxxxxx # provide the password for the user with read-only access to the dashboard
  ceph_adminuser: xxxxxxxxx # provide the Ceph user who will deploy the cluster
  ceph_adminuser_password: xxxxxxx # provide the password for the user who will deploy the cluster
  rbd_pool_name: xxxxxx # provide the name of the block (RBD) pool to be created in the cluster
  rgw_pool_name: xxxx # provide the name of the gateway (RGW) pool to be created in the cluster
  ceph_fs:
    - fs_pool_name: xxxxxx # provide the name of the Ceph file system to be created in the cluster
      number_of_daemons: x # provide the number of MDS daemons to create
      hosts:
        - xxxx.xxxxxx.xxxx # provide the FQDNs of the nodes where the MDS daemons run; for 3 daemons, provide 3 FQDNs
        - xxxx.xxxxxx.xxxx
        - xxxx.xxxxxx.xxxx
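Two pairs of fields in input.yaml must agree with each other: Host_Prefix with Host_Netmask, and number_of_daemons with the number of MDS hosts. The hedged sketch below checks both on data already loaded from input.yaml (loading it requires a YAML parser and the vault password, which are out of scope here). The function names are hypothetical, and the sketch takes the server entries and the ceph_fs entries as separate lists rather than assuming exactly where they sit in the file.

```python
import ipaddress


def prefix_from_netmask(netmask: str) -> int:
    """Convert a dotted netmask such as 255.255.255.0 to a prefix length (24)."""
    return ipaddress.IPv4Network(f"0.0.0.0/{netmask}").prefixlen


def server_problems(servers: list[dict]) -> list[str]:
    """Check that Host_Prefix agrees with Host_Netmask for every server entry."""
    problems = []
    for server in servers:
        host = server.get("Hostname", "<unknown>")
        try:
            expected = prefix_from_netmask(server["Host_Netmask"])
            prefix = int(server["Host_Prefix"])
        except ValueError as exc:  # covers NetmaskValueError and unfilled placeholders
            problems.append(f"{host}: invalid Host_Netmask or Host_Prefix ({exc})")
            continue
        if prefix != expected:
            problems.append(f"{host}: Host_Prefix {prefix} does not match Host_Netmask (/{expected})")
    return problems


def ceph_fs_problems(ceph_fs: list[dict]) -> list[str]:
    """Check that each file system lists one MDS host per requested daemon."""
    problems = []
    for fs in ceph_fs:
        wanted = int(fs["number_of_daemons"])
        hosts = fs.get("hosts") or []
        if wanted != len(hosts):
            problems.append(f"{fs['fs_pool_name']}: number_of_daemons={wanted} but {len(hosts)} hosts listed")
    return problems
```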

- Review the hosts file (located on the installer VM at $BASE_DIR/hosts) and ensure that the information in it accurately reflects your environment.

Use an editor such as vi or nano to edit the inventory file.

vi $BASE_DIR/hosts

[all]

installer.isv.local

ceph01.isv.local

ceph02.isv.local

ceph03.isv.local

[OSD]

ceph01.isv.local

ceph02.isv.local

ceph03.isv.local

[admin]

ceph01.isv.local

In the sample file above, installer is the Ansible machine, ceph01 is the Ceph administration node, and ceph01, ceph02, and ceph03 are the nodes with Object Storage Daemon (OSD) disks.
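A malformed inventory only shows up once the playbooks fail, so a quick structural check can save a run. The hedged sketch below uses Python's configparser as a stand-in for Ansible's own inventory parser (it handles plain host lists like the sample, not every inventory feature); the function names and the rule of at least three OSD hosts (taken from the three-server minimum in this guide) are assumptions.

```python
import configparser


def parse_inventory(text: str) -> dict[str, list[str]]:
    """Parse a simple INI-style Ansible inventory into {group: [hosts]}."""
    parser = configparser.ConfigParser(allow_no_value=True, delimiters=("=",))
    parser.optionxform = str  # keep host names exactly as written
    parser.read_string(text)
    return {group: list(parser[group]) for group in parser.sections()}


def inventory_problems(groups: dict[str, list[str]]) -> list[str]:
    """Check the [all], [OSD] and [admin] groups used by the Ceph playbooks."""
    problems = []
    all_hosts = set(groups.get("all", []))
    osd = groups.get("OSD", [])
    admin = groups.get("admin", [])
    if not osd:
        problems.append("group [OSD] is missing or empty")
    elif len(osd) < 3:
        problems.append(f"[OSD] lists {len(osd)} hosts, expected at least 3")
    if len(admin) != 1:
        problems.append(f"[admin] should list exactly one node, found {len(admin)}")
    for group, hosts in groups.items():
        for host in hosts:
            if host not in all_hosts:
                problems.append(f"{host} in [{group}] is not listed in [all]")
    return problems
```

Usage: `inventory_problems(parse_inventory(open("hosts").read()))` returns an empty list for the sample inventory shown above.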

Playbook execution

The external OpenShift Data Foundation (Ceph) cluster can be deployed by running site.yml or by running the individual playbooks. Each playbook is described later in this document.

Run the following command to execute the Lite Touch Installation:

ansible-playbook -i hosts site.yml --ask-vault-pass

To deploy through the individual playbooks instead, run them in the following sequence:

- import_playbook: playbooks/rhel9_os_deployment.yaml
- import_playbook: playbooks/ssh.yaml
- import_playbook: playbooks/subscriptions.yaml
- import_playbook: playbooks/ntp.yaml
- import_playbook: playbooks/user_req.yaml
- import_playbook: playbooks/deploy_cephadm.yaml
- import_playbook: playbooks/registry.yaml
- import_playbook: playbooks/preflight.yaml
- import_playbook: playbooks/bootstrap.yaml
- import_playbook: playbooks/add_hosts.yaml
- import_playbook: playbooks/add_osd.yaml
- import_playbook: playbooks/client_user.yaml
- import_playbook: playbooks/copy_script.yaml
- import_playbook: playbooks/rbd.yaml # execute this playbook for rbd storage
- import_playbook: playbooks/fs.yaml # execute this playbook for cephfs
- import_playbook: playbooks/rgw.yaml # execute this playbook for rgw

Execute the last three playbooks (rbd.yaml, fs.yaml, and rgw.yaml) according to the customer's requirements.
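Because the last three playbooks are optional, a deployment-specific playbook list can be generated rather than edited by hand. The following is a minimal sketch that keeps the documented order; the helper name site_yml is hypothetical, and its output is only the import_playbook list, not the complete site.yml shipped in the repository.

```python
# Order taken from the playbook sequence in this guide; the storage playbooks are optional.
BASE_PLAYBOOKS = (
    "rhel9_os_deployment.yaml", "ssh.yaml", "subscriptions.yaml", "ntp.yaml",
    "user_req.yaml", "deploy_cephadm.yaml", "registry.yaml", "preflight.yaml",
    "bootstrap.yaml", "add_hosts.yaml", "add_osd.yaml", "client_user.yaml",
    "copy_script.yaml",
)
STORAGE_PLAYBOOKS = {"rbd": "rbd.yaml", "fs": "fs.yaml", "rgw": "rgw.yaml"}


def site_yml(storage: set[str]) -> str:
    """Render the import_playbook list for the chosen storage services."""
    unknown = set(storage) - set(STORAGE_PLAYBOOKS)
    if unknown:
        raise ValueError(f"unknown storage types: {sorted(unknown)}")
    names = list(BASE_PLAYBOOKS)
    # Keep the documented rbd -> fs -> rgw order regardless of how `storage` is given.
    names += [STORAGE_PLAYBOOKS[kind] for kind in ("rbd", "fs", "rgw") if kind in storage]
    return "".join(f"- import_playbook: playbooks/{name}\n" for name in names)
```

For example, `site_yml({"rbd"})` yields the thirteen base imports followed by `playbooks/rbd.yaml` only.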

Playbook description

site.yml

- This playbook contains the script to deploy the external OpenShift Data Foundation (ODF) platform, from OS deployment until the cluster is up.

rhel9_os_deployment.yaml

- This playbook contains the scripts to deploy RHEL 9.4 on bare-metal servers.

ssh.yaml

- This playbook contains the script to create and copy the ssh public key from installer machine to other nodes in the cluster.

subscriptions.yaml

- This playbook contains the script to register the RHEL subscription and enable the repositories required for building the Ceph cluster.

ntp.yaml

- This playbook contains the script to set up NTP on the head nodes to ensure time synchronization.

user_req.yaml

- This playbook contains the script to create a user on all nodes and copy the required ssh keys to the newly created user.

deploy_cephadm.yaml

- This playbook contains the script to deploy the cephadm packages to the administration node.

registry.yaml

- This playbook contains the script to create the registry on the installer node.

preflight.yaml

- This playbook contains the script to call the Red Hat-provided playbooks that complete additional prerequisites required for the environment.

bootstrap.yaml

- This playbook contains the script to bootstrap the ceph cluster.

add_hosts.yaml

- This playbook contains the script to add the servers to the ODF cluster.

add_osd.yaml

- This playbook contains the script to add the OSD disks.

client_user.yaml

- This playbook contains the script to create a user with read only access to the cluster.

copy_script.yaml

- This playbook contains the script to copy scripts to the administration node.

rbd.yaml

- This playbook contains the script to create a RADOS Block Device (RBD) pool in the cluster.

fs.yaml

- This playbook contains the script to create the Ceph file system (CephFS).

rgw.yaml

- This playbook contains the script to create Ceph Object Gateway.

Integration of external ODF with OpenShift Container Platform

Prerequisites:

- The OpenShift Data Foundation operator is deployed on OpenShift Container Platform, and its dashboard is available.

Steps for integration:

Click Operators → Installed Operators to view all the installed operators.

Ensure that the Project selected is openshift-storage.

Click OpenShift Data Foundation and then click Create StorageSystem.

Select Connect an external storage platform from the available options.

Select Red Hat Ceph Storage for Storage platform and click Next.

Click the Download Script link to download the Python script for extracting Ceph cluster details.

Run the Python script, providing the Ceph cluster details.

The following example runs the script to extract the details of a Ceph cluster for the RBD data pool odf-rbd:

python3 ceph-external-cluster-details-exporter.py --rbd-data-pool-name odf-rbd

Upload the output in the OpenShift Container Platform dashboard and click Next.

After the Ceph details are imported successfully, the required storage classes are created.

To validate the deployment, deploy the WordPress application as described in the Validating Persistent storage section.

Validating Persistent storage

Validating Persistent Storage (ODF) by deploying WordPress application

This section covers the steps to validate the OpenShift Data Foundation (ODF) deployment by deploying a two-tier application: WordPress with a MySQL database.

Prerequisites

OCP 4.16 cluster must be installed.

ODF must be available to claim persistent volumes (PVs).

NOTE

BASE_DIR is the base directory that contains all the automation script directories: /opt/hpe-solutions-openshift/DL-LTI-Openshift

Log in to the installer machine as a non-root user.

From within the repository, navigate to the WordPress script folder:

> cd $BASE_DIR/odf_installation/wordpress

Run the following script to deploy the WordPress application along with MySQL:

> ./deploy_wordpress.sh

The deploy_wordpress.sh script performs the following tasks:

Creates a project

Sets the default storage class

Deploys the WordPress and MySQL apps

Creates routes

The output of the script is as follows:

> ./deploy_wordpress.sh

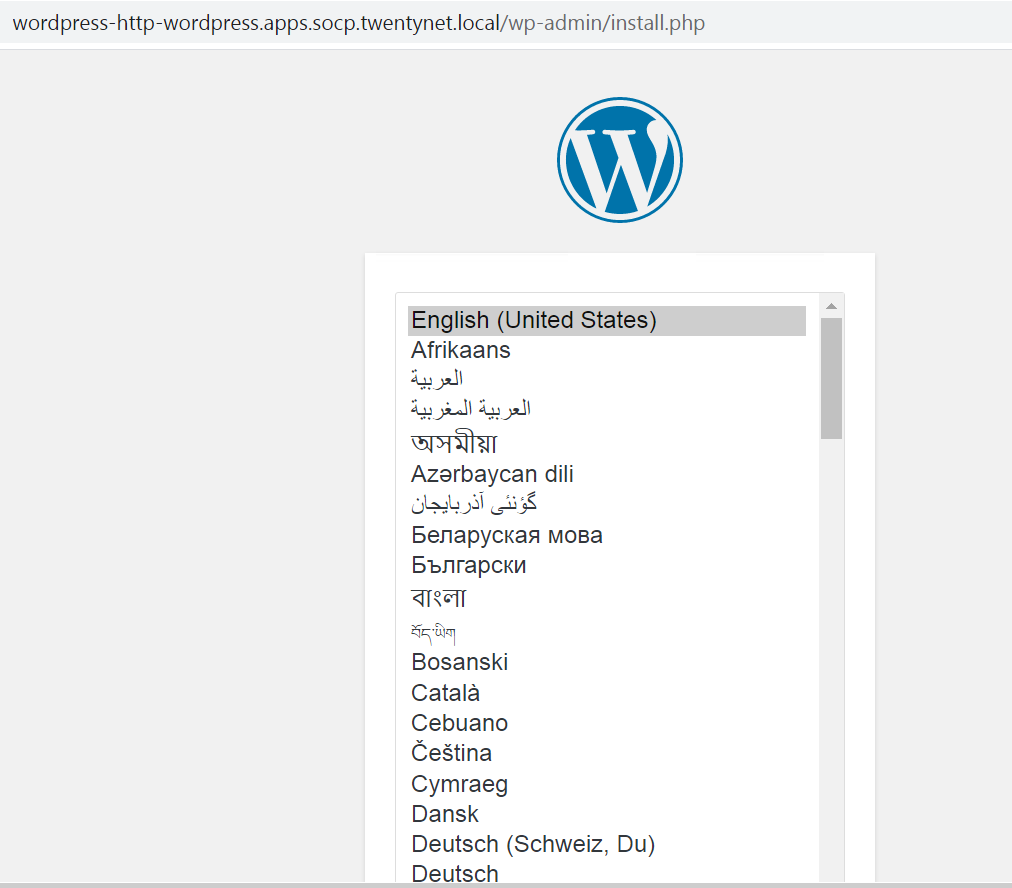

Already on project "wordpress" on server "https://api.socp.twentynet.local:6443".
You can add applications to this project with the 'new-app' command. For example, try:
oc new-app ruby~https://github.com/sclorg/ruby-ex.git
to build a new example application in Ruby. Or use kubectl to deploy a simple Kubernetes application:
kubectl create deployment hello-node --image=gcr.io/hello-minikube-zero-install/hello-node
Already on project "wordpress" on server "https://api.socp.twentynet.local:6443".
clusterrole.rbac.authorization.k8s.io/system:openshift:scc:anyuid added: "default"
error: --overwrite is false but found the following declared annotation(s): 'storageclass.kubernetes.io/is-default-class' already has a value (true)
service/wordpress-http created
service/wordpress-mysql created
persistentvolumeclaim/mysql-pv-claim created
persistentvolumeclaim/wp-pv-claim created
secret/mysql-pass created
deployment.apps/wordpress-mysql created
deployment.apps/wordpress created
route.route.openshift.io/wordpress-http created
URL to access application wordpress-http-wordpress.apps.socp.twentynet.local

Verifying the WordPress deployment

Execute the following command to verify the persistent volumes associated with the WordPress application and the MySQL database:

> oc get pods,pvc,route -n wordpress

| NAME | READY | STATUS | RESTARTS | AGE |
|---|---|---|---|---|
| pod/wordpress-6f69797b8f-hqpss | 1/1 | Running | 0 | 5m52s |
| pod/wordpress-mysql-8f4b599b5-cd2s2 | 1/1 | Running | 0 | 5m52s |

| NAME | STATUS | VOLUME | CAPACITY | ACCESS MODES | STORAGECLASS | AGE |
|---|---|---|---|---|---|---|
| persistentvolumeclaim/mysql-pv-claim | Bound | pvc-ccf2a578-9ba3-4577-8115-7c80ac200a9c | 5Gi | RWO | ocs-storagecluster-ceph-rbd | 5m50s |
| persistentvolumeclaim/wp-pv-claim | Bound | pvc-3acec0a0-943d-4138-bda9-5b57f8c35c5d | 5Gi | RWO | ocs-storagecluster-ceph-rbd | 5m50s |

| NAME | HOST/PORT | PATH | SERVICES | PORT | TERMINATION | WILDCARD |
|---|---|---|---|---|---|---|
| route.route.openshift.io/wordpress-http | wordpress-http-wordpress.apps.socp.twentynet.local | | wordpress-http | 80-tcp | | None |

Access the route URL in a browser to open the WordPress application, as shown in Figure 32.

Figure 32. Sample application (WordPress)
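The claim check can also be scripted. The following is a hedged sketch (a hypothetical helper, not part of deploy_wordpress.sh) that reads the plain-text output of `oc get pvc -n wordpress` and lists any claim whose STATUS is not Bound; an empty result means both mysql-pv-claim and wp-pv-claim received volumes from ODF.

```python
def unbound_pvcs(oc_output: str) -> list[str]:
    """Return PVC names from `oc get pvc` output whose STATUS is not Bound."""
    unbound = []
    for line in oc_output.splitlines():
        cols = line.split()
        if len(cols) < 2 or cols[0] == "NAME":
            continue  # skip blank lines and the header row
        name, status = cols[0], cols[1]
        if status != "Bound":
            unbound.append(name)
    return unbound
```

A claim that stays Pending usually points to a missing or non-default storage class, so that is a reasonable first thing to check when this list is not empty.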