# Deploying HPE CSI Driver for Nimble Storage on HPECP Container Platform 5.3

Prior to the Container Storage Interface (CSI), Kubernetes provided in-tree plugins to support volumes. This posed a problem because storage vendors had to align with the Kubernetes release process to fix a bug or release a new feature. It also meant that every storage vendor had its own process for presenting volumes to Kubernetes.

The Container Storage Interface (CSI) was developed as a standard for exposing block and file storage systems to containerized workloads on Container Orchestration Systems (COS) like Kubernetes. It is an initiative to unify the storage interface between COS and storage vendors, so that a single CSI driver implemented by a storage vendor is intended to work with any COS that supports CSI. This benefits both COS and storage vendors: because the interfaces are well defined, developers and future COS can implement and test CSI more easily.

The HPE CSI Driver is a multi-vendor and multi-backend driver in which each implementation has a Container Storage Provider (CSP). The HPE CSI Driver for Kubernetes uses a CSP to perform data management operations on storage resources, such as searching for a logical unit number (LUN). Using the CSP specification, any vendor or project can develop its own CSP, which makes it easier for third parties to integrate their storage solutions into Kubernetes because the HPE CSI Driver takes care of the intricacies. This document describes how to configure the HPE CSI Driver for Nimble Storage on an existing HPECP Container Platform 5.3 deployment.

# Configuring CSI Driver

Before configuring the HPE CSI Driver, the following prerequisites must be met.

Prerequisites:

- HPECP Container Platform 5.3 must be successfully deployed.
- Additional iSCSI network interfaces must be configured on the Kubernetes Compute Cluster (physical and virtual).

# Installing CSI Drivers

These object configuration files are common to all versions of Kubernetes.

Worker node IO settings:

> kubectl create -f https://raw.githubusercontent.com/hpe-storage/co-deployments/master/yaml/csi-driver/v1.3.0/hpe-linux-config.yaml

Container Storage Provider:

> kubectl create -f https://raw.githubusercontent.com/hpe-storage/co-deployments/master/yaml/csi-driver/v1.3.0/nimble-csp.yaml

Kubernetes 1.18:

> kubectl create -f https://raw.githubusercontent.com/hpe-storage/co-deployments/master/yaml/csi-driver/v1.3.0/hpe-csi-k8s-1.18.yaml
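The three `kubectl create` commands above differ only in the file name, so they can be wrapped in a small helper. The following is a minimal sketch, not part of the HPE tooling: `BASE_URL`, `manifest_urls`, and `install_csi_driver` are hypothetical names, and the version argument is assumed to match a file published in that directory (only `1.18` is confirmed by this document).

```shell
# Base URL of the v1.3.0 manifests, copied from the commands above.
BASE_URL="https://raw.githubusercontent.com/hpe-storage/co-deployments/master/yaml/csi-driver/v1.3.0"

# Print the three manifest URLs, in install order, for a Kubernetes minor version.
# Assumption: a file named hpe-csi-k8s-<version>.yaml exists for the given version.
manifest_urls() {
  k8s_version="$1"
  printf '%s\n' \
    "$BASE_URL/hpe-linux-config.yaml" \
    "$BASE_URL/nimble-csp.yaml" \
    "$BASE_URL/hpe-csi-k8s-${k8s_version}.yaml"
}

# Create each manifest in turn and stop at the first failure.
install_csi_driver() {
  manifest_urls "$1" | while read -r url; do
    kubectl create -f "$url" || exit 1
  done
}

# Example (requires access to the cluster):
#   install_csi_driver 1.18
```

Running `manifest_urls 1.18` on its own prints the URLs without touching the cluster, which is a convenient way to review what would be applied.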

# Add an HPE storage backend

Once the CSI driver is deployed, two additional objects need to be created to get started with dynamic provisioning of persistent storage: a Secret and a StorageClass.

# Secret parameters

All parameters are mandatory and described below.

| Parameter | Description |
|---|---|
| serviceName | The hostname or IP address where the Container Storage Provider (CSP) is running, usually a Kubernetes Service, such as "nimble-csp-svc" or "primera3par-csp-svc". |
| servicePort | The port on which the serviceName is listening. |
| backend | The management hostname or IP address of the actual backend storage system, such as a Nimble or 3PAR array. |
| username | Backend storage system username with the correct privileges to perform storage management. |
| password | Backend storage system password. |

# Create the secret

> vi custom-secret.yaml

    apiVersion: v1
    kind: Secret
    metadata:
      name: custom-secret
      namespace: kube-system
    stringData:
      serviceName: nimble-csp-svc
      servicePort: "8080"
      backend: 10.x.x.x
      username: xxxxx
      password: xxxxx
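Because all five parameters are mandatory, it can help to check the manifest before creating the Secret. The following is a minimal sketch using only `grep`; `check_secret_keys` is a hypothetical helper name, and the check only confirms that each key is present with a non-empty value, not that the values are correct.

```shell
# Report any mandatory Secret parameter that is missing or empty in a manifest file.
# Returns 0 when all five keys are present, 1 otherwise.
check_secret_keys() {
  file="$1"
  missing=0
  for key in serviceName servicePort backend username password; do
    # Match "key: value" at any indentation, requiring a non-blank value.
    if ! grep -Eq "^[[:space:]]*${key}:[[:space:]]*[^[:space:]]" "$file"; then
      echo "missing or empty: $key" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Example:
#   check_secret_keys custom-secret.yaml && echo "all mandatory parameters present"
```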

Create the Secret using kubectl:

> kubectl create -f custom-secret.yaml

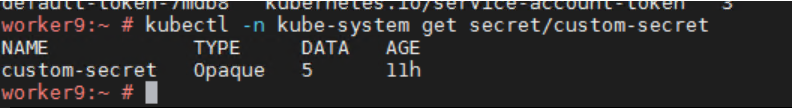

The Secret is now visible in the "kube-system" namespace.

# Create the StorageClass with the custom Secret

To use the new Secret "custom-secret", create a new StorageClass using the Secret and the necessary StorageClass parameters.

> vi StorageClass.yaml

    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: hpe-custom
    provisioner: csi.hpe.com
    parameters:
      csi.storage.k8s.io/fstype: xfs
      csi.storage.k8s.io/controller-expand-secret-name: custom-secret
      csi.storage.k8s.io/controller-expand-secret-namespace: kube-system
      csi.storage.k8s.io/controller-publish-secret-name: custom-secret
      csi.storage.k8s.io/controller-publish-secret-namespace: kube-system
      csi.storage.k8s.io/node-publish-secret-name: custom-secret
      csi.storage.k8s.io/node-publish-secret-namespace: kube-system
      csi.storage.k8s.io/node-stage-secret-name: custom-secret
      csi.storage.k8s.io/node-stage-secret-namespace: kube-system
      csi.storage.k8s.io/provisioner-secret-name: custom-secret
      csi.storage.k8s.io/provisioner-secret-namespace: kube-system
      description: "Volume created by using a custom Secret with the HPE CSI Driver for Kubernetes"
    reclaimPolicy: Delete
    allowVolumeExpansion: true

> kubectl create -f StorageClass.yaml

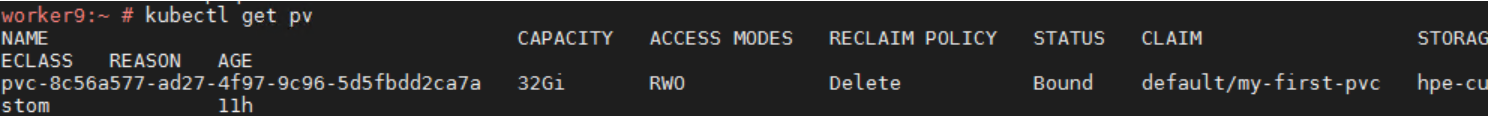
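The StorageClass repeats the same Secret name and namespace for five CSI operations, which is easy to mistype when a different Secret is used. As a rough sketch, the ten lines can be generated instead of written by hand; `secret_params` is a hypothetical helper, and its output is meant to be pasted under `parameters:` in the manifest.

```shell
# Print the ten secret-reference parameters for a StorageClass,
# one name/namespace pair per CSI operation used in the manifest above.
secret_params() {
  name="$1"
  namespace="$2"
  for op in controller-expand controller-publish node-publish node-stage provisioner; do
    printf '  csi.storage.k8s.io/%s-secret-name: %s\n' "$op" "$name"
    printf '  csi.storage.k8s.io/%s-secret-namespace: %s\n' "$op" "$namespace"
  done
}

# Example:
#   secret_params custom-secret kube-system
```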

# Create the PersistentVolumeClaim

Create a PersistentVolumeClaim. This object declaration ensures a PersistentVolume is created and provisioned.

> vi PersistentVolumeClaim.yaml

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: my-first-pvc
    spec:
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 32Gi
      storageClassName: hpe-custom

> kubectl create -f PersistentVolumeClaim.yaml
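Provisioning the volume on the array can take a moment, so it may be useful to wait until the claim reports the `Bound` phase before deploying workloads. The following is a minimal polling sketch; `wait_for_pvc_bound` is a hypothetical helper, and the attempt count and two-second interval are arbitrary choices.

```shell
# Poll a PVC until its phase is Bound, or give up after a number of attempts.
# $1 = PVC name, $2 = maximum attempts (default 30).
wait_for_pvc_bound() {
  pvc="$1"
  attempts="${2:-30}"
  i=0
  phase=""
  while [ "$i" -lt "$attempts" ]; do
    # .status.phase is Pending until a PersistentVolume is bound to the claim.
    phase="$(kubectl get pvc "$pvc" -o jsonpath='{.status.phase}')"
    if [ "$phase" = "Bound" ]; then
      echo "PVC $pvc is Bound"
      return 0
    fi
    i=$((i + 1))
    sleep 2
  done
  echo "PVC $pvc not Bound after $attempts attempts (last phase: $phase)" >&2
  return 1
}

# Example (requires access to the cluster):
#   wait_for_pvc_bound my-first-pvc
```

If the claim stays in `Pending`, `kubectl describe pvc my-first-pvc` shows the provisioning events and is usually the first place to look.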

# Create the Pods

> vi Pods.yaml

    kind: Pod
    apiVersion: v1
    metadata:
      name: my-pod
    spec:
      containers:
        - name: pod-datelog-1
          image: nginx
          command: ["/bin/sh"]
          args: ["-c", "while true; do date >> /data/mydata.txt; sleep 1; done"]
          volumeMounts:
            - name: export1
              mountPath: /data
        - name: pod-datelog-2
          image: debian
          command: ["/bin/sh"]
          args: ["-c", "while true; do date >> /data/mydata.txt; sleep 1; done"]
          volumeMounts:
            - name: export1
              mountPath: /data
      volumes:
        - name: export1
          persistentVolumeClaim:
            claimName: my-first-pvc

Create the Pod using kubectl:

> kubectl create -f Pods.yaml

Once the Pod is deployed, list the running Pods:

> kubectl get pods

The containers in this Pod use the PersistentVolume created above for their data storage, which resides on HPE Nimble Storage in the backend.
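To confirm that data is actually landing on the volume, the file written by the containers can be read back. The following is a small sketch that wraps `kubectl exec`; `tail_datelog` is a hypothetical helper, and it assumes the Pod and container names from the manifest above.

```shell
# Show the last few timestamps written to the shared volume,
# read from inside one container of the Pod.
# $1 = Pod name, $2 = container name.
tail_datelog() {
  pod="$1"
  container="$2"
  kubectl exec "$pod" -c "$container" -- tail -n 3 /data/mydata.txt
}

# Example (requires access to the cluster):
#   tail_datelog my-pod pod-datelog-1
#   tail_datelog my-pod pod-datelog-2
```

Both containers mount the same claim at `/data`, so either one should show timestamps appended by both loops.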