# Importing a plain K8s cluster into an HPECP cluster

# Introduction

This section covers deploying a plain Kubernetes cluster on openSUSE Leap and then importing it into an HPECP cluster, so that all clusters can be managed from a single pane of glass.

NOTE

The imported cluster can be made up of physical servers or it can be a virtual machine setup.

# Hardware Requirements

| Component | Specification |
|---|---|
| Platform | VMware |
| OS | openSUSE Leap 15.2 |
| Memory | 8 GB |
| CPU | 4 vCPUs |
| HDD | 150 GB |
| Network | Twentynet |
| VMs | 3 (1 master and 2 workers) |

# Installation Process for Creating Kubernetes Cluster

Follow the steps below on all nodes.

Assign IP addresses and hostnames, and make entries in DNS

Get the latest updates for your server

> zypper ref && zypper dup -y

Install Docker

> zypper in docker-18.09.6_ce

Add the following lines after "{" in the /etc/docker/daemon.json file

> vi /etc/docker/daemon.json

> "exec-opts": ["native.cgroupdriver=systemd"],

> "storage-driver": "overlay2",

Restart and enable the Docker service

> systemctl restart docker && systemctl enable docker
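On a fresh node where /etc/docker/daemon.json does not exist yet, the same two settings can be written as a complete file instead of being edited in by hand. The following is a minimal sketch under that assumption. The helper name `write_docker_daemon_json` is hypothetical, and it overwrites the target file, so merge by hand if the file already holds other settings.

```shell
#!/bin/sh
# Hypothetical helper: write a complete daemon.json containing only the two
# settings from the Docker step. Overwrites the target file.
write_docker_daemon_json() {
  target="${1:-/etc/docker/daemon.json}"
  mkdir -p "$(dirname "$target")"
  cat > "$target" <<'EOF'
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "storage-driver": "overlay2"
}
EOF
}

# Usage (as root), followed by the Docker restart:
#   write_docker_daemon_json /etc/docker/daemon.json
#   systemctl restart docker && systemctl enable docker
```

Taking the path as a parameter makes it possible to try the helper against a scratch directory before touching /etc.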

Follow the steps below on the master node.

Add firewall rules for the required ports

> firewall-cmd --permanent --add-port=6443/tcp

> firewall-cmd --permanent --add-port=2379-2380/tcp

> firewall-cmd --permanent --add-port=10250/tcp

> firewall-cmd --permanent --add-port=10251/tcp

> firewall-cmd --permanent --add-port=10252/tcp

> firewall-cmd --permanent --add-port=10255/tcp

> firewall-cmd --reload

Run the modprobe commands

> modprobe overlay

> modprobe br_netfilter

Edit /etc/sysctl.conf, add the following lines, and apply them

> vi /etc/sysctl.conf

> net.ipv4.ip_forward = 1

> net.ipv4.conf.all.forwarding = 1

> net.bridge.bridge-nf-call-iptables = 1

> sysctl -p

Add the Kubernetes repository

> zypper addrepo --type yum --gpgcheck-strict --refresh https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64 google-k8s

Add the GPG keys for the repository

> rpm --import https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg

If the command throws an error, run it two or more times until it succeeds

> rpm --import https://packages.cloud.google.com/yum/doc/yum-key.gpg
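The advice to rerun a failing key import can be scripted rather than repeated by hand. The sketch below is one possible way to do that. The `retry` function and the `RETRY_DELAY` variable are hypothetical names introduced here, not part of rpm or zypper.

```shell
#!/bin/sh
# Hypothetical helper: run a command up to N times (at least once), stopping
# at the first success. RETRY_DELAY is the pause in seconds between attempts
# (default 2).
retry() {
  attempts="$1"
  shift
  n=1
  while :; do
    "$@" && return 0
    echo "attempt $n of $attempts failed: $*" >&2
    [ "$n" -ge "$attempts" ] && return 1
    n=$((n + 1))
    sleep "${RETRY_DELAY:-2}"
  done
}

# Usage:
#   retry 3 rpm --import https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg
#   retry 3 rpm --import https://packages.cloud.google.com/yum/doc/yum-key.gpg
```

The function returns a non-zero status when every attempt fails, so a calling script can stop instead of continuing with an unsigned repository.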

Refresh the repository

> zypper refresh google-k8sInstall kubeadm, kubelet, kubectl

> zypper in -y kubelet-1.18.4 kubeadm-1.18.4 kubectl-1.18.4(When prompted press 2, 3, y)

Disable swap

> swapon -s > swapoff -aEnable kubelet service

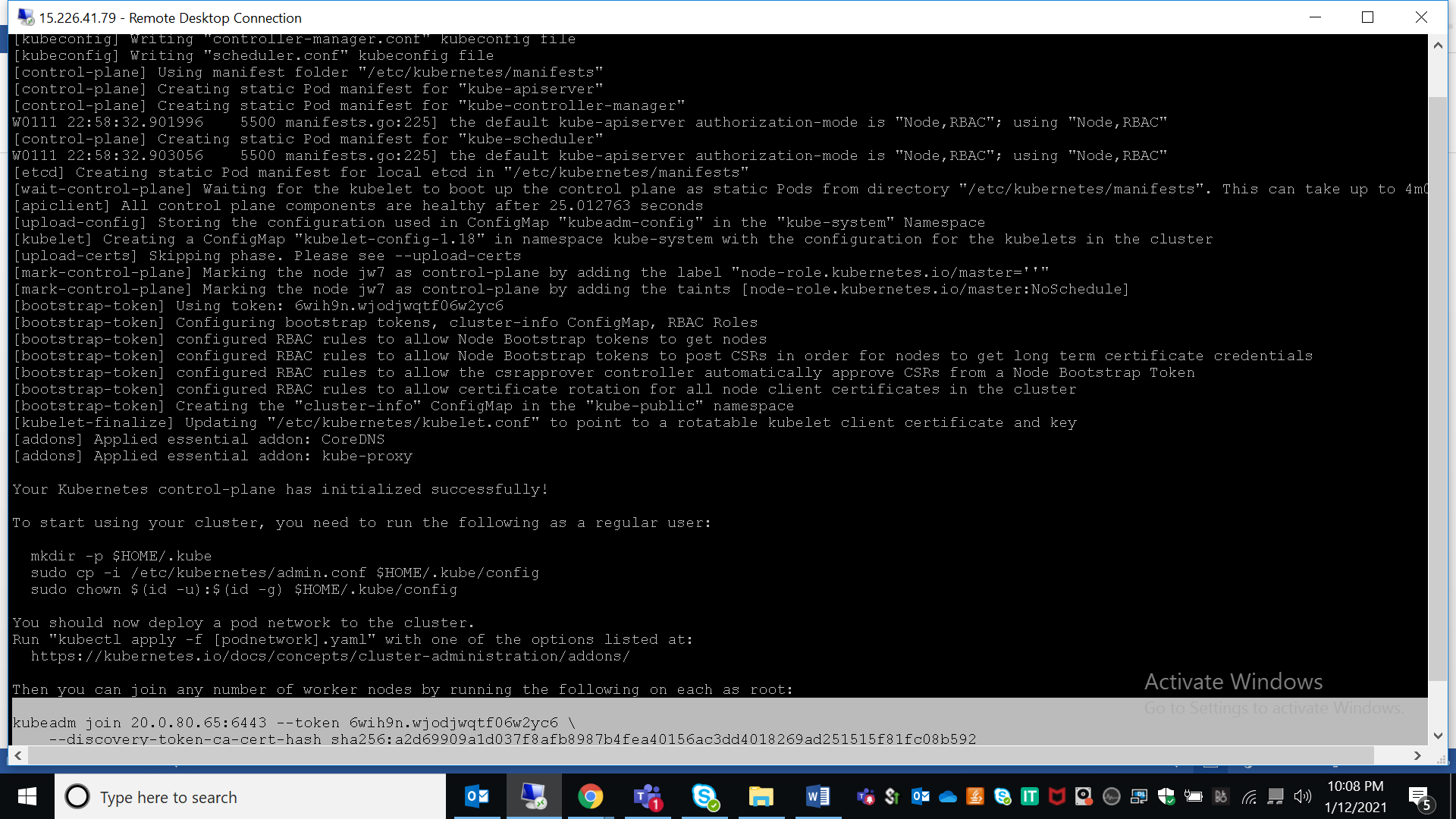

> systemctl enable kubeletInitialize kubeadm & copy the token, so it will be useful to run in worker nodes for joining the cluster

> kubeadm init(If got an error, then execute "zypper install conntrack-tools" again run kubeadm init)
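If `kubeadm init` still fails, it can help to confirm that the earlier swap and sysctl steps actually took effect. The following sketch shows one way to check both. The function names are hypothetical, and the checks read the standard Linux files /proc/swaps and /proc/sys/net/ipv4/ip_forward.

```shell
#!/bin/sh
# Hypothetical helpers for checking two prerequisites from the earlier steps.
# File paths are parameters so the functions can be tried on sample files.

# Succeeds when the swaps table lists no active swap device
# (only the header line, or nothing at all).
swap_is_off() {
  swaps_file="${1:-/proc/swaps}"
  [ "$(tail -n +2 "$swaps_file" | grep -c .)" -eq 0 ]
}

# Succeeds when IPv4 forwarding is enabled (file content is "1").
ip_forward_is_on() {
  flag_file="${1:-/proc/sys/net/ipv4/ip_forward}"
  [ "$(cat "$flag_file")" = "1" ]
}

# Usage on the node being initialized:
#   swap_is_off      || echo "swap is still enabled; run swapoff -a"
#   ip_forward_is_on || echo "net.ipv4.ip_forward is not 1; run sysctl -p"
```

These checks cover only two of the conditions kubeadm verifies, so its own error output remains the authoritative source for what went wrong.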

Allow root user to access kubectl commands

> mkdir -p $HOME/.kube > sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config > sudo chown $(id -u):$(id -g) $HOME/.kube/configDeploy the pod network (weavenet)

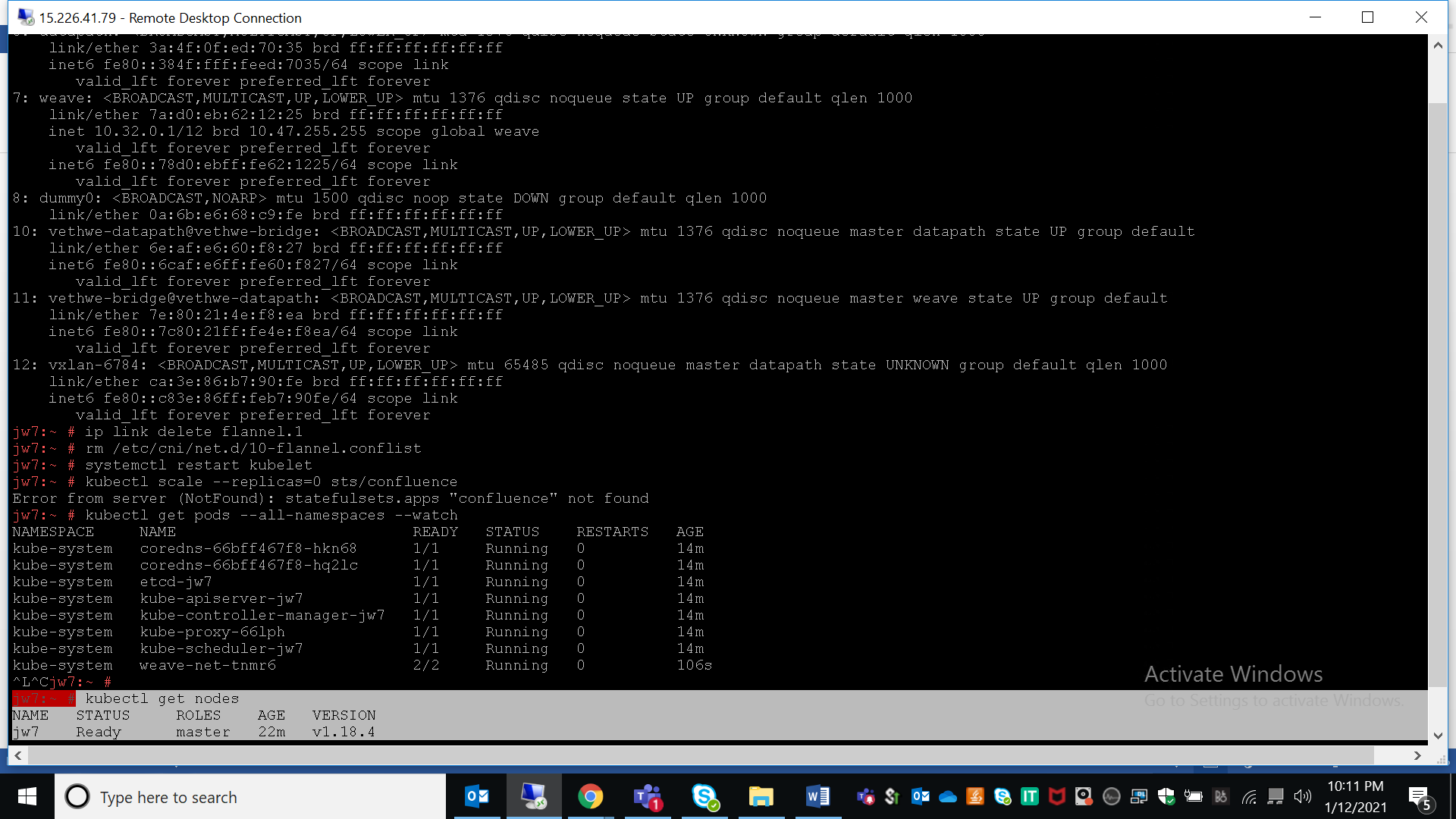

> kubectl apply -f [https://cloud.weave.works/k8s/net?k8s-version=$(kubectl version | base64 | tr -d'\n')](https://cloud.weave.works/k8s/net?k8s-version=$(kubectl%20version%20|%20base64%20|%20tr%20-d%20'\n'))Check the status of master node & pods

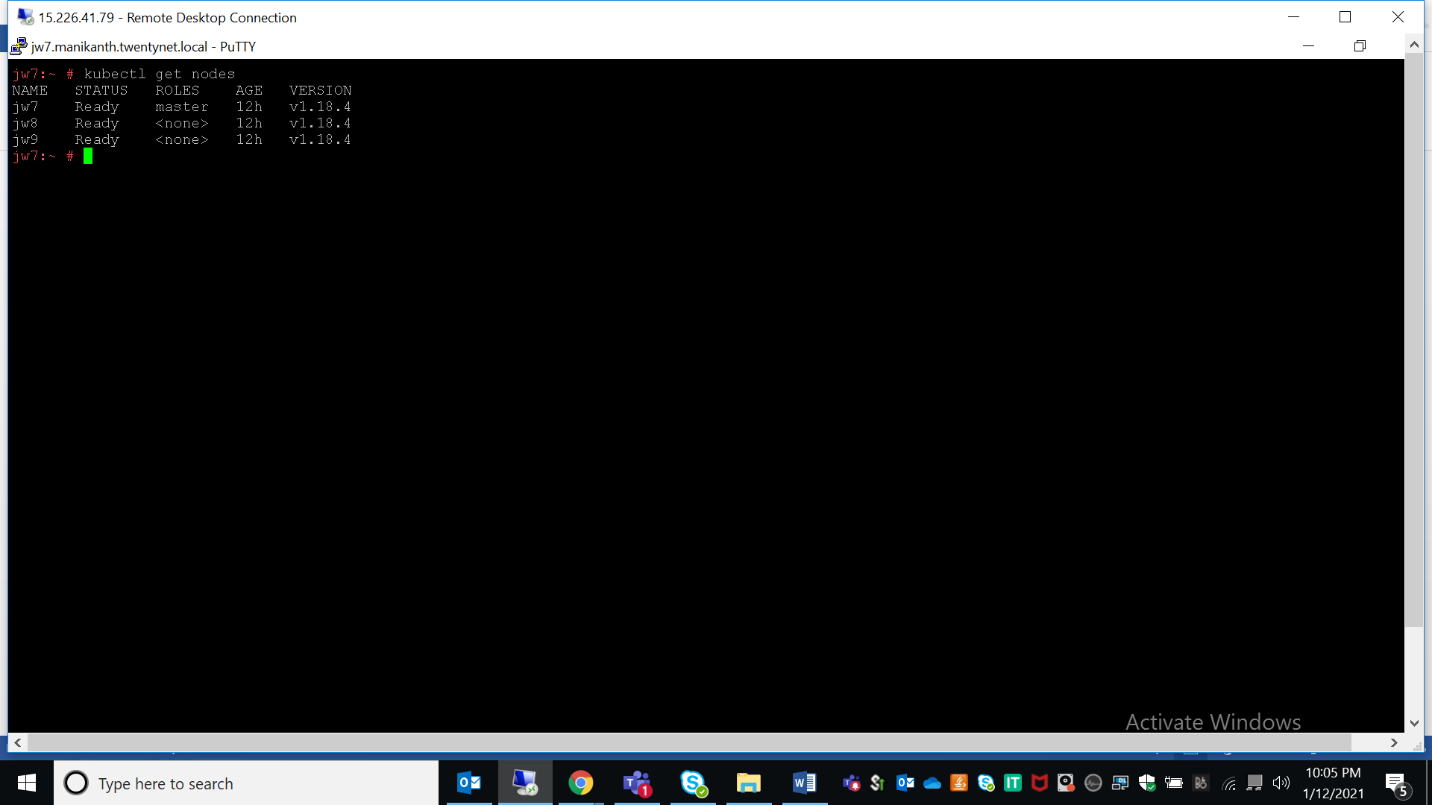

> kubectl get nodes

Follow the steps below on the worker nodes:

Add firewall rules for the required ports

> firewall-cmd --permanent --add-port=6783/tcp

> firewall-cmd --permanent --add-port=10250/tcp

> firewall-cmd --permanent --add-port=10255/tcp

> firewall-cmd --permanent --add-port=30000-32767/tcp

> firewall-cmd --reload

Run the modprobe commands

> modprobe overlay

> modprobe br_netfilter

Edit /etc/sysctl.conf, add the following lines, and apply them

> vi /etc/sysctl.conf

> net.ipv4.ip_forward = 1

> net.ipv4.conf.all.forwarding = 1

> net.bridge.bridge-nf-call-iptables = 1

> sysctl -p

Add the Kubernetes repository

> zypper addrepo --type yum --gpgcheck-strict --refresh https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64 google-k8s

Add the GPG keys for the repository

> rpm --import https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg

If the command throws an error, run it two or more times until it succeeds

> rpm --import https://packages.cloud.google.com/yum/doc/yum-key.gpg

Refresh the repository

> zypper refresh google-k8s

Install kubeadm, kubelet, and kubectl

> zypper in -y kubelet-1.18.4 kubeadm-1.18.4 kubectl-1.18.4

(When prompted, press 2, 3, y)

Disable swap

> swapon --s > swapoff --aEnable kubelet service

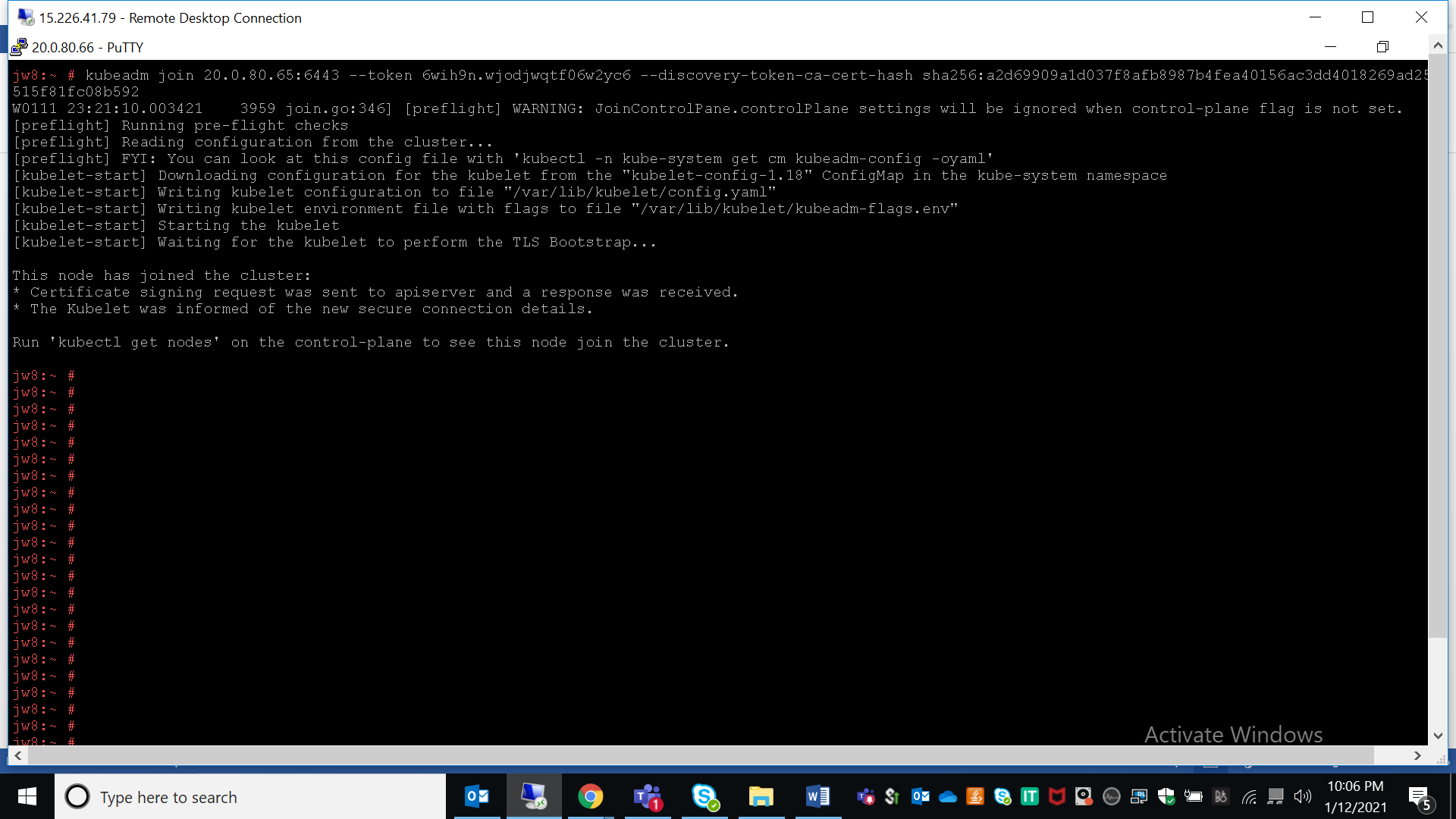

> systemctl enable kubeletPaste the token copied from master node

Now execute following command on master node to see if worker nodes joined the cluster

> kubectl get nodes
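Nodes usually take a short while to move from NotReady to Ready after joining. As a rough sketch, the node list can be reduced to a single number for scripting. The helper name `count_not_ready_nodes` is hypothetical, and it assumes the default column layout of `kubectl get nodes`, where STATUS is the second column.

```shell
#!/bin/sh
# Hypothetical helper: read `kubectl get nodes --no-headers` output on stdin
# and print how many nodes have a STATUS other than exactly "Ready".
count_not_ready_nodes() {
  awk '$2 != "Ready" { n++ } END { print n + 0 }'
}

# Usage on the master node:
#   kubectl get nodes --no-headers | count_not_ready_nodes
```

A result of 0 means every listed node reports Ready. A cordoned node (status "Ready,SchedulingDisabled") is counted as not ready by this simple comparison.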

# Installation Process for Importing Kubernetes Cluster

Follow below steps on Master nodes:

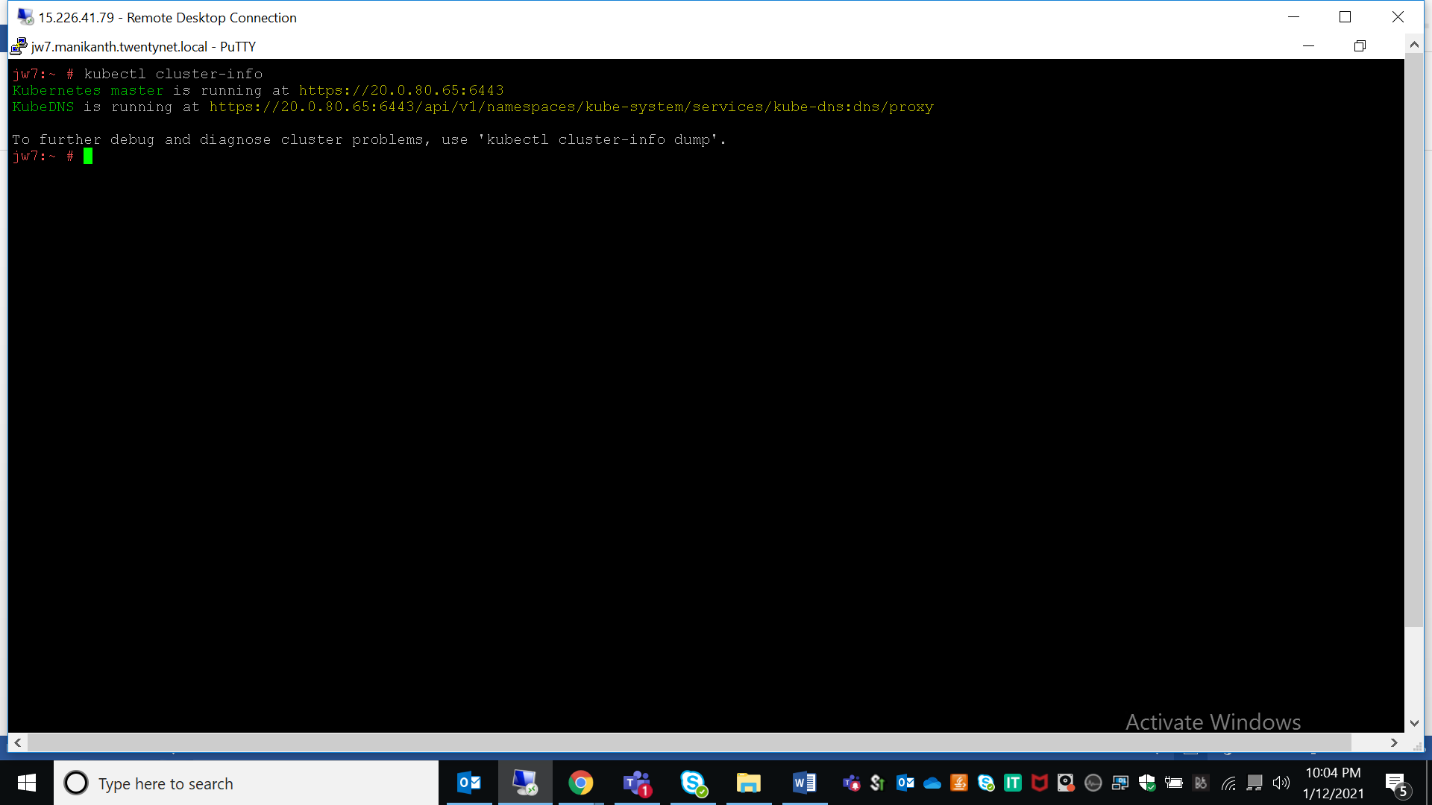

1.Execute the following command to get Kubernetes cluster URL

> kubectl cluster-info
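The output of `kubectl cluster-info` is meant for reading and may contain terminal color codes, which makes copying the URL error-prone. The sketch below pulls out the first URL from that output. The helper name `extract_server_url` is hypothetical, and it assumes the API server line comes first, as it normally does.

```shell
#!/bin/sh
# Hypothetical helper: read `kubectl cluster-info` output on stdin, strip
# ANSI color codes, and print the first http(s) URL found.
extract_server_url() {
  esc=$(printf '\033')
  sed "s/${esc}\[[0-9;]*m//g" | grep -Eo 'https?://[^[:space:]]+' | head -n 1
}

# Usage on the master node:
#   kubectl cluster-info | extract_server_url
#
# An alternative that reads the URL from the kubeconfig instead:
#   kubectl config view --minify -o jsonpath='{.clusters[0].cluster.server}'
```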

2. Create a service account and a cluster role binding, then export the CA certificate and token

> kubectl create serviceaccount NAME_OF_YOUR_CHOICE

Ex: kubectl create serviceaccount hpe

> kubectl create clusterrolebinding add-on-cluster-admin --clusterrole=cluster-admin --serviceaccount=default:NAME_OF_YOUR_CHOICE

Ex: kubectl create clusterrolebinding add-on-cluster-admin --clusterrole=cluster-admin --serviceaccount=default:hpe

> SA_TOKEN=$(kubectl get serviceaccount/NAME_OF_YOUR_CHOICE -o jsonpath='{.secrets[0].name}')

Ex: SA_TOKEN=$(kubectl get serviceaccount/hpe -o jsonpath='{.secrets[0].name}')

> kubectl get secret $SA_TOKEN -o jsonpath='{.data.token}' > token.base64

> kubectl get secret $SA_TOKEN -o jsonpath='{.data.ca\.crt}' > ca.crt.base64

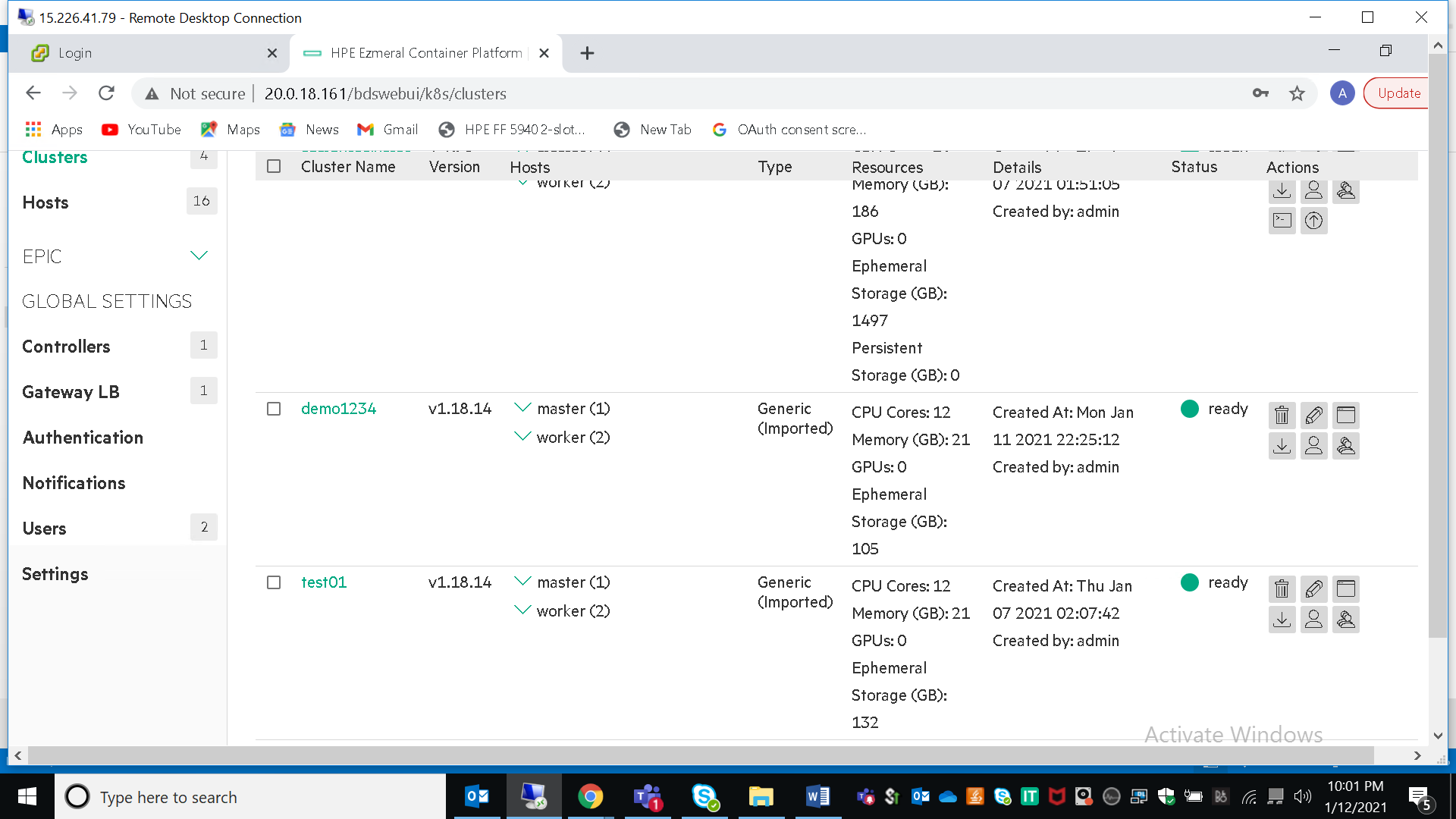

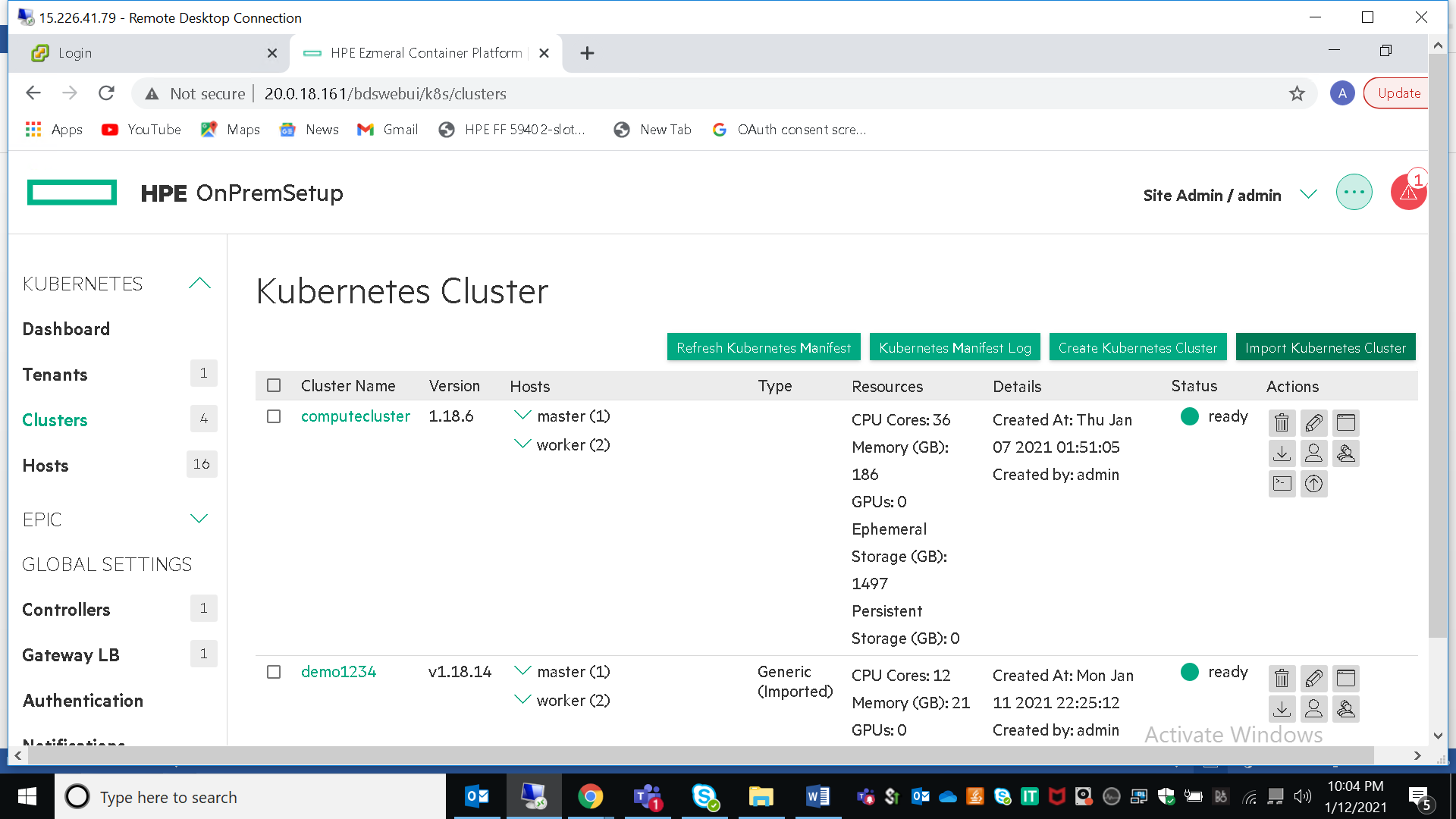
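Before pasting the two files into the import form, it may be worth checking that each one is non-empty and really contains base64 data, since an empty `SA_TOKEN` produces empty output files. The following is a small sketch of such a check. The helper name `is_valid_base64_file` is hypothetical, and it assumes the GNU coreutils `base64` tool.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if the file is non-empty and its content
# decodes as base64 without error.
is_valid_base64_file() {
  [ -s "$1" ] && base64 -d < "$1" > /dev/null 2>&1
}

# Usage in the directory holding the two files:
#   is_valid_base64_file token.base64  || echo "token.base64 looks wrong"
#   is_valid_base64_file ca.crt.base64 || echo "ca.crt.base64 looks wrong"
```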

3. Open the HPECP controller UI. In the left-hand menu, go to Kubernetes, click Clusters, and then click "Import Cluster"

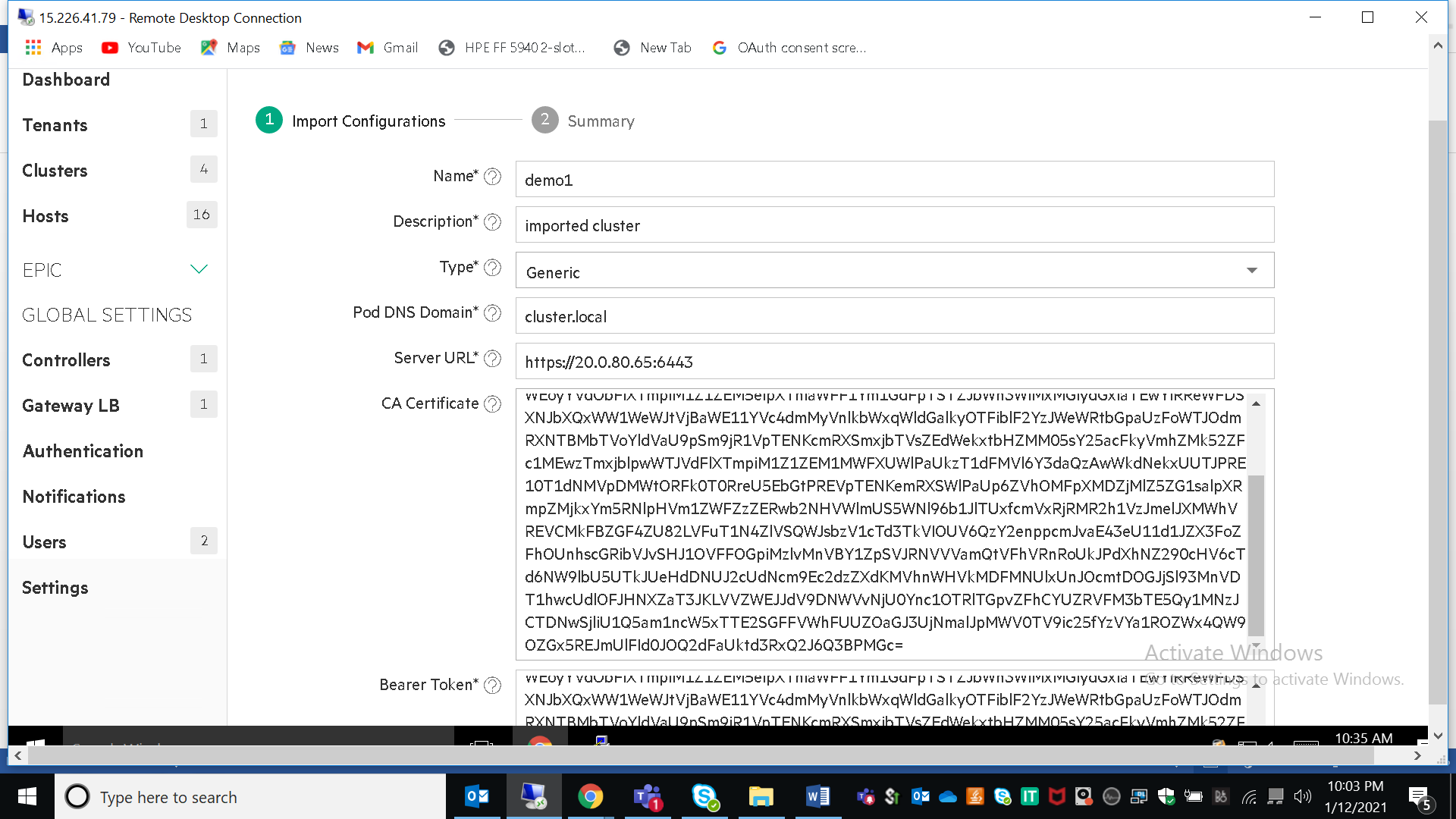

4. Fill in the following details

A) Name the cluster

B) Enter a description

C) Set Type to "generic"

D) Set Pod DNS name to "cluster.local"

E) Server URL: use the URL returned in Step 1

F) CA Certificate and Bearer Token: paste the contents of ca.crt.base64 and token.base64 created in Step 2

5. Click Next, then click Submit. After some time, the cluster status should show as ready