# IPI deployment of OCP on Bare Metal

# Introduction

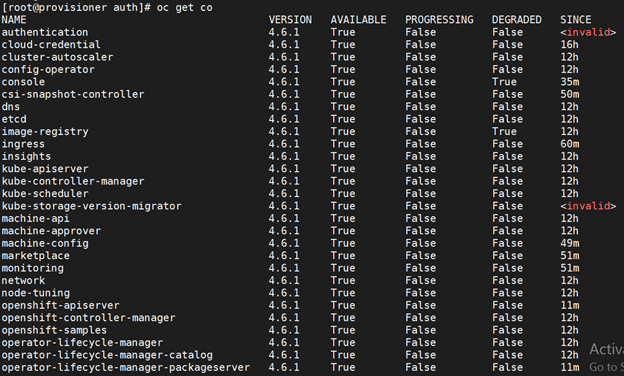

Installer-provisioned Infrastructure (IPI) provides a full-stack installation and setup of the OpenShift Container Platform (OCP). It creates a bootstrap node that takes care of deploying the cluster.

On bare metal, installer-provisioned infrastructure creates a bootstrap VM on the provisioner node. The role of the bootstrap VM is to assist in deploying the OpenShift Container Platform cluster. The bootstrap VM connects to the baremetal network through a network bridge on the provisioner node.

When the OpenShift Container Platform control plane nodes are installed and fully operational, the installer automatically destroys the bootstrap VM and moves the virtual IP addresses (VIPs) to the appropriate nodes: the API VIP moves to the control plane nodes and the Ingress VIP moves to the worker nodes.

# High Level Architecture

Figure 72. Installer-provisioned Infrastructure Installation High Level Architecture

# Prerequisites

- One provisioner node with RHEL 8.1 installed
- Three control plane nodes
- At least two worker nodes
- Baseboard Management Controller (BMC) access to each node
- At least one network (the baremetal network)
- DNS and DHCP configured for the baremetal network

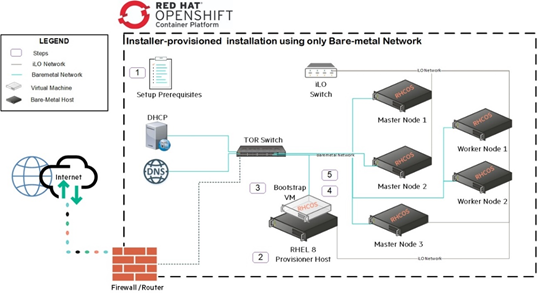

# Flow Diagram

Figure 73. Installer-provisioned Infrastructure Installation Solution Flow Diagram

# Deployment Process

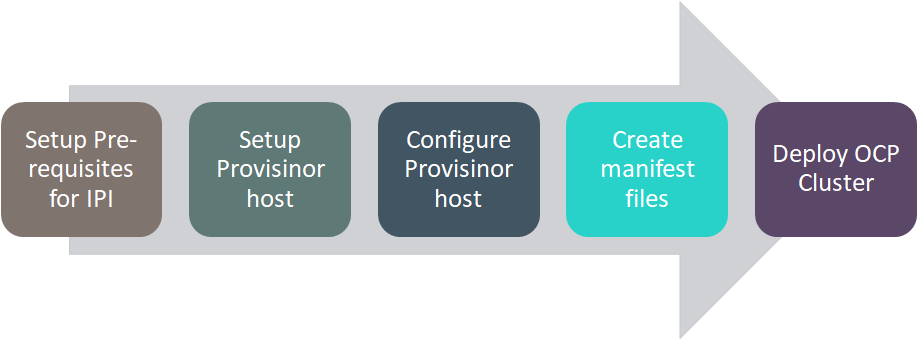

# Add DNS records

Create DNS records for the API VIP (api), the Ingress VIP (the *.apps wildcard), and the master and worker nodes, as shown below.

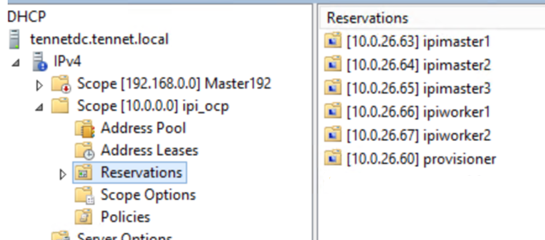
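Before moving on, it helps to list every name the cluster will need to resolve. The hypothetical helper below (not part of the installer) builds those names from the cluster name and base domain used in install-config.yaml later in this guide (ipi and tennet.local). It assumes that the node DNS names match the host names in the hosts list, and it uses test.apps as one sample name that the *.apps wildcard record must cover.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the DNS names this IPI cluster should resolve.
# Assumes cluster name "ipi", base domain "tennet.local" and node names
# taken from the hosts list in install-config.yaml; adjust to your setup.
set -euo pipefail

CLUSTER_NAME="ipi"
BASE_DOMAIN="tennet.local"
NODES=(ipimaster1 ipimaster2 ipimaster3 ipiworker1 ipiworker2)

expected_dns_names() {
  local suffix="${CLUSTER_NAME}.${BASE_DOMAIN}"
  local node
  # Record that must point at the API VIP
  echo "api.${suffix}"
  # Any name under *.apps should resolve to the Ingress VIP; "test" is
  # only a sample label used to exercise the wildcard record.
  echo "test.apps.${suffix}"
  # One record per control plane and worker node
  for node in "${NODES[@]}"; do
    echo "${node}.${suffix}"
  done
}
```

On the provisioner (dig is provided by the bind-utils package), a name that prints no address after the arrow points at a missing record:

> for n in $(expected_dns_names); do echo "$n -> $(dig +short "$n")"; done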

# Add DHCP records

Create DHCP reservations for the provisioner, master and worker nodes using their MAC addresses, as shown below.

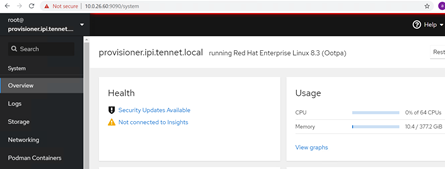
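If the DHCP server is ISC dhcpd (an assumption; the screenshot may show a different server), each reservation ties a node's MAC address (the bootMACAddress in install-config.yaml) to a fixed address. The hypothetical helper below prints one such host stanza and rejects malformed MAC addresses.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print an ISC dhcpd "host" reservation for one node.
# Assumes an ISC DHCP server (dhcpd.conf syntax); other servers differ.
set -euo pipefail

dhcp_host_entry() {
  local name="$1" mac="$2" ip="$3"
  local mac_re='^([0-9A-Fa-f]{2}:){5}[0-9A-Fa-f]{2}$'
  # Refuse anything that is not six colon-separated hex octets
  if [[ ! $mac =~ $mac_re ]]; then
    echo "invalid MAC address for ${name}: ${mac}" >&2
    return 1
  fi
  printf 'host %s {\n' "$name"
  printf '  hardware ethernet %s;\n' "${mac,,}"   # normalise to lowercase
  printf '  fixed-address %s;\n' "$ip"
  printf '  option host-name "%s";\n' "$name"
  printf '}\n'
}
```

Example call; 192.0.2.11 is a placeholder from the documentation address range, so substitute the address actually reserved for the node:

> dhcp_host_entry ipimaster1 AA:5F:88:80:00:F4 192.0.2.11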

# Preparing the provisioner node

Once the provisioner node has booted RHEL 8.1, update it to the latest packages. Then perform the following steps to prepare the node for the OCP deployment.

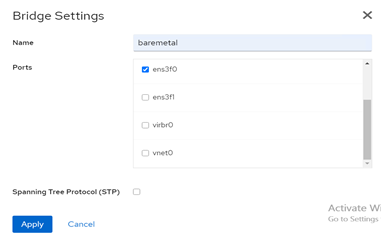

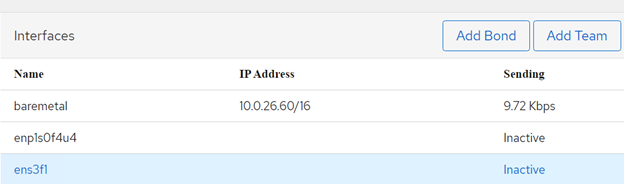
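Because the bootstrap VM runs under libvirt/KVM on this node, a quick pre-flight check can confirm both the OS release and hardware virtualization support. The two functions below are a hypothetical sketch; the file paths are parameters only so the checks can be exercised against sample files.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight checks for the provisioner node.
set -euo pipefail

# Succeeds when the given os-release file describes RHEL 8.x.
is_rhel8() {
  local file="${1:-/etc/os-release}"
  (
    # os-release is a shell-compatible KEY=value file; source it in a
    # subshell so its variables do not leak into the caller
    . "$file"
    [[ "${ID:-}" == "rhel" && "${VERSION_ID:-}" == 8* ]]
  )
}

# Succeeds when the CPU advertises Intel VT-x (vmx) or AMD-V (svm),
# which KVM needs to run the bootstrap VM.
has_hw_virt() {
  local cpuinfo="${1:-/proc/cpuinfo}"
  grep -Eq '\b(vmx|svm)\b' "$cpuinfo"
}
```

On the provisioner, run the checks and then update the packages (reboot afterwards if a new kernel was installed):

> is_rhel8 && has_hw_virt && sudo dnf upgrade -y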

# Create baremetal bridge

The IPI installation process creates the bootstrap VM on the provisioner node, so the node needs a bridged network.

Create a bridge named baremetal from the existing Ethernet interface (ens3f0) using Cockpit:

a. Enable Cockpit on RHEL 8:

> systemctl enable --now cockpit.socket

b. Open the Cockpit web console in a browser (by default on port 9090 of the provisioner node).

c. Open the Networking menu and click Add Bridge.

d. In the pop-up window, enter the name "baremetal" and select the relevant port on your system (ens3f0 in this setup).

e. Click Apply. The baremetal interface is created.
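The result of the Cockpit steps can be checked from the shell. This sketch relies on Linux sysfs behaviour: a bridge device has a bridge/ directory, and each port attached to it has a master symlink pointing at the bridge. The third parameter (the sysfs root) is a hypothetical addition that only exists to make the function testable.

```shell
#!/usr/bin/env bash
# Hypothetical check that a port is attached to the expected bridge,
# based on /sys/class/net: bridges have "bridge/", ports have "master".
set -euo pipefail

is_bridge_port() {
  local port="$1" bridge="$2" sysfs="${3:-/sys/class/net}"
  # The bridge device itself must exist and be a bridge
  [[ -d "${sysfs}/${bridge}/bridge" ]] || return 1
  # The port must be enslaved to some master device...
  [[ -L "${sysfs}/${port}/master" ]] || return 1
  # ...and that master must be the bridge we expect
  [[ "$(basename "$(readlink "${sysfs}/${port}/master")")" == "$bridge" ]]
}
```

Usage on the provisioner; iproute2 gives the same information interactively:

> is_bridge_port ens3f0 baremetal && ip link show master baremetal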

# Create a non-root user (kni) with sudo privileges and generate ssh key

> useradd kni

> passwd kni

> echo "kni ALL=(root) NOPASSWD:ALL" | tee -a /etc/sudoers.d/kni

> chmod 0440 /etc/sudoers.d/kni

> su - kni -c "ssh-keygen -t rsa -f /home/kni/.ssh/id_rsa -N ''"
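The tee -a step above appends, so running it twice leaves a duplicated rule in /etc/sudoers.d/kni. A minimal idempotent sketch, assuming it is run as root, rewrites the file instead and sets the same 0440 mode in one step. The function name and the directory parameter are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch of an idempotent variant of the sudoers step: the drop-in is
# rewritten (not appended to), so re-running does not duplicate the rule.
set -euo pipefail

write_sudoers_dropin() {
  local user="$1" dir="${2:-/etc/sudoers.d}"
  local tmp
  tmp="$(mktemp)"
  printf '%s ALL=(root) NOPASSWD:ALL\n' "$user" > "$tmp"
  # install copies the file and sets mode 0440 (same as the chmod above)
  install -m 0440 "$tmp" "${dir}/${user}"
  rm -f "$tmp"
}
```

After writing the file, visudo can syntax-check it:

> visudo -cf /etc/sudoers.d/kni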

- Log in as kni and perform the following steps:

> su - kni

> sudo subscription-manager register --username=<username> --password=<password> --auto-attach

> sudo subscription-manager repos --enable=rhel-8-for-x86_64-appstream-rpms --enable=rhel-8-for-x86_64-baseos-rpms

> sudo dnf install -y libvirt qemu-kvm mkisofs python3-devel jq ipmitool

> sudo usermod --append --groups libvirt kni

> sudo systemctl start firewalld

> sudo firewall-cmd --zone=public --add-service=http --permanent

> sudo firewall-cmd --reload

> sudo systemctl start libvirtd

> sudo systemctl enable libvirtd --now
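A quick way to confirm the packages above landed is to check that the commands used later are on PATH. The package-to-command mapping here is an assumption (for example, virsh comes with the libvirt client package and mkisofs may be provided by genisoimage), and the helper is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical check that required commands are on PATH.
# Prints each missing command on its own line and fails if any are missing.
set -euo pipefail

missing_commands() {
  local cmd
  local -a missing=()
  for cmd in "$@"; do
    command -v "$cmd" >/dev/null 2>&1 || missing+=("$cmd")
  done
  if (( ${#missing[@]} > 0 )); then
    printf '%s\n' "${missing[@]}"
    return 1
  fi
}
```

Usage as kni; the group check matters because the usermod change only applies to new login sessions:

> missing_commands virsh jq ipmitool mkisofs firewall-cmd && id -nG kni | grep -qw libvirt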

# Create the default storage pool and start it.

> sudo virsh pool-define-as --name default --type dir --target /var/lib/libvirt/images

> sudo virsh pool-start default

> sudo virsh pool-autostart default
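To confirm that the pool is both running and set to autostart, the output of virsh pool-list --all can be checked. This sketch assumes the usual three-column layout of that command (Name, State, Autostart) and reads it from stdin.

```shell
#!/usr/bin/env bash
# Hypothetical check on `virsh pool-list --all` output read from stdin:
# succeeds only if the named pool is active and marked for autostart.
set -euo pipefail

pool_ready() {
  local pool="${1:-default}"
  # Columns assumed: Name State Autostart; header lines never match
  awk -v p="$pool" '$1 == p && $2 == "active" && $3 == "yes" { ok = 1 } END { exit !ok }'
}
```

Usage:

> sudo virsh pool-list --all | pool_ready default && echo "default pool is ready"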

# Create a pull-secret.txt file

Copy the pull secret from the Red Hat website and paste its contents into pull-secret.txt in the kni user's home directory:

> vim ~/pull-secret.txt
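A paste that picked up stray characters will only fail much later, during the installation. This hypothetical check parses the file as JSON and requires a non-empty "auths" object, which is how the pull secret is structured. It uses python3 for the parsing; `jq -e '.auths | length > 0' ~/pull-secret.txt` is an equivalent one-liner with the jq package installed earlier.

```shell
#!/usr/bin/env bash
# Hypothetical sanity check for the pasted pull secret: it must be valid
# JSON containing a non-empty "auths" object.
set -euo pipefail

check_pull_secret() {
  local file="${1:-$HOME/pull-secret.txt}"
  python3 - "$file" <<'PY'
import json
import sys

try:
    with open(sys.argv[1]) as f:
        data = json.load(f)
except (OSError, ValueError) as exc:  # missing file or malformed JSON
    sys.exit(f"cannot parse pull secret: {exc}")
auths = data.get("auths") if isinstance(data, dict) else None
sys.exit(0 if isinstance(auths, dict) and auths else 1)
PY
}
```

Usage:

> check_pull_secret ~/pull-secret.txt && echo "pull secret looks valid"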

# Retrieving the OpenShift Container Platform installer

# Set the environment variables:

> export VERSION=latest-4.6

> export RELEASE_IMAGE=$(curl -s https://mirror.openshift.com/pub/openshift-v4/clients/ocp/$VERSION/release.txt | grep 'Pull From: quay.io' | awk -F ' ' '{print $3}')

> echo $RELEASE_IMAGE

> export cmd=openshift-baremetal-install

> export pullsecret_file=~/pull-secret.txt

> export extract_dir=$(pwd)

> echo $cmd

> echo $pullsecret_file

> echo $extract_dir
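The RELEASE_IMAGE one-liner above silently exports an empty value if the download or the match fails, and the extract step then fails with a less obvious error. A hedged sketch of the same parsing that fails loudly instead (the function name is hypothetical):

```shell
#!/usr/bin/env bash
# Sketch: extract the release image from release.txt text on stdin and
# fail if no "Pull From: quay.io" line is present.
set -euo pipefail

release_image_from() {
  local image
  # Third whitespace-separated field of the first matching line
  image="$(awk '/Pull From: quay\.io/ { print $3; exit }')"
  if [[ -z "$image" ]]; then
    echo "no 'Pull From: quay.io' line found" >&2
    return 1
  fi
  printf '%s\n' "$image"
}
```

Usage (the assignment is kept separate from export so that a failure is not masked):

> RELEASE_IMAGE=$(curl -s https://mirror.openshift.com/pub/openshift-v4/clients/ocp/$VERSION/release.txt | release_image_from) && export RELEASE_IMAGE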

# Get the oc binary:

> curl -s https://mirror.openshift.com/pub/openshift-v4/clients/ocp/$VERSION/openshift-client-linux.tar.gz | tar zxvf - oc

> sudo cp oc /usr/local/bin

# Extract the installer

> oc adm release extract --registry-config "${pullsecret_file}" --command=$cmd --to "${extract_dir}" ${RELEASE_IMAGE}

> sudo cp openshift-baremetal-install /usr/local/bin/

# Creating an RHCOS images cache

# Installing podman and configuring cache

> sudo dnf install -y podman

> sudo firewall-cmd --add-port=8080/tcp --zone=public --permanent

> sudo firewall-cmd --reload

> mkdir /home/kni/rhcos_image_cache

> sudo semanage fcontext -a -t httpd_sys_content_t "/home/kni/rhcos_image_cache(/.*)?"

> sudo restorecon -Rv rhcos_image_cache/

> export COMMIT_ID=$(/usr/local/bin/openshift-baremetal-install version | grep '^built from commit' | awk '{print $4}')

> export RHCOS_OPENSTACK_URI=$(curl -s -S https://raw.githubusercontent.com/openshift/installer/$COMMIT_ID/data/data/rhcos.json | jq -r .images.openstack.path)

> echo $COMMIT_ID

> echo $RHCOS_OPENSTACK_URI

> export RHCOS_QEMU_URI=$(curl -s -S https://raw.githubusercontent.com/openshift/installer/$COMMIT_ID/data/data/rhcos.json | jq -r .images.qemu.path)

> export RHCOS_PATH=$(curl -s -S https://raw.githubusercontent.com/openshift/installer/$COMMIT_ID/data/data/rhcos.json | jq -r .baseURI)

> export RHCOS_QEMU_SHA_UNCOMPRESSED=$(curl -s -S https://raw.githubusercontent.com/openshift/installer/$COMMIT_ID/data/data/rhcos.json | jq -r '.images.qemu["uncompressed-sha256"]')

> export RHCOS_OPENSTACK_SHA_COMPRESSED=$(curl -s -S https://raw.githubusercontent.com/openshift/installer/$COMMIT_ID/data/data/rhcos.json | jq -r '.images.openstack.sha256')
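If COMMIT_ID comes back empty, the rhcos.json URL no longer points at a real file and every variable that depends on it ends up empty, while a missing key makes jq -r print the literal string null. A small hypothetical guard, using bash indirect expansion, catches both cases before the downloads start.

```shell
#!/usr/bin/env bash
# Hypothetical guard: fail if any named variable is unset, empty or "null".
set -euo pipefail

require_vars() {
  local name bad=0
  for name in "$@"; do
    # ${!name} is bash indirect expansion: the value of the variable named $name
    if [[ -z "${!name:-}" || "${!name:-}" == "null" ]]; then
      echo "ERROR: ${name} is empty or null" >&2
      bad=1
    fi
  done
  return "$bad"
}
```

Usage:

> require_vars COMMIT_ID RHCOS_PATH RHCOS_QEMU_URI RHCOS_OPENSTACK_URI RHCOS_QEMU_SHA_UNCOMPRESSED RHCOS_OPENSTACK_SHA_COMPRESSED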

# Download the images and place them in the /home/kni/rhcos_image_cache directory.

> cd /home/kni/rhcos_image_cache

> wget ${RHCOS_PATH}${RHCOS_QEMU_URI}

> wget ${RHCOS_PATH}${RHCOS_OPENSTACK_URI}
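The checksums exported earlier make it possible to verify the downloads. Going by the variable names, the OpenStack checksum covers the compressed file and the QEMU checksum covers the uncompressed image; this sketch assumes the QEMU image is gzip-compressed and streams it through gzip -dc before hashing. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: compare a downloaded image with an expected SHA-256.
# mode "compressed" hashes the file as-is; "uncompressed" hashes the
# gzip-decompressed stream (assumed format of the QEMU image).
set -euo pipefail

sha256_matches() {
  local file="$1" expected="$2" mode="${3:-compressed}" actual
  if [[ "$mode" == "uncompressed" ]]; then
    actual="$(gzip -dc -- "$file" | sha256sum | awk '{print $1}')"
  else
    actual="$(sha256sum -- "$file" | awk '{print $1}')"
  fi
  [[ "$actual" == "$expected" ]]
}
```

Usage in /home/kni/rhcos_image_cache:

> sha256_matches "$(basename "$RHCOS_OPENSTACK_URI")" "$RHCOS_OPENSTACK_SHA_COMPRESSED" && sha256_matches "$(basename "$RHCOS_QEMU_URI")" "$RHCOS_QEMU_SHA_UNCOMPRESSED" uncompressed && echo "checksums OK"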

# Confirm SELinux type is of httpd_sys_content_t for the newly created files.

> cd

> ls -Z /home/kni/rhcos_image_cache

# Create the container that serves the image cache

> podman run -d --name rhcos_image_cache -v /home/kni/rhcos_image_cache:/var/www/html -p 8080:8080/tcp registry.centos.org/centos/httpd-24-centos7:latest
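In Red Hat's IPI documentation for this release, the installer is pointed at such a cache through bootstrapOSImage (QEMU image, uncompressed checksum) and clusterOSImage (OpenStack image, compressed checksum) under platform.baremetal in install-config.yaml. The sample configuration below does not set them, so treat the field names as an assumption to verify against the documentation for your exact version. MIRROR_HOST is a hypothetical variable holding the provisioner's address on the baremetal network; the other variables are the ones exported above.

```shell
#!/usr/bin/env bash
# Sketch: print install-config.yaml lines that point the installer at the
# local image cache (port 8080, as published by the podman run above).
# With set -u, an unset RHCOS_* variable aborts instead of producing a
# broken URL.
set -euo pipefail

os_image_lines() {
  local host="$1"
  # Indented four spaces so the lines sit under platform.baremetal
  printf '    bootstrapOSImage: http://%s:8080/%s?sha256=%s\n' \
    "$host" "$RHCOS_QEMU_URI" "$RHCOS_QEMU_SHA_UNCOMPRESSED"
  printf '    clusterOSImage: http://%s:8080/%s?sha256=%s\n' \
    "$host" "$RHCOS_OPENSTACK_URI" "$RHCOS_OPENSTACK_SHA_COMPRESSED"
}
```

Usage; the curl call should report HTTP 200 if the container serves the file:

> os_image_lines "$MIRROR_HOST" && curl -sI "http://$MIRROR_HOST:8080/$(basename "$RHCOS_QEMU_URI")" | head -n1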

# Create install-config.yaml file

Create install-config.yaml with the following content, adjusting the values for your environment:

> vi install-config.yaml

    apiVersion: v1
    baseDomain: tennet.local
    metadata:
      name: ipi
    networking:
      machineCIDR: 10.0.0.0/16
      networkType: OVNKubernetes
    compute:
    - name: worker
      replicas: 2
    controlPlane:
      name: master
      replicas: 3
      platform:
        baremetal: {}
    platform:
      baremetal:
        apiVIP: 10.0.26.61
        ingressVIP: 10.0.26.62
        provisioningNetwork: "Disabled"
        provisioningHostIP: 10.0.26.69
        bootstrapProvisioningIP: 10.0.26.68
        hosts:
          - name: ipimaster1
            role: master
            bmc:
              address: redfish-virtualmedia://10.0.5.28/redfish/v1/Systems/1
              username: ajuser
              password: test123456
              disableCertificateVerification: True
            bootMACAddress: AA:5F:88:80:00:F4
            rootDeviceHints:
              deviceName: "/dev/sdb"
          - name: ipimaster2
            role: master
            bmc:
              address: redfish-virtualmedia://10.0.5.32/redfish/v1/Systems/1
              username: ajuser
              password: test123456
              disableCertificateVerification: True
            bootMACAddress: AA:5F:88:80:00:E7
            rootDeviceHints:
              deviceName: "/dev/sdb"
          - name: ipimaster3
            role: master
            bmc:
              address: redfish-virtualmedia://10.0.5.35/redfish/v1/Systems/1
              username: ajuser
              password: test123456
              disableCertificateVerification: True
            bootMACAddress: AA:5F:88:80:00:E8
            rootDeviceHints:
              deviceName: "/dev/sdb"
          - name: ipiworker1
            role: worker
            bmc:
              address: redfish-virtualmedia://10.0.5.26/redfish/v1/Systems/1
              username: ajuser
              password: test123456
              disableCertificateVerification: True
            bootMACAddress: AA:5F:88:80:00:EA
            rootDeviceHints:
              deviceName: "/dev/sdb"
          - name: ipiworker2
            role: worker
            bmc:
              address: redfish-virtualmedia://10.0.5.29/redfish/v1/Systems/1
              username: ajuser
              password: test123456
              disableCertificateVerification: True
            bootMACAddress: AA:5F:88:80:00:E9
            rootDeviceHints:
              deviceName: "/dev/sdb"
    pullSecret: ''
    sshKey: ''
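The sample leaves pullSecret and sshKey empty. The installer needs the pull secret saved earlier in ~/pull-secret.txt, and sshKey is where the public key generated for kni goes if you want SSH access to the nodes. This hypothetical helper fills both fields in place, writing each value single-quoted on one line; it refuses values that contain a single quote rather than trying to escape them.

```shell
#!/usr/bin/env bash
# Sketch: fill the top-level pullSecret/sshKey fields of install-config.yaml.
# Values are passed to awk through the environment (ENVIRON), so JSON
# characters such as / & " are copied verbatim.
set -euo pipefail

fill_secrets() {
  local config="$1" secret_file="$2" key_file="$3"
  local secret key
  secret="$(tr -d '\n' < "$secret_file")"   # pull secret must be one line
  key="$(< "$key_file")"
  if [[ "$secret" == *"'"* || "$key" == *"'"* ]]; then
    echo "refusing to quote a value that contains a single quote" >&2
    return 1
  fi
  PULL_SECRET="$secret" SSH_KEY="$key" awk -v q="'" '
    /^pullSecret:/ { print "pullSecret: " q ENVIRON["PULL_SECRET"] q; next }
    /^sshKey:/     { print "sshKey: " q ENVIRON["SSH_KEY"] q; next }
    { print }
  ' "$config" > "${config}.tmp"
  mv "${config}.tmp" "$config"
}
```

Usage as kni:

> fill_secrets ~/install-config.yaml ~/pull-secret.txt ~/.ssh/id_rsa.pub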

The following fields in install-config.yaml need to be modified as required:

- baseDomain: The domain name for the cluster, for example example.com.
- metadata.name: The name of the OpenShift Container Platform cluster, for example openshift.
- networking.machineCIDR: The public CIDR of the external network, for example 10.0.0.0/16.
- apiVIP: The VIP used for internal API communication, that is, the IP address that api.<clustername.clusterdomain> resolves to.
- ingressVIP: The VIP used for ingress traffic, for example the IP address that test.apps.<clustername.clusterdomain> resolves to.
- bootstrapProvisioningIP: An available (unused) IP address on the baremetal network.
- provisioningHostIP: An available (unused) IP address on the baremetal network.
- bmc and bootMACAddress: The correct BMC username and password and the boot MAC address of each node.
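Since apiVIP, ingressVIP, bootstrapProvisioningIP and provisioningHostIP must all be distinct, available addresses on the baremetal network, a quick pre-install check can catch typos. This hypothetical sketch (IPv4 only) confirms that each address lies inside machineCIDR and is not reused.

```shell
#!/usr/bin/env bash
# Hypothetical pre-install check: every IP must fall inside machineCIDR
# and must not be reused. IPv4 only.
set -euo pipefail

# Convert a dotted-quad IPv4 address to a 32-bit integer; fail on bad input.
ip_to_int() {
  local IFS=.
  local -a o
  local x num_re='^[0-9]{1,3}$'
  read -r -a o <<< "$1"
  (( ${#o[@]} == 4 )) || return 1
  for x in "${o[@]}"; do
    # 10# forces base 10 so octets like "08" are not read as octal
    [[ $x =~ $num_re ]] && (( 10#$x <= 255 )) || return 1
  done
  echo $(( (10#${o[0]} << 24) | (10#${o[1]} << 16) | (10#${o[2]} << 8) | 10#${o[3]} ))
}

# Succeed when IPv4 address $1 lies inside CIDR $2 (e.g. 10.0.0.0/16).
in_cidr() {
  local ip_i net_i bits mask bits_re='^[0-9]{1,2}$'
  ip_i="$(ip_to_int "$1")" || return 1
  net_i="$(ip_to_int "${2%/*}")" || return 1
  bits="${2#*/}"
  [[ $bits =~ $bits_re ]] && (( 10#$bits <= 32 )) || return 1
  # bash arithmetic is 64-bit, so /0 shifts all ones out and yields mask 0
  mask=$(( (0xFFFFFFFF << (32 - 10#$bits)) & 0xFFFFFFFF ))
  (( (ip_i & mask) == (net_i & mask) ))
}

# First argument is the CIDR; each following IP must be inside it and unique.
check_vips() {
  local cidr="$1" ip seen=" "
  shift
  for ip in "$@"; do
    if ! in_cidr "$ip" "$cidr"; then
      echo "ERROR: ${ip} is not inside ${cidr}" >&2
      return 1
    fi
    if [[ $seen == *" ${ip} "* ]]; then
      echo "ERROR: ${ip} is used more than once" >&2
      return 1
    fi
    seen+="${ip} "
  done
}
```

With the values from the sample configuration (machineCIDR, apiVIP, ingressVIP, bootstrapProvisioningIP, provisioningHostIP):

> check_vips 10.0.0.0/16 10.0.26.61 10.0.26.62 10.0.26.68 10.0.26.69 && echo "addresses look consistent"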

# Create a directory to store cluster configs

> mkdir ~/clusterconfigs

> cp install-config.yaml ~/clusterconfigs
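The installer consumes install-config.yaml from the asset directory when it generates manifests, and a directory left over from an earlier attempt holds installer state (.openshift_install_state.json) that can interfere with a fresh run. A minimal hypothetical sketch of the step above that keeps a backup and refuses a reused directory:

```shell
#!/usr/bin/env bash
# Sketch: prepare a fresh asset directory for openshift-baremetal-install.
set -euo pipefail

prepare_cluster_dir() {
  local config="$1" dir="$2"
  # State from a previous run means this is not a fresh directory
  if [[ -e "${dir}/.openshift_install_state.json" ]]; then
    echo "${dir} contains state from a previous run; use a new directory" >&2
    return 1
  fi
  mkdir -p "$dir"
  cp "$config" "${dir}/install-config.yaml"
  # Keep a copy, because the installer consumes the one in $dir
  cp "$config" "${config}.bak"
}
```

Usage:

> prepare_cluster_dir ~/install-config.yaml ~/clusterconfigs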

# Create the OCP manifests

Run the following command to generate the manifest files:

> sudo ./openshift-baremetal-install --dir ~/clusterconfigs create manifests

# Deploying the OCP cluster

Run the following command to deploy the OCP cluster:

> sudo ./openshift-baremetal-install --dir ~/clusterconfigs --log-level debug create cluster

Output

    DEBUG Still waiting for the cluster to initialize: Working towards 4.6.3: 100% complete
    DEBUG Still waiting for the cluster to initialize: Cluster operator authentication is reporting a failure: WellKnownReadyControllerDegraded: kube-apiserver oauth endpoint https://10.0.26.60:6443/.well-known/oauth-authorization-server is not yet served and authentication operator keeps waiting (check kube-apiserver operator, and check that instances roll out successfully, which can take several minutes per instance)
    DEBUG Cluster is initialized
    INFO Waiting up to 10m0s for the openshift-console route to be created...
    DEBUG Route found in openshift-console namespace: console
    DEBUG Route found in openshift-console namespace: downloads
    DEBUG OpenShift console route is created
    INFO Install complete!
    INFO To access the cluster as the system:admin user when using 'oc', run 'export KUBECONFIG=/home/kni/clusterconfigs/auth/kubeconfig'
    INFO Access the OpenShift web-console here: https://console-openshift-console.apps.ipi.tennet.local
    INFO Login to the console with user: "kubeadmin", and password: "K3zqG-mw9oF-PtZGR-zbhIS"
    DEBUG Time elapsed per stage:
    DEBUG Infrastructure: 20m4s
    DEBUG Bootstrap Complete: 24m53s
    DEBUG Bootstrap Destroy: 11s
    DEBUG Cluster Operators: 43m44s
    INFO Time elapsed: 1h33m18s


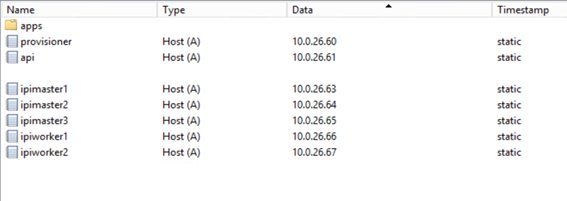

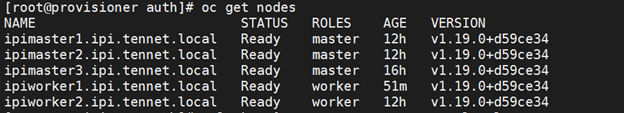

# Validation

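Verify that all nodes are available (STATUS Ready):

> export KUBECONFIG=/home/kni/clusterconfigs/auth/kubeconfig

> oc get nodes

Verify that all cluster operators are available (AVAILABLE True, PROGRESSING False, DEGRADED False):

> oc get clusteroperators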
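Both validation checks can be scripted against the table output of oc with --no-headers, reading it from stdin. This is a hypothetical sketch that assumes the default column layout (nodes: NAME STATUS ROLES AGE VERSION; cluster operators: NAME VERSION AVAILABLE PROGRESSING DEGRADED SINCE); an operator that has not yet reported a VERSION would shift the columns, so treat any result as a prompt to look at the full oc output.

```shell
#!/usr/bin/env bash
# Sketch: list nodes and cluster operators that are not yet healthy.
set -euo pipefail

# Prints nodes whose STATUS is not exactly "Ready"; fails if any are found.
unready_nodes() {
  awk '$2 != "Ready" { print $1; bad = 1 } END { exit bad }'
}

# Prints operators that are not Available=True, Progressing=False,
# Degraded=False; fails if any are found.
unhealthy_operators() {
  awk '$3 != "True" || $4 != "False" || $5 != "False" { print $1; bad = 1 } END { exit bad }'
}
```

Usage, with KUBECONFIG set to /home/kni/clusterconfigs/auth/kubeconfig as printed by the installer:

> oc get nodes --no-headers | unready_nodes && oc get clusteroperators --no-headers | unhealthy_operators && echo "cluster looks healthy"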